Google’s Video Intelligence API is a powerful tool for video analysis, and it can be put to work in many different ways. In this article, we will explore its Face Detection feature: we will upload a video to Cloudinary, analyze the same video with the Google Video Intelligence API, and draw bounding boxes over the detected faces while it plays.

Here’s a glimpse of what we will be going through in this article.

- Log in to Cloudinary and obtain the API credentials needed to access its API.
- Log in to the Google Cloud Platform and obtain credentials that will allow us to call the Video Intelligence API.
- Upload a video to Cloudinary storage.
- Analyze the uploaded video using Google's Video Intelligence API.
- Show the video on a webpage.
- Extract annotations from the Google Video Intelligence analysis.
- Use the HTML Canvas API to draw bounding boxes over the annotated faces.

The final project can be viewed on CodeSandbox.

You can find the full source code in my GitHub repository.

This article assumes that you have already installed Node.js and NPM in your development environment and have a code editor ready. Check out the official Node.js website if you have not installed Node yet. You will also need a video to analyze. This can be any video with people's faces in it. For this article, I have included one here.

Cloudinary is an amazing service that offers a wide range of solutions for media storage and optimization. You can get started with its API for free right away.

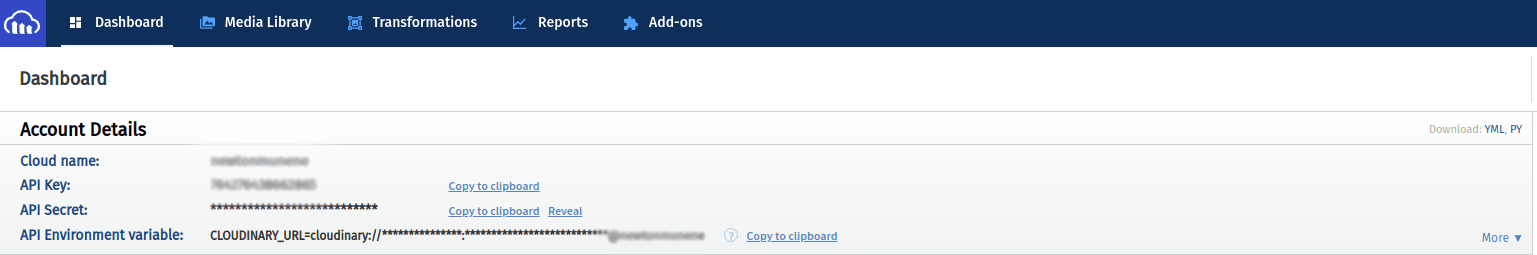

Head over to Cloudinary and sign in or sign up for a free account. Navigate to your Cloudinary console and take note of your Cloud name, API Key, and API Secret. These are displayed at the top left of the console page.

Google has a wide range of APIs. These are all part of the Google Cloud Platform (GCP), and you need a GCP project to access any of them. Let's set one up now. This might feel a bit overwhelming, especially if it's your first time, but don't worry; I will walk you through it.

The quickstart guide page has a brief explanation of how to get started. Create an account if you don't already have one, then navigate to the project selector page and select an existing project or create a new one. Next, make sure billing is enabled for the selected project. Don't worry, though: you won't be charged just for accessing the APIs. Most of the APIs, including the Video Intelligence API, have a free tier with a monthly limit, and as long as you stay within those limits you won't be charged. Even though you are unlikely to exceed them in a development environment, you should still use the API sparingly. Confirm that billing is enabled before moving on.

Our project is now ready to use. We need to enable the APIs that we will be using. In our case, this is the Video Intelligence API. Once that is enabled, proceed to create a service account. This is what we will use to authenticate our application with the API. Make your way to the Create a new service account page and select the project you created earlier.

You will be asked to provide a name for your service account. Give it a sensible name; for this article, I used face-tracking-with-next-js.

Leave the other defaults and go ahead and create the service account. When you navigate back to the service accounts dashboard, your new service account will be listed. Under the More actions button, click Manage keys.

Click Add key and then Create new key.

In the pop-up dialog, make sure to choose the JSON option.

Once you’re done, a .json file will be downloaded to your computer. Take note of this file’s location as we will be using it later.

This article assumes you are familiar with Next.js basics. Let's create a new project. Open your terminal and run the following command in your desired project folder:

```bash
npx create-next-app
```

This scaffolds a basic Next.js project for you. For more advanced installation options, take a look at the official docs. When prompted, give your project an appropriate name, such as face-tracking-with-next-js. Finally, change into your new project directory so we can begin coding:

```bash
cd face-tracking-with-next-js
```

Let's install a few dependencies first. We will need the cloudinary NPM package.

```bash
npm install --save cloudinary
```

Create a folder at the root of your project and name it lib. Inside this folder, create a new file named cloudinary.js. In this file, we will initialize the Cloudinary SDK and create a function to upload videos. Paste the following inside lib/cloudinary.js:

```javascript
// lib/cloudinary.js

// Import the v2 API and rename it to cloudinary
import { v2 as cloudinary } from "cloudinary";

// Initialize the SDK with cloud_name, api_key, and api_secret
cloudinary.config({
  cloud_name: process.env.CLOUD_NAME,
  api_key: process.env.API_KEY,
  api_secret: process.env.API_SECRET,
});

export const handleCloudinaryUpload = (path) => {
  // Create and return a new Promise
  return new Promise((resolve, reject) => {
    // Use the SDK to upload media
    cloudinary.uploader.upload(
      path,
      {
        // Folder to store the video in
        folder: "videos/",
        // Type of resource
        resource_type: "video",
      },
      (error, result) => {
        if (error) {
          // Reject the promise with an error if any
          return reject(error);
        }
        // Resolve the promise with a successful result
        return resolve(result);
      }
    );
  });
};
```

At the top of the file, we import the v2 API from the package we just installed and rename it to cloudinary. This is only for readability; you can leave it as v2, as long as you use that name in the rest of the code. We then initialize the SDK by calling the config method and passing it the cloud_name, api_key, and api_secret. Finally, the handleCloudinaryUpload function handles the uploads for us: we pass in the path to the file we want to upload, and it calls the SDK's upload method with that path and a few options. You can read more about the upload method and the options it accepts in the official documentation. The function resolves or rejects a promise depending on whether the upload succeeds or fails.
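Wrapping a callback-style function in a Promise, as handleCloudinaryUpload does, is a general pattern. As a hedged, self-contained illustration, here it is pulled out into a small generic helper. `callbackToPromise` and the `fakeUpload` stub are hypothetical names used only for this sketch; they are not part of the Cloudinary SDK. Recent versions of the Cloudinary Node.js SDK can also return a Promise directly when you omit the callback, but check the documentation for your SDK version before relying on that.

```javascript
// Hypothetical helper: calls `fn` with the given arguments plus a Node-style
// (error, result) callback, and returns a Promise for the result.
function callbackToPromise(fn, ...args) {
  return new Promise((resolve, reject) => {
    fn(...args, (error, result) => {
      if (error) {
        // Same behaviour as handleCloudinaryUpload: reject on error...
        reject(error);
        return;
      }
      // ...and resolve with the result otherwise
      resolve(result);
    });
  });
}

// Hypothetical stand-in for cloudinary.uploader.upload(path, options, callback),
// so the sketch runs without credentials or network access.
function fakeUpload(path, options, callback) {
  if (!path) {
    callback(new Error("No path provided"));
    return;
  }
  callback(null, {
    secure_url: `https://example.com/${options.folder}${path}`,
    resource_type: options.resource_type,
  });
}

// Usage: mirrors the call made in handleCloudinaryUpload
callbackToPromise(fakeUpload, "people.mp4", {
  folder: "videos/",
  resource_type: "video",
}).then((result) => console.log(result.secure_url)); // logs https://example.com/videos/people.mp4
```

Keeping the wrapper generic means the same helper could wrap other callback-based SDK methods without writing a new Promise each time.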

One thing you might notice is that we’ve assigned our cloud_name, api_key, and api_secret to environment variables that we have not created yet. Let us create those now.

Create a new file called .env.local at the root of your project. Paste the following inside this file.

```
CLOUD_NAME=YOUR_CLOUD_NAME
API_KEY=YOUR_API_KEY
API_SECRET=YOUR_API_SECRET
```

Make sure to replace YOUR_CLOUD_NAME, YOUR_API_KEY, and YOUR_API_SECRET with the values we noted from the Cloudinary console earlier.

Read more about environment variables in Next.js here.
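If any of these variables is missing, cloudinary.config simply receives `undefined`, and the problem typically only surfaces later when an upload is attempted. A small guard can report it up front instead. This is a hedged sketch and not part of the original project; `requireEnv` is a hypothetical helper that takes the variable names and an env-like object (defaulting to `process.env`).

```javascript
// Hypothetical helper: returns the requested environment variables and throws
// a single descriptive error listing every one that is missing or empty.
function requireEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  // e.g. { CLOUD_NAME: "...", API_KEY: "...", API_SECRET: "..." }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}

// Usage, e.g. at the top of lib/cloudinary.js:
// const { CLOUD_NAME, API_KEY, API_SECRET } = requireEnv(["CLOUD_NAME", "API_KEY", "API_SECRET"]);
// cloudinary.config({ cloud_name: CLOUD_NAME, api_key: API_KEY, api_secret: API_SECRET });
```

Collecting all missing names before throwing means you fix .env.local once instead of discovering the gaps one at a time.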

Now that we have that in place, let's create an API route where we can post our video and upload it to Cloudinary. If you're not familiar with Next.js API routes, read more about them in the official docs.

Create a new file called videos.js inside the pages/api/ folder and paste the following code inside it:

```javascript
// pages/api/videos.js
// Next.js API route support: https://nextjs.org/docs/api-routes/introduction

import { promises as fs } from "fs";
import { annotateVideoWithLabels } from "../../lib/google";
import { handleCloudinaryUpload } from "../../lib/cloudinary";

const videosController = async (req, res) => {
  // Check the incoming HTTP method. Handle the POST request method and reject the rest.
  switch (req.method) {
    // Handle the POST request method
    case "POST": {
      try {
        const result = await handlePostRequest();

        // Respond to the request with a status code 201 (Created)
        return res.status(201).json({
          message: "Success",
          result,
        });
      } catch (error) {
        // In case of an error, respond to the request with a status code 400 (Bad Request).
        // Error instances serialize to {} with JSON, so send the message instead.
        return res.status(400).json({
          message: "Error",
          error: error instanceof Error ? error.message : error,
        });
      }
    }

    // Reject other HTTP methods with a status code 405
    default: {
      return res.status(405).json({ message: "Method Not Allowed" });
    }
  }
};

const handlePostRequest = async () => {
  // Path to the file you want to upload
  const pathToFile = "public/videos/people.mp4";

  // Upload your file to Cloudinary
  const uploadResult = await handleCloudinaryUpload(pathToFile);

  // Read the file using fs. This results in a Buffer
  const file = await fs.readFile(pathToFile);

  // Convert the file to a base64 string in preparation for analyzing the video with Google's Video Intelligence API
  const inputContent = file.toString("base64");

  // Analyze the video using Google's Video Intelligence API
  const annotations = await annotateVideoWithLabels(inputContent);

  // Return an object with the Cloudinary upload result and the video analysis result
  return { uploadResult, annotations };
};

export default videosController;
```

Let's go over this. We define a function that handles the HTTP requests, as covered in the official docs. Inside it, we switch on the request method and assign POST requests to a handler function called handlePostRequest. All other methods receive a 405 (Method Not Allowed) response, since we will not be using them in this tutorial.
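The switch statement is one way to route by HTTP method. A hedged alternative is a lookup table of handlers that also sets the `Allow` header on 405 responses, which the HTTP specification requires for that status code. `createMethodHandler` is a hypothetical name, and `createMockResponse` only imitates the `setHeader`, `status`, and `json` methods that a Next.js API route response provides, so the sketch can run outside Next.js.

```javascript
// Hypothetical helper: maps HTTP methods to handler functions and answers
// any other method with 405 Method Not Allowed plus an Allow header.
function createMethodHandler(handlers) {
  const allowed = Object.keys(handlers);
  return async (req, res) => {
    const handler = handlers[req.method];
    if (!handler) {
      res.setHeader("Allow", allowed.join(", "));
      return res.status(405).json({ message: "Method Not Allowed" });
    }
    return handler(req, res);
  };
}

// Minimal mock of the Next.js response helpers, for demonstration only
function createMockResponse() {
  const res = { statusCode: 200, headers: {}, body: undefined };
  res.setHeader = (name, value) => {
    res.headers[name] = value;
  };
  res.status = (code) => {
    res.statusCode = code;
    return res;
  };
  res.json = (body) => {
    res.body = body;
    return res;
  };
  return res;
}

// Usage: in pages/api/videos.js this could become
// export default createMethodHandler({ POST: async (req, res) => { ... } });
const handler = createMethodHandler({
  POST: async (req, res) => res.status(201).json({ message: "Success" }),
});
```

Adding support for another method later then means adding one entry to the object, and the `Allow` header stays in sync automatically.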

In the handlePostRequest function, we define the path to the file we want to upload. For brevity and simplicity, this tutorial uses a static path to a locally stored file, stored in the pathToFile variable. Change this path to point to your own video. In a real-world application, you would instead let the user select a video on their computer, post it to the backend, and then upload that file to Cloudinary.

We then delegate the actual upload to the handleCloudinaryUpload function that we created earlier in lib/cloudinary.js. Once the upload is complete, we analyze the same video using Google Video Intelligence, delegating that work to a function called annotateVideoWithLabels. Finally, we return both the upload result and the video analysis result. The only missing piece is annotateVideoWithLabels itself, so let's create it.

First, install the Video Intelligence NPM package:

```bash
npm install --save @google-cloud/video-intelligence
```

Once that is installed, we need to define a few environment variables that we can reference in our code. Open the .env.local file we created earlier and add the following below the existing variables:

```
GCP_PROJECT_ID=YOUR_GCP_PROJECT_ID
GCP_PRIVATE_KEY=YOUR_GCP_PRIVATE_KEY
GCP_CLIENT_EMAIL=YOUR_GCP_CLIENT_EMAIL
```

Open the .json file we downloaded when creating the service account in a text editor. Inside it, you will find the values for project_id, private_key, and client_email. Replace YOUR_GCP_PROJECT_ID, YOUR_GCP_PRIVATE_KEY, and YOUR_GCP_CLIENT_EMAIL with those values.

Be careful with that file. Do not commit it to version control, since anyone with this file can access your GCP project. The same applies to .env.local, which now holds the same secrets.
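If you would rather not copy the three values by hand, here is a hedged sketch that builds the `.env.local` lines from the parsed key file. In the raw .json text the private key is a single line containing `\n` escape sequences, but once the file is parsed with `JSON.parse` those become real newlines, so the sketch escapes them again. The `.replace(/\\n/gm, "\n")` call in the `lib/google.js` file we create next turns them back into newlines. `serviceAccountToEnv` is a hypothetical name and the key below is fake.

```javascript
// Hypothetical helper: turns a parsed service account key (the downloaded
// .json file) into the three GCP_* lines used in .env.local.
function serviceAccountToEnv(key) {
  for (const field of ["project_id", "client_email", "private_key"]) {
    if (!key[field]) {
      throw new Error(`Service account key is missing "${field}"`);
    }
  }
  return [
    `GCP_PROJECT_ID=${key.project_id}`,
    // Escape real newlines as the two characters "\" + "n" so the key fits on one line
    `GCP_PRIVATE_KEY=${key.private_key.replace(/\n/g, "\\n")}`,
    `GCP_CLIENT_EMAIL=${key.client_email}`,
  ].join("\n");
}

// Usage with a fake key; in practice you would JSON.parse the downloaded file
const fakeKey = {
  project_id: "my-project",
  client_email: "face-tracking@my-project.iam.gserviceaccount.com",
  private_key: "-----BEGIN PRIVATE KEY-----\nABC\n-----END PRIVATE KEY-----\n",
};
console.log(serviceAccountToEnv(fakeKey));
```

The output is left unquoted on purpose: some dotenv-style loaders expand `\n` inside double-quoted values themselves, and the replace call in lib/google.js is a harmless no-op in that case.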

Next, create a file called google.js under the lib/ folder. Paste the following code inside this file.

```javascript
// lib/google.js

import {
  VideoIntelligenceServiceClient,
  protos,
} from "@google-cloud/video-intelligence";

const client = new VideoIntelligenceServiceClient({
  // Google Cloud Platform project ID
  projectId: process.env.GCP_PROJECT_ID,
  credentials: {
    client_email: process.env.GCP_CLIENT_EMAIL,
    // Restore the newlines that are stored as literal "\n" sequences in .env.local
    private_key: process.env.GCP_PRIVATE_KEY.replace(/\\n/gm, "\n"),
  },
});

/**
 * Analyzes a video for faces using Google's Video Intelligence API.
 *
 * @param {string | Uint8Array} inputContent Base64 string or bytes of the video
 * @returns {Promise<protos.google.cloud.videointelligence.v1.VideoAnnotationResults>}
 */
export const annotateVideoWithLabels = async (inputContent) => {
  // Grab the operation using array destructuring. The operation is the first object in the array.
  const [operation] = await client.annotateVideo({
    // Input content
    inputContent: inputContent,
    // Video Intelligence features
    features: ["FACE_DETECTION"],
    // Options for context of the video being analyzed
    videoContext: {
      // Options for the face detection feature
      faceDetectionConfig: {
        includeBoundingBoxes: true,
        includeAttributes: true,
      },
    },
  });

  // Wait for the long-running operation to complete
  const [operationResult] = await operation.promise();

  // Gets annotations for video
  const [annotations] = operationResult.annotationResults;

  return annotations;
};
```

We first import VideoIntelligenceServiceClient from the package we just installed and create a new client. The client takes the project ID and a credentials object containing the service account's client email and private key. There are many different ways of authenticating with Google APIs; you can read about them in the official documentation. To learn more about the method used above, take a look at these GitHub docs. We use this approach to avoid having to ship our application with the sensitive .json file we downloaded.
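Because the client reads the key with `process.env.GCP_PRIVATE_KEY.replace(...)`, a missing variable fails with a generic `TypeError` about calling `replace` on `undefined`. The hedged sketch below combines a presence check with the same newline restoration the article uses; `readPrivateKey` is a hypothetical helper that takes an env-like object so it can be tried outside Next.js.

```javascript
// Hypothetical helper: reads the private key from an env-like object and
// restores the newlines that were stored as literal "\n" sequences.
function readPrivateKey(env = process.env) {
  const raw = env.GCP_PRIVATE_KEY;
  if (!raw) {
    throw new Error("GCP_PRIVATE_KEY is not set; check .env.local");
  }
  // Same transformation as in lib/google.js: backslash + "n" becomes a newline
  return raw.replace(/\\n/g, "\n");
}

// Usage inside lib/google.js:
// credentials: { client_email: process.env.GCP_CLIENT_EMAIL, private_key: readPrivateKey() }
```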

With the client ready, we define the annotateVideoWithLabels function, which handles the video analysis. We pass it a base64 string or a byte array (such as a Buffer) and call the client's annotateVideo method with a few options. The official documentation covers these in more detail, but let's touch on a few of them:

- `inputContent`: the base64 string or byte array of your video file. If your video is hosted on Google Cloud Storage, use the `inputUri` field instead. Only Google Cloud Storage URIs are supported there, so for videos hosted anywhere else you have to use `inputContent`.
- `features`: an array of the Video Intelligence features to run on the video. Read more in the documentation. For this tutorial, we only need the `FACE_DETECTION` feature, which identifies people's faces.
- `videoContext.faceDetectionConfig.includeBoundingBoxes`: instructs the analyzer to include bounding boxes (coordinates) for where the faces are located in the frame.
- `videoContext.faceDetectionConfig.includeAttributes`: instructs the analyzer to include facial attributes, e.g. smiling or wearing glasses. We won't use these in this tutorial, but they are a nice addition to have.
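The choice between `inputUri` and `inputContent` can be made in one place. In the hedged sketch below, a hypothetical `buildFaceDetectionRequest` helper sends `gs://` URIs as `inputUri` and anything else (a base64 string or a Buffer) as `inputContent`, with the same face detection options used in annotateVideoWithLabels. It only builds the request object; you would pass the result to `client.annotateVideo` yourself.

```javascript
// Hypothetical helper: builds the request object for client.annotateVideo().
// Cloud Storage URIs (gs://bucket/object) go in inputUri; raw video data
// (a base64 string or a Buffer/Uint8Array) goes in inputContent.
function buildFaceDetectionRequest(input) {
  const request = {
    features: ["FACE_DETECTION"],
    videoContext: {
      faceDetectionConfig: {
        includeBoundingBoxes: true,
        includeAttributes: true,
      },
    },
  };
  if (typeof input === "string" && input.startsWith("gs://")) {
    request.inputUri = input;
  } else {
    request.inputContent = input;
  }
  return request;
}

// Usage:
// const [operation] = await client.annotateVideo(buildFaceDetectionRequest("gs://my-bucket/people.mp4"));
```

A base64 string can never start with `gs://` (base64 has no colon), so the prefix check cannot misclassify encoded video data.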

We wait for the operation to complete by calling promise() on it and awaiting the returned Promise. We then get the operation result using JavaScript's array destructuring. To understand the structure of the resulting data, take a look at the official documentation. The structure looks roughly like this:

```
{
  annotationResults: [
    {
      segment: {
        startTimeOffset: {},
        endTimeOffset: {
          seconds: string,
          nanos: number,
        },
      },
      faceDetectionAnnotations: [
        {
          tracks: [
            {
              segment: {
                startTimeOffset: {
                  seconds: string,
                  nanos: number,
                },
                endTimeOffset: {
                  seconds: string,
                  nanos: number,
                },
              },
              timestampedObjects: [
                {
                  normalizedBoundingBox: {
                    left: number,
                    top: number,
                    right: number,
                    bottom: number,
                  },
                  timeOffset: {
                    seconds: string,
                    nanos: number,
                  },
                  attributes: [
                    {
                      name: string,
                      confidence: number,
                    },
                  ],
                },
              ],
              attributes: [
                {
                  name: string,
                  confidence: number,
                },
              ],
              confidence: number,
            },
          ],
          thumbnail: string,
          version: string,
        },
      ],
    },
  ],
}
```

We only need the first item in the annotationResults array, so once again we use JavaScript's array destructuring to get it. With this, we have everything we need to upload the video to Cloudinary and analyze it for faces. Next, we need to show the video on the client side.
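To see how the nested structure above is traversed, here is a hedged sketch that walks `faceDetectionAnnotations`, then `tracks`, then `timestampedObjects`, and summarizes each detected face. `summarizeFaces` is a hypothetical helper, and the sample data is made up in the shape shown above with most fields omitted.

```javascript
// Hypothetical helper: walks faceDetectionAnnotations -> tracks ->
// timestampedObjects and summarises each detected face.
function summarizeFaces(annotations) {
  return (annotations.faceDetectionAnnotations || []).map((face, index) => {
    const tracks = face.tracks || [];
    // Total number of bounding boxes across all tracks of this face
    const boxes = tracks.reduce(
      (total, track) => total + (track.timestampedObjects || []).length,
      0
    );
    // Highest track confidence (0 if the face has no tracks)
    const bestConfidence = Math.max(0, ...tracks.map((track) => track.confidence || 0));
    return { face: index, tracks: tracks.length, boxes, bestConfidence };
  });
}

// Made-up sample in the shape shown above (most fields omitted)
const sample = {
  faceDetectionAnnotations: [
    {
      tracks: [
        { confidence: 0.9, timestampedObjects: [{}, {}, {}] },
        { confidence: 0.7, timestampedObjects: [{}] },
      ],
    },
  ],
};

// Logs one summary: face 0 with 2 tracks, 4 boxes, bestConfidence 0.9
console.log(summarizeFaces(sample));
```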

Open pages/index.js and replace its contents with the following code:

```jsx
import Head from "next/head";
import Image from "next/image";
import { useRef, useState } from "react";

export default function Home() {
  /**
   * This stores a reference to the video HTML element
   * @type {import("react").MutableRefObject<HTMLVideoElement>}
   */
  const playerRef = useRef(null);

  /**
   * This stores a reference to the HTML canvas
   * @type {import("react").MutableRefObject<HTMLCanvasElement>}
   */
  const canvasRef = useRef(null);

  const [video, setVideo] = useState();
  const [loading, setLoading] = useState(false);

  const handleUploadVideo = async () => {
    try {
      // Set loading to true
      setLoading(true);

      // Make a POST request to the `api/videos/` endpoint
      const response = await fetch("/api/videos", {
        method: "POST",
      });

      const data = await response.json();

      // Check if the response is successful
      if (response.status >= 200 && response.status < 300) {
        const result = data.result;

        // Update our video state with the results
        setVideo(result);
      } else {
        throw data;
      }
    } catch (error) {
      // TODO: Handle error
      console.error(error);
    } finally {
      // Set loading to false once a response is available
      setLoading(false);
    }
  };

  return [
    <div key="main div">
      <Head>
        <title>Face Tracking Using Google Video Intelligence</title>
        <meta
          name="description"
          content="Face Tracking Using Google Video Intelligence"
        />
        <link rel="icon" href="/favicon.ico" />
      </Head>

      <header>
        <h1>Face Tracking Using Google Video Intelligence</h1>
      </header>

      <main className="container">
        <div className="wrapper">
          <div className="actions">
            <button onClick={handleUploadVideo} disabled={loading}>
              Upload
            </button>
          </div>

          <hr />

          {loading
            ? [
                <div className="loading" key="loading div">
                  Please be patient as the video uploads...
                </div>,
                <hr key="loading div break" />,
              ]
            : null}

          {video ? (
            <div className="video-wrapper">
              <div className="video-container">
                <video
                  width={1000}
                  height={500}
                  src={video.uploadResult.secure_url}
                  ref={playerRef}
                  onTimeUpdate={onTimeUpdate}
                ></video>
                <canvas ref={canvasRef} height={500} width={1000}></canvas>
                <div className="controls">
                  <button
                    onClick={() => {
                      playerRef.current.play();
                    }}
                  >
                    Play
                  </button>
                  <button
                    onClick={() => {
                      playerRef.current.pause();
                    }}
                  >
                    Pause
                  </button>
                </div>
              </div>

              <div className="thumbnails-wrapper">
                Thumbnails
                <div className="thumbnails">
                  {video.annotations.faceDetectionAnnotations.map(
                    (annotation, annotationIndex) => {
                      return (
                        <div
                          className="thumbnail"
                          key={`annotation${annotationIndex}`}
                        >
                          <Image
                            className="thumbnail-image"
                            src={`data:image/jpeg;base64,${annotation.thumbnail}`}
                            alt="Thumbnail"
                            layout="fill"
                          ></Image>
                        </div>
                      );
                    }
                  )}
                </div>
              </div>
            </div>
          ) : (
            <div className="no-videos">
              No video yet. Get started by clicking on upload above
            </div>
          )}
        </div>
      </main>
    </div>,
  ];
}
```

This is a basic React component, but let's go over what's happening. Inside the component, we use useRef hooks to store references to the HTML video element and the canvas element: playerRef and canvasRef, respectively. Next, we have useState hooks that store the video upload result and the loading state. We then define a handleUploadVideo function that posts to the /api/videos endpoint so the video can be uploaded to Cloudinary and analyzed. Remember that we're only using a locally stored file; in a real-world application, you would handle a form submission and post the selected video file instead.

Moving on to the JSX, we have an upload button that triggers handleUploadVideo, a div that only shows when the loading state is true, and a div that holds our video and canvas elements once the video state contains data. Make sure to give the video and canvas elements an explicit width and height; otherwise, you might run into some odd issues with the Canvas API. The canvas is stacked above the video element (the styles give it a higher z-index), so it intercepts clicks on the video, and the video element has no native controls enabled anyway. We get around this by adding our own Play and Pause buttons above the canvas. Finally, we have a div that shows thumbnails of all the detected faces.
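A canvas has two sizes: its drawing buffer, set by the `width` and `height` attributes (300 by 150 pixels by default), and its displayed CSS size. When they differ, drawings are stretched, which is one source of the odd issues mentioned above. If you prefer not to hard-code `1000` and `500`, a hedged alternative is to copy the video element's rendered size onto the canvas before drawing. `syncCanvasToVideo` is a hypothetical helper; it only reads `clientWidth`/`clientHeight` and writes `width`/`height`, so plain objects can stand in for the DOM elements when trying it out.

```javascript
// Hypothetical helper: resizes the canvas drawing buffer to match the video
// element's rendered size. Assigning width/height clears the canvas, so it
// only does so when the size actually changed.
function syncCanvasToVideo(canvas, videoElement) {
  const width = videoElement.clientWidth;
  const height = videoElement.clientHeight;
  if (canvas.width !== width || canvas.height !== height) {
    canvas.width = width;
    canvas.height = height;
    return true; // resized
  }
  return false; // already in sync
}

// Usage in the browser, e.g. at the top of onTimeUpdate:
// syncCanvasToVideo(canvasRef.current, playerRef.current);
```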

We now have our video and analysis results. All that's left is to figure out where the faces are and draw boxes over them. How do we do that? The HTML video element fires a timeupdate event, which React exposes through the onTimeUpdate prop. Whenever the video's current time updates, we iterate over the detected faces and check whether the timestamp of each detected face matches the video's current time. If it does, we take the bounding box coordinates and use them to draw on the canvas. There are other ways to do this that could improve performance. For example, you could iterate over the detected faces first and attach a timeupdate listener per face, drawing each face separately instead of drawing all faces at once. We'll keep it simple and go with the first approach.
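Here is a hedged sketch of a different performance-oriented variation (not the approach this article uses): flatten every timestamped box into one array sorted by time once, when the analysis result arrives, and on each time update use a binary search to find only the boxes within a small tolerance of the current time. `buildTimeline` and `boxesNear` are hypothetical names, and the default tolerance of ±0.05 s is an assumption, roughly comparable to the article's rounding to one decimal place. Whichever approach you use, keep in mind that the HTML specification lets browsers space timeupdate events as much as 250 ms apart, so many frames never get their own event.

```javascript
// Converts a { seconds, nanos } time offset into seconds; seconds may be a string.
function offsetToSeconds(offset = {}) {
  return Number(offset.seconds ?? 0) + (offset.nanos ?? 0) / 1e9;
}

// Hypothetical helper: flattens all boxes into one array sorted by time.
function buildTimeline(annotations) {
  const timeline = [];
  for (const face of annotations.faceDetectionAnnotations ?? []) {
    for (const track of face.tracks ?? []) {
      for (const object of track.timestampedObjects ?? []) {
        timeline.push({
          time: offsetToSeconds(object.timeOffset),
          box: object.normalizedBoundingBox,
        });
      }
    }
  }
  return timeline.sort((a, b) => a.time - b.time);
}

// Hypothetical helper: returns the boxes whose time is within `tolerance`
// seconds of `currentTime`, using a binary search for the first candidate.
function boxesNear(timeline, currentTime, tolerance = 0.05) {
  let low = 0;
  let high = timeline.length;
  // Find the first entry with time >= currentTime - tolerance
  while (low < high) {
    const mid = (low + high) >> 1;
    if (timeline[mid].time < currentTime - tolerance) {
      low = mid + 1;
    } else {
      high = mid;
    }
  }
  // Collect entries until we pass currentTime + tolerance
  const result = [];
  for (let i = low; i < timeline.length && timeline[i].time <= currentTime + tolerance; i++) {
    result.push(timeline[i].box);
  }
  return result;
}

// Usage: build once after setVideo(result), e.g. const timeline = buildTimeline(result.annotations);
// then in onTimeUpdate: for (const box of boxesNear(timeline, playerRef.current.currentTime)) { ...draw... }
```

The trade-off is a one-time sort after the analysis arrives in exchange for a logarithmic lookup on every time update, instead of scanning every box of every face each time.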

You may have noticed that in the JSX we wrote in pages/index.js, the video element has an onTimeUpdate event listener, but we haven't defined the handler yet.

```jsx
{/* ... */}
<video
  // ...
  onTimeUpdate={onTimeUpdate}
></video>
{/* ... */}
```

Let's do that now. Add the following function to the Home component in pages/index.js, just above the handleUploadVideo function:

```javascript
const onTimeUpdate = (ev) => {
  // Video element scroll height and scroll width. We use the element's dimensions instead of
  // the video's intrinsic dimensions because they need to match the canvas element.
  const videoHeight = playerRef.current.scrollHeight;
  const videoWidth = playerRef.current.scrollWidth;

  // Get the 2D canvas context
  const ctx = canvasRef.current.getContext("2d");

  // Whenever the video time updates, clear any previous drawings on the canvas
  ctx.clearRect(0, 0, videoWidth, videoHeight);

  // The video's current time in seconds
  const currentTime = playerRef.current.currentTime;

  // Iterate over detected faces
  for (const annotation of video.annotations.faceDetectionAnnotations) {
    // Each detected face may have different tracks
    for (const track of annotation.tracks) {
      // Get the timestamps for all bounding boxes
      for (const face of track.timestampedObjects) {
        // Get the timestamp in seconds (seconds arrives as a string)
        const timestamp =
          parseInt(face.timeOffset.seconds ?? 0, 10) +
          (face.timeOffset.nanos ?? 0) / 1000000000;

        // Check if the timestamp and the video's current time match,
        // after rounding both to one decimal place
        if (timestamp.toFixed(1) === currentTime.toFixed(1)) {
          // Get the x coordinate of the origin of the bounding box
          const x = (face.normalizedBoundingBox.left || 0) * videoWidth;

          // Get the y coordinate of the origin of the bounding box
          const y = (face.normalizedBoundingBox.top || 0) * videoHeight;

          // Get the width of the bounding box
          const width =
            ((face.normalizedBoundingBox.right || 0) -
              (face.normalizedBoundingBox.left || 0)) *
            videoWidth;

          // Get the height of the bounding box
          const height =
            ((face.normalizedBoundingBox.bottom || 0) -
              (face.normalizedBoundingBox.top || 0)) *
            videoHeight;

          ctx.lineWidth = 4;
          ctx.strokeStyle = "#800080";
          ctx.strokeRect(x, y, width, height);
        }
      }
    }
  }
};
```

Let's go over that. This function runs every time the video's time updates. We first get the video element's height and width. Note that these are the element's dimensions, not the video's intrinsic resolution. This matters because they need to match the dimensions of the canvas placed above the video element. Next, we get the canvas's 2D context, which is where the drawing happens. We clear it to prepare for new drawings and then read the video's current time.

With all that in place, we iterate over the detected faces, their tracks, and the timestamped objects in each track. Each timestamped object contains a time offset indicating when the face appears in the video, and a bounding box with left, right, top, and bottom coordinates relative to the video's width and height. Read about this here. A time offset is an object with seconds and nanos fields, so we convert the nanoseconds to seconds and add them to the seconds to get the total time in seconds. Next, we round both the timestamp and the video's current time to one decimal place (using toFixed(1)) and check whether they match. If they do, the face is in the current frame and we should draw a bounding box over it. We round to one decimal place to get an approximate match rather than an exact point in time: depending on the video's frame rate, an exact timestamp and the video's current time may never match.
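The two steps described above, converting a `{ seconds, nanos }` offset to seconds and comparing it with the video's current time, can be isolated as small pure functions. In this hedged sketch, `durationToSeconds` and `matchesByRounding` reproduce what onTimeUpdate does, while `matchesByTolerance` is a hypothetical alternative that compares the absolute difference instead. All three names are illustrative.

```javascript
// Converts a Video Intelligence time offset ({ seconds, nanos }) to seconds.
// As the structure above shows, `seconds` is a string, so it is converted explicitly.
function durationToSeconds({ seconds = 0, nanos = 0 } = {}) {
  return Number(seconds) + nanos / 1e9;
}

// The article's check: both times rounded to one decimal place must be equal.
function matchesByRounding(timestamp, currentTime) {
  return timestamp.toFixed(1) === currentTime.toFixed(1);
}

// Hypothetical alternative: times match if they are within `tolerance` seconds.
function matchesByTolerance(timestamp, currentTime, tolerance = 0.05) {
  return Math.abs(timestamp - currentTime) <= tolerance;
}

// Usage
durationToSeconds({ seconds: "3", nanos: 250000000 }); // 3.25
// 1.04 and 1.06 are only 0.02 s apart but round to "1.0" and "1.1"
matchesByRounding(1.04, 1.06); // false
matchesByTolerance(1.04, 1.06); // true
```

Rounding splits time into fixed 0.1 s buckets, so two nearby times on either side of a bucket boundary never match; a tolerance check avoids that edge case at the cost of one extra parameter to tune.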

Once we know that a face is in the frame, we compute the x and y coordinates of the bounding box's origin, along with its width and height. The box values are normalized (between 0 and 1) relative to the video's width and height, so we multiply the left and right values by the video width and the top and bottom values by the video height. See this for more info. Finally, we set a line width and stroke style and draw a rectangle on the canvas using the coordinates and dimensions we just computed.
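The conversion from a normalized box to canvas pixels can be checked on its own. This hedged sketch, using a hypothetical `toPixelRect` helper, performs the same arithmetic as onTimeUpdate, including treating a missing side as 0, and returns the four values that `strokeRect(x, y, width, height)` expects.

```javascript
// Hypothetical helper: converts a normalized bounding box (values 0..1,
// missing sides treated as 0 as in onTimeUpdate) into pixel values for
// CanvasRenderingContext2D.strokeRect(x, y, width, height).
function toPixelRect(box, canvasWidth, canvasHeight) {
  const left = box.left || 0;
  const top = box.top || 0;
  const right = box.right || 0;
  const bottom = box.bottom || 0;
  return {
    x: left * canvasWidth,
    y: top * canvasHeight,
    width: (right - left) * canvasWidth,
    height: (bottom - top) * canvasHeight,
  };
}

// Usage: a box covering the lower middle of a 1000x500 video
const rect = toPixelRect({ left: 0.25, top: 0.5, right: 0.75, bottom: 1 }, 1000, 500);
// rect is { x: 250, y: 250, width: 500, height: 250 }
// ctx.strokeRect(rect.x, rect.y, rect.width, rect.height);
```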

And there we have it. Here's the full code for pages/index.js:

```jsx
// pages/index.js

import Head from "next/head";
import Image from "next/image";
import { useRef, useState } from "react";

export default function Home() {
  /**
   * @type {import("react").MutableRefObject<HTMLVideoElement>}
   */
  const playerRef = useRef(null);

  /**
   * @type {import("react").MutableRefObject<HTMLCanvasElement>}
   */
  const canvasRef = useRef(null);

  const [video, setVideo] = useState();
  const [loading, setLoading] = useState(false);

  const onTimeUpdate = (ev) => {
    // Video element scroll height and scroll width. We use the element's dimensions instead of
    // the video's intrinsic dimensions because they need to match the canvas element.
    const videoHeight = playerRef.current.scrollHeight;
    const videoWidth = playerRef.current.scrollWidth;

    // Get the 2D canvas context
    const ctx = canvasRef.current.getContext("2d");

    // Whenever the video time updates, clear any previous drawings on the canvas
    ctx.clearRect(0, 0, videoWidth, videoHeight);

    // The video's current time in seconds
    const currentTime = playerRef.current.currentTime;

    // Iterate over detected faces
    for (const annotation of video.annotations.faceDetectionAnnotations) {
      // Each detected face may have different tracks
      for (const track of annotation.tracks) {
        // Get the timestamps for all bounding boxes
        for (const face of track.timestampedObjects) {
          // Get the timestamp in seconds (seconds arrives as a string)
          const timestamp =
            parseInt(face.timeOffset.seconds ?? 0, 10) +
            (face.timeOffset.nanos ?? 0) / 1000000000;

          // Check if the timestamp and the video's current time match,
          // after rounding both to one decimal place
          if (timestamp.toFixed(1) === currentTime.toFixed(1)) {
            // Get the x coordinate of the origin of the bounding box
            const x = (face.normalizedBoundingBox.left || 0) * videoWidth;

            // Get the y coordinate of the origin of the bounding box
            const y = (face.normalizedBoundingBox.top || 0) * videoHeight;

            // Get the width of the bounding box
            const width =
              ((face.normalizedBoundingBox.right || 0) -
                (face.normalizedBoundingBox.left || 0)) *
              videoWidth;

            // Get the height of the bounding box
            const height =
              ((face.normalizedBoundingBox.bottom || 0) -
                (face.normalizedBoundingBox.top || 0)) *
              videoHeight;

            ctx.lineWidth = 4;
            ctx.strokeStyle = "#800080";
            ctx.strokeRect(x, y, width, height);
          }
        }
      }
    }
  };

  const handleUploadVideo = async () => {
    try {
      // Set loading to true
      setLoading(true);

      // Make a POST request to the `api/videos/` endpoint
      const response = await fetch("/api/videos", {
        method: "POST",
      });

      const data = await response.json();

      // Check if the response is successful
      if (response.status >= 200 && response.status < 300) {
        const result = data.result;

        // Update our video state with the results
        setVideo(result);
      } else {
        throw data;
      }
    } catch (error) {
      // TODO: Handle error
      console.error(error);
    } finally {
      // Set loading to false once a response is available
      setLoading(false);
    }
  };

  return [
    <div key="main div">
      <Head>
        <title>Face Tracking Using Google Video Intelligence</title>
        <meta
          name="description"
          content="Face Tracking Using Google Video Intelligence"
        />
        <link rel="icon" href="/favicon.ico" />
      </Head>

      <header>
        <h1>Face Tracking Using Google Video Intelligence</h1>
      </header>

      <main className="container">
        <div className="wrapper">
          <div className="actions">
            <button onClick={handleUploadVideo} disabled={loading}>
              Upload
            </button>
          </div>

          <hr />

          {loading
            ? [
                <div className="loading" key="loading div">
                  Please be patient as the video uploads...
                </div>,
                <hr key="loading div break" />,
              ]
            : null}

          {video ? (
            <div className="video-wrapper">
              <div className="video-container">
                <video
                  width={1000}
                  height={500}
                  src={video.uploadResult.secure_url}
                  ref={playerRef}
                  onTimeUpdate={onTimeUpdate}
                ></video>
                <canvas ref={canvasRef} height={500} width={1000}></canvas>
                <div className="controls">
                  <button
                    onClick={() => {
                      playerRef.current.play();
                    }}
                  >
                    Play
                  </button>
                  <button
                    onClick={() => {
                      playerRef.current.pause();
                    }}
                  >
                    Pause
                  </button>
                </div>
              </div>

              <div className="thumbnails-wrapper">
                Thumbnails
                <div className="thumbnails">
                  {video.annotations.faceDetectionAnnotations.map(
                    (annotation, annotationIndex) => {
                      return (
                        <div
                          className="thumbnail"
                          key={`annotation${annotationIndex}`}
                        >
                          <Image
                            className="thumbnail-image"
                            src={`data:image/jpeg;base64,${annotation.thumbnail}`}
                            alt="Thumbnail"
                            layout="fill"
                          ></Image>
                        </div>
                      );
                    }
                  )}
                </div>
              </div>
            </div>
          ) : (
            <div className="no-videos">
              No video yet. Get started by clicking on upload above
            </div>
          )}
        </div>
      </main>
    </div>,
    <style key="style tag" jsx="true">{`
      * {
        box-sizing: border-box;
      }

      header {
        height: 100px;
        background-color: purple;
        display: flex;
        justify-content: center;
        align-items: center;
      }

      header h1 {
        padding: 0;
        margin: 0;
        color: white;
      }

      .container {
        min-height: 100vh;
        background-color: white;
      }

      .container .wrapper {
        max-width: 1000px;
        margin: 0 auto;
      }

      .container .wrapper .actions {
        display: flex;
        justify-content: center;
        align-items: center;
      }

      .container .wrapper .actions button {
        margin: 10px;
        padding: 20px 40px;
        width: 80%;
        font-weight: bold;
        border: none;
        border-radius: 2px;
      }

      .container .wrapper .actions button:hover {
        background-color: purple;
        color: white;
      }

      .container .wrapper .video-wrapper {
        display: flex;
        flex-flow: column;
      }

      .container .wrapper .video-wrapper .video-container {
        position: relative;
        width: 100%;
        height: 500px;
        background: red;
      }

      .container .wrapper .video-wrapper .video-container video {
        position: absolute;
        object-fit: cover;
      }

      .container .wrapper .video-wrapper .video-container canvas {
        position: absolute;
        z-index: 1;
      }

      .container .wrapper .video-wrapper .video-container .controls {
        left: 0;
        bottom: 0;
        position: absolute;
        z-index: 1;
        background-color: #ffffff5b;
        width: 100%;
        height: 40px;
        display: flex;
        justify-items: center;
        align-items: center;
      }

      .container .wrapper .video-wrapper .video-container .controls button {
        margin: 0 5px;
      }

      .container .wrapper .video-wrapper .thumbnails-wrapper {
      }

      .container .wrapper .video-wrapper .thumbnails-wrapper .thumbnails {
        display: flex;
        flex-flow: row wrap;
      }

      .container
        .wrapper
        .video-wrapper
        .thumbnails-wrapper
        .thumbnails
        .thumbnail {
        position: relative;
        flex: 0 0 20%;
        height: 200px;
        border: solid;
      }

      .container
        .wrapper
        .video-wrapper
        .thumbnails-wrapper
        .thumbnails
        .thumbnail
        .thumbnail-image {
        width: 100%;
        height: 100%;
      }

      .container .wrapper .no-videos,
      .container .wrapper .loading {
        display: flex;
        justify-content: center;
        align-items: center;
      }
    `}</style>,
  ];
}
```

At this point, you're ready to run your application. Open your terminal and run the following at the root of your project:

```bash
npm run dev
```

Major technology companies such as Apple are showing strong interest in facial recognition technology and adopting it, and some AI startups in this space have become unicorns. Facial recognition is likely to play an increasingly important role in society in the coming years. Despite the privacy concerns it raises, its proponents argue that it makes our streets, homes, banks, and shops safer and more efficient.