Generation loss, a term originally rooted in the world of analog media, refers to the quality degradation that occurs when audio or video is reproduced. In the context of analog tape and audio transfers, each copy of an original source is a new generation, and with each transfer, the quality inevitably declines. This degradation in analog media is due to factors like signal loss, physical abrasion, and electronic noise, characteristics distinct from digital generation loss.

Interestingly, this concept of generational decline, though historically associated with analog formats, has parallels in the digital realm, particularly in digital imaging.

If you make a copy of a copy of a copy, the quality will deteriorate with every ‘generation’. This problem is called ‘generation loss’. It is not difficult to understand why this happens with actual copier machines. Scanning and printing are not perfect, being based on noisy sensors and physical paper and ink, and the resulting noise will tend to accumulate.

Digital images should theoretically not have this problem: a file can be copied over and over again, and it will still be bit-for-bit identical to the original.

However, lossy image formats like JPEG can behave like photocopiers. If you simply copy a JPEG file, nothing changes, but if you open a JPEG file in an image editor and then save it, you will get a different JPEG file. The same happens each time an image is uploaded to, say, Facebook or Twitter: the image is re-encoded automatically (in the case of these two social networks, with a relatively low quality setting, to save on storage and bandwidth). Some information is lost in the process, and compression artifacts will start to accumulate. If you do this often enough, eventually the image will degrade significantly.

In this article we’ll take a deeper look at the generation loss of JPEG and other lossy image formats. We’ll explain what causes it, and how it can be avoided.

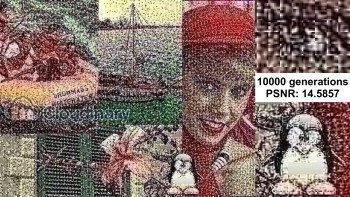

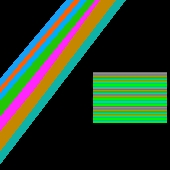

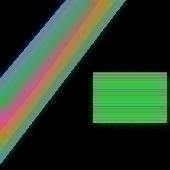

This is what happens if you re-encode a JPEG image many times:

JPEG has a ‘quality’ setting that allows you to make a trade-off between compression and visual quality. Lower quality settings will give you smaller files, at the price of discarding more image information. Higher quality settings result in larger files, but more information is retained so the resulting image is closer to the original.

So if you just save the JPEG at a high enough quality setting, there won’t be a problem, right?

Sadly, no. Obviously, information that is already lost cannot be magically recovered. So if you take a JPEG image that was saved with a quality of, say, 70, then re-saving it with a quality of 90 will, of course, not make the image look any better. But here’s the problem: it will actually make it look worse. Every additional JPEG encoding introduces additional loss, even if it is done at a higher quality setting than the original JPEG.

To understand why JPEG works this way, we have to dig a little deeper into how JPEG actually works. The JPEG format uses several mechanisms to reduce the file size of an image, some of which don’t accumulate while others do.

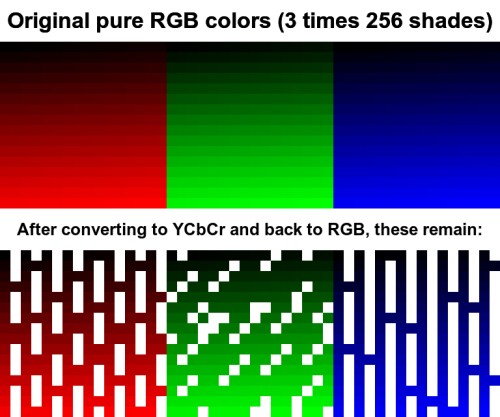

First of all, JPEG uses a color space transformation. Digital images are typically represented as pixels containing three separate 8-bit RGB values (red, green, blue). That is how computer screens display their pixels: each of the three color channels can have 256 different levels of intensity, resulting in 16.7 million possible colors (256*256*256) if you consider all the different combinations of red, green and blue.

These three RGB color channels are statistically correlated in most images: for example, in a grayscale image, the three channels are completely identical. So if image compression is the goal, RGB is not the best representation. Instead, JPEG uses the YCbCr color space. The Y channel is called ‘luma’ (the intensity of the light, i.e. the grayscale image), the two other channels, Cb and Cr, are called ‘chroma’ (the color components).

Besides decorrelating the pixel information, this color transformation has another advantage: the human eye is more sensitive to luma than it is to chroma, so in lossy compression, you can get away with more loss in the chroma channels than in the luma channel.

This color space transformation itself already introduces some loss, due to rounding errors and limited precision. If you transform an image containing all 16.7 million different colors from RGB to YCbCr and back, and then count the number of different colors, you’ll end up with only about 4 million different colors. Most of the loss is in the red and blue channels.
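To see this loss for yourself, here is a minimal Python sketch. It assumes the full-range BT.601 coefficients used by JFIF files and rounds every channel to 8 bits; the helper names are ours. To stay fast it only round-trips a small 16×16×16 cube of colors rather than all 16.7 million, so the surviving fraction it prints will not exactly match the figure quoted above.

```python
from itertools import product


def clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return min(255, max(0, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 RGB -> YCbCr, stored as 8-bit integers."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return clamp8(y), clamp8(cb), clamp8(cr)


def ycbcr_to_rgb(y, cb, cr):
    """Inverse transform, again stored as 8-bit integers."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return clamp8(r), clamp8(g), clamp8(b)


def surviving_colors(levels):
    """Count distinct colors before and after an RGB -> YCbCr -> RGB round trip."""
    colors = set(product(levels, repeat=3))
    round_tripped = {ycbcr_to_rgb(*rgb_to_ycbcr(*c)) for c in colors}
    return len(colors), len(round_tripped)


before, after = surviving_colors(range(100, 116))
print(f"{before} colors in, {after} distinct colors out")
```

Several neighbouring RGB colors land on the same rounded YCbCr triple, so fewer distinct colors come out than went in.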

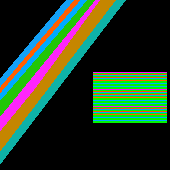

The YCbCr color transform by itself does not result in generation loss. It’s a relatively small, one-time loss in color precision, but it does not accumulate. JPEG does something else too though: it does so-called ‘chroma subsampling’, often referred to with the somewhat cryptic notation 4:2:0 (chroma subsampling is optional, but typically done by default). This means that only the Y channel is encoded at full resolution; for the two chroma channels Cb and Cr, the image resolution is cut in half both horizontally and vertically. In that way, instead of having chroma channels that take up two thirds of the information, they are reduced to one third of the total.
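The two-thirds and one-third figures follow from simply counting samples. A small sketch of that arithmetic (the function name and the 1920×1080 example size are our own choices):

```python
from fractions import Fraction


def chroma_share(width, height, h_factor=2, v_factor=2):
    """Fraction of all stored samples that belong to the Cb and Cr channels.

    h_factor and v_factor are the horizontal and vertical subsampling
    factors: 1 and 1 means no subsampling, 2 and 2 corresponds to 4:2:0.
    """
    luma = width * height
    chroma = 2 * (width // h_factor) * (height // v_factor)
    return Fraction(chroma, luma + chroma)


print(chroma_share(1920, 1080, 1, 1))  # 2/3 without subsampling
print(chroma_share(1920, 1080))        # 1/3 with 4:2:0
```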

Chroma subsampling does contribute to generation loss and can lead to so-called ‘color bleeding’ or ‘color drifting’. Effectively, the chroma channels become increasingly blurry with each iteration of subsampling/upsampling. For example, this is what happens if you take an image and save it with a JPEG quality of 100 with 4:2:0 chroma subsampling:
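The blurring can also be reproduced on a toy example. The sketch below is a one-dimensional model, not a real JPEG codec: it assumes the encoder averages pairs of chroma samples and the decoder upsamples with a 3:1 weighted blend of neighbouring samples (similar in spirit to the smooth upsampling some decoders use). All function names are ours.

```python
def downsample(row):
    """Halve the resolution by averaging each pair of neighbouring samples."""
    return [(row[i] + row[i + 1]) / 2 for i in range(0, len(row), 2)]


def upsample(half):
    """Double the resolution using a 3:1 blend of each sample and its neighbour."""
    out = []
    last = len(half) - 1
    for i, c in enumerate(half):
        left = half[max(i - 1, 0)]       # clamp at the left border
        right = half[min(i + 1, last)]   # clamp at the right border
        out.append(0.75 * c + 0.25 * left)
        out.append(0.75 * c + 0.25 * right)
    return out


def one_generation(row):
    """Subsample, upsample, and store the result as integers again."""
    return [round(v) for v in upsample(downsample(row))]


def transition_width(row):
    """Number of samples that are neither fully 0 nor fully 255."""
    return sum(1 for v in row if 0 < v < 255)


row = [0] * 7 + [255] * 9  # a sharp chroma edge
history = []
for _ in range(5):
    row = one_generation(row)
    history.append(transition_width(row))

print(history)  # width of the blurred edge after each generation
```

In this model the sharp edge turns into a ramp that gets wider with every generation, which is the one-dimensional version of color bleeding.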

This is one of the factors that can lead to generation loss. But we haven’t discussed the real loss introduced by JPEG yet…

The details of how JPEG compression actually works – the Discrete Cosine Transform (DCT) in 8×8 blocks – are a bit tricky to understand (if you’re interested: the Wikipedia article on JPEG is a good starting point). But the thing that is relevant for generation loss can be understood without needing to dig that deep. The core of JPEG compression is quantization, which is a very simple yet effective mechanism.

How does it work? Suppose you want to compress some sequence of numbers – it doesn’t really matter whether these numbers represent pixel values, DCT coefficients or something else. The amount of space you need to encode these numbers depends on how large the numbers are: for smaller numbers, fewer bits are needed.

So how can you make those numbers smaller? The answer is simple: just divide them by some number (called a ‘quantization constant’) in the encoder, rounding the result to an integer, and then multiply them again by that same number in the decoder. The larger this quantization constant, the smaller the encoded values become, but also the more lossy the whole operation becomes, because of that rounding (without it, the numbers wouldn’t really become smaller).

For example, suppose you want to encode the following sequence of numbers:

| | | | | | | |
|---|---|---|---|---|---|---|
| Original: | 50 | 100 | 150 | 200 | 250 | 300 |

If you use a quantization constant of 50, you can greatly reduce these numbers and still get back to the original sequence:

| | | | | | | |
|---|---|---|---|---|---|---|
| Encode: | 1 | 2 | 3 | 4 | 5 | 6 |
| Decode (times 50): | 50 | 100 | 150 | 200 | 250 | 300 |

Most of the time, we will not be that lucky and we will get some loss, but the resulting values are still ‘close enough’. For example, if we use the quantization constant 100, we get even smaller numbers, at the cost of some loss:

| | | | | | | |
|---|---|---|---|---|---|---|
| Encode: | 0 | 1 | 1 | 2 | 2 | 3 |
| Decode (times 100): | 0 | 100 | 100 | 200 | 200 | 300 |

As you can see, the ‘smooth’ sequence of numbers now became a not-so-smooth sequence of numbers. It’s this kind of quantization effect that leads to so-called ‘color banding’.
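The two examples above can be reproduced with a few lines of Python. This is only a sketch of the idea, not of a real JPEG encoder; the function names are ours, and we assume that exact halves are rounded down, because that is what matches the numbers in the tables.

```python
def encode(values, q):
    """Divide each value by the quantization constant q and round.

    Exact halves are rounded down (an assumption chosen to match the
    tables in this article); integer arithmetic avoids float surprises.
    """
    return [(2 * v + q - 1) // (2 * q) for v in values]


def decode(codes, q):
    """Multiply each encoded value by the same quantization constant."""
    return [c * q for c in codes]


original = [50, 100, 150, 200, 250, 300]
for q in (50, 100):
    codes = encode(original, q)
    print(f"q={q}: encode={codes} decode={decode(codes, q)}")
```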

The higher the quantization constant, the lower the image quality and the smaller the JPEG file. Quality 100 corresponds to the quantization constant 1, in other words, no quantization at all. For the sake of this example, suppose a JPEG quality of 70 corresponds to the quantization constant 60, while a JPEG quality of 80 corresponds to the quantization constant 35. Clearly, the quality 80 image will be closer to the original than the quality 70 image:

| | | | | | | |
|---|---|---|---|---|---|---|
| Quality 80 encode: | 1 | 3 | 4 | 6 | 7 | 9 |
| Quality 80 decode (times 35): | 35 | 105 | 140 | 210 | 245 | 315 |
| Original: | 50 | 100 | 150 | 200 | 250 | 300 |
| Quality 80 error: | -15 | 5 | -10 | 10 | -5 | 15 |

| | | | | | | |
|---|---|---|---|---|---|---|
| Quality 70 encode: | 1 | 2 | 2 | 3 | 4 | 5 |
| Quality 70 decode (times 60): | 60 | 120 | 120 | 180 | 240 | 300 |
| Original: | 50 | 100 | 150 | 200 | 250 | 300 |
| Quality 70 error: | 10 | 20 | -30 | -20 | -10 | 0 |

In this case, the quality 80 sequence has a maximum error of 15 (in general the error cannot exceed half of the quantization constant, so 17.5 here, or 17 for integer values), while the quality 70 sequence has a maximum error of 30 (again half of its quantization constant).

But what happens if you take the quality 70 image, and re-encode it at quality 80? Now the input to the encoder is no longer the original sequence, but the decoded quality 70 image:

| | | | | | | |
|---|---|---|---|---|---|---|
| Quality 70 decode: | 60 | 120 | 120 | 180 | 240 | 300 |
| Quality 80 encode: | 2 | 3 | 3 | 5 | 7 | 9 |
| Quality 80 decode (times 35): | 70 | 105 | 105 | 175 | 245 | 315 |
| Original: | 50 | 100 | 150 | 200 | 250 | 300 |
| Quality 80 error: | 10 | -15 | -15 | -5 | 5 | 15 |
| Total error w.r.t. original: | 20 | 5 | -45 | -25 | -5 | 15 |

If we are lucky, like on the second value in the sequence, the error of the first generation encoding (+20) is partially compensated by the error of the second generation encoding (-15) and the result is actually closer to the original again. But that’s just luck. The errors can just as well add up. In this example, the maximum error is now 45, which is larger than the maximum error from either quantization by itself.
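The same two-generation experiment, written out as a small Python sketch. The constants 60 and 35 are the illustrative ones from this example, not real JPEG quantization tables, and the half-down rounding is again our assumption to match the tables.

```python
def quantize(values, q):
    """One lossy generation: divide by q, round (halves down), multiply by q."""
    return [((2 * v + q - 1) // (2 * q)) * q for v in values]


def errors(result, reference):
    """Signed difference between a decoded sequence and a reference sequence."""
    return [r - ref for r, ref in zip(result, reference)]


Q70, Q80 = 60, 35  # illustrative quantization constants from the text

original = [50, 100, 150, 200, 250, 300]
first_gen = quantize(original, Q70)    # saved once at 'quality 70'
second_gen = quantize(first_gen, Q80)  # re-saved at 'quality 80'
total = errors(second_gen, original)

print("first generation: ", first_gen)
print("second generation:", second_gen)
print("total error:      ", total)
print("max single-generation errors:",
      max(abs(e) for e in errors(quantize(original, Q80), original)),
      max(abs(e) for e in errors(first_gen, original)))
print("max error after both generations:", max(abs(e) for e in total))
```

Each step on its own stays within half of its quantization constant, but the two errors can point in the same direction, so the combined error can be as large as their sum.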

This explains why re-saving a JPEG file at a higher quality setting than the original is always a bad idea: you’ll get a larger file, with more loss than if you re-saved it at the exact same quality setting.
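In the toy quantization model this is easy to check. The sketch below (our own helper, same illustrative constants as before) re-quantizes the decoded quality 70 sequence once with the same constant and once with the 'higher quality' constant. Keep in mind that a real JPEG encoder has other sources of rounding as well, so re-saving at the same setting is not perfectly lossless in practice.

```python
def quantize(values, q):
    """One lossy generation: divide by q, round (halves down), multiply by q."""
    return [((2 * v + q - 1) // (2 * q)) * q for v in values]


decoded_q70 = [60, 120, 120, 180, 240, 300]  # already multiples of 60

same_setting = quantize(decoded_q70, 60)    # re-save with the same constant
higher_setting = quantize(decoded_q70, 35)  # re-save at 'higher quality'

changed = sum(1 for a, b in zip(higher_setting, decoded_q70) if a != b)
print("same setting unchanged:", same_setting == decoded_q70)
print("values changed at the higher setting:", changed)
```

Values that are already multiples of the quantization constant survive a second pass with that same constant untouched, while a different constant moves them again.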

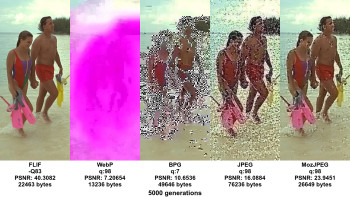

The JPEG file format is about 25 years old now, so maybe that’s why it suffers from this problem of generation loss. Surely more modern image formats like WebP (released in 2010) or BPG (released in 2014) are better in this respect, right?

Sadly, no again. In fact, WebP and BPG suffer even more from generation loss than JPEG. It’s not really a practical issue at this point, since the formats are not commonly used by end-users and most WebP or BPG images you’ll find on the internet will be ‘first generation’. But this could very well become a bigger problem should those formats become more popular.

Compared to WebP and BPG, JPEG is relatively robust in terms of generation loss. One of the reasons for this is that JPEG does everything in 8×8 blocks of pixels (or 16×16 if you take chroma subsampling into account), and most of the error accumulation cannot cross the boundaries of these macroblocks. The more modern formats WebP and BPG use variable-sized, larger macroblocks, which is good for compression, but it also means that an error in one part of the image can more easily propagate to other parts of the image.

This does not at all mean that WebP and BPG are bad image formats. They are actually great image formats. It just means that you have to be somewhat careful in how you use them.

FLIF is a lossless image format that outperforms other lossless image formats. FLIF also has a lossy encoder which modifies the image so that the lossless compression works better on it. It is much less sensitive to generation loss because the format itself is lossless – the color space is not YCbCr but YCoCg, which does not introduce loss, and there is no chroma subsampling, nor transformation to DCT which introduces rounding errors – and also because of the way it adds loss. Instead of using quantization, it rounds small values to zero and discards a number of bits. This works because the values it encodes are differences (between predicted pixel values and actual pixel values), not absolute values (of DCT coefficients). For example, to go back to the example that illustrated how quantization loss can accumulate:

| | | | | | | | |
|---|---|---|---|---|---|---|---|
| Original: | 50 | 100 | 150 | 200 | 250 | 300 | |
| FLIF high quality: | 48 | 96 | 144 | 200 | 248 | 296 | 3 bits discarded, values < 20 become 0 |
| FLIF low quality: | 0 | 96 | 160 | 192 | 256 | 288 | 5 bits discarded, values < 60 become 0 |

FLIF high quality after low quality: nothing changes.

FLIF low quality after high quality: same as low quality directly.
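Why the order does not matter can be sketched with a toy model of 'discarding bits'. This is not FLIF's actual encoder: we assume plain truncation of the low bits followed by zeroing anything under the threshold, and all names are ours. Because it truncates rather than rounds, two of its low-quality values (128 and 224) differ from the 160 and 256 listed in the table, but the property of interest is the same.

```python
def discard_bits(values, bits, threshold):
    """Clear the lowest `bits` bits, then zero out anything below `threshold`."""
    out = []
    for v in values:
        truncated = (v >> bits) << bits
        out.append(truncated if truncated >= threshold else 0)
    return out


def high_quality(values):
    return discard_bits(values, bits=3, threshold=20)


def low_quality(values):
    return discard_bits(values, bits=5, threshold=60)


original = [50, 100, 150, 200, 250, 300]
high = high_quality(original)
low = low_quality(original)

print("high quality:  ", high)
print("low quality:   ", low)
print("high after low:", high_quality(low))  # nothing changes
print("low after high:", low_quality(high))  # same as low quality directly
```

Once the low bits are gone, discarding them again has no effect, so additional generations do not pile up additional error in this model.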

However, even lossy FLIF is not immune to generation loss if you significantly modify the image between generations, for example by doing a rotation or resizing.

There are only two ways to avoid generation loss: either don’t use a lossy format, or keep the number of generations as close as possible to 1. The generation count has a larger impact on the image quality than the actual quality settings you use. For example, if you save an image first with a JPEG quality of 85 and then re-save it with a quality of 90, the result will actually be more lossy than if you saved it only once with a quality of 80.

Avoiding lossy formats is a good idea if possible. When editing images, it is best to store the original and intermediate images using lossless image formats like PNG, TIFF, FLIF, or native image editor formats like PSD or XCF. Only when you’re done should the final image be saved using a lossy format like JPEG to reduce the file size. If you later change your mind and want to do some further editing, you can go back to the lossless originals and start from there.

In some cases this is not an option though. If you find an image on the internet that you want to reuse and edit (say to create a very funny meme), chances are the image is a JPEG file, and the original cannot be found. In this case, one thing you can do is track down the image using Google Image Search, and try to find the earliest generation, i.e. the oldest and highest resolution version of the image.

In particular, if you encounter images on social media websites like Facebook or Twitter, take into account that these websites automatically transcode uploaded images, so generation loss can easily accumulate if an image goes viral and it jumps frequently between those different websites. For this reason, using “Share” or “Retweet” is better than downloading the image and then re-uploading it.

Finally, if you really need to edit a JPEG file, there are some ways to avoid or minimize generation loss, depending on what kind of edits you want to make:

- If all you need to do is crop the image or rotate it by a multiple of 90 degrees: this can actually be done without fully re-encoding the JPEG file, so without any generation loss.

- If you do actual editing of some (but not all) of the pixels, you should of course work with lossless files in your image editor, but to save the final image, it’s probably best to use the exact same quality settings as the original JPEG file (as we explained above, this introduces less generation loss than if you use a higher quality setting).

- If your editing is changing all or most of the pixels – e.g. you’re scaling down, rotating by an arbitrary angle, applying some kind of global filter or effect – then the original quality setting doesn’t really matter anymore, so using a high(er) quality setting certainly makes sense.

If you’re using Cloudinary, I would recommend always uploading the highest resolution, highest quality original image you have available (lossless if possible), especially if you’re using automatic format selection (f_auto). Cloudinary always keeps your original image as is (adding zero generation loss), and each derived image is encoded directly from the original (adding one generation, which is inevitable). That way you ensure that your image assets are future-proof: when in the future, higher image qualities and/or resolutions are required or desired, or new image formats become available, it will be an effortless change.