Recently, the AVIF team at Google provided a comprehensive comparison of the performance of AVIF, JPEG XL, WebP, and JPEG. Comparing lossy image codecs is not easy, and there are many aspects to take into account. One key point in the results they present is that at the ‘useful’ presets (encoding speeds JPEG XL s6 and AVIF s7, which are not too slow but still bring significant gains over previous-generation codecs), 9 out of the 13 quality metrics measured favor JPEG XL.

I very much welcome the AVIF team’s efforts to bring more data to the discussion. In this blog post, I want to contribute by providing some additional data, along with suggestions on how to interpret the data that has already been made available. For example, on the decoding side, although JPEG XL is currently slightly slower than AVIF, it’s important to look at the quality of experience in addition to the decoding speed. Since JPEG XL images start to decode while downloading and, better yet, render progressively, the user gets to see a JPEG XL image much sooner than the AVIF one.

Let’s dive in.

The comparison starts with an in-browser measurement of decode speed. This is indeed an important aspect of image delivery on the web. The test was done on Chrome version 92, and the JPEG XL implementation has improved since then. Using the most recent Chrome version, we obtain the following results:

The above speeds were measured with the most recent developer version of Chrome on a 2019 MacBook Pro (2.6 GHz 6-Core Intel Core i7, 12 MB L3 cache, 32 GB memory) using this test page. JPEG XL images can optionally be encoded with the `--faster-decoding=3` option (indicated with fd3 in the chart), which produces files that decode (somewhat) faster. Also, JPEG XL files created by losslessly recompressing JPEG images decode faster, since they don’t use all the advanced features of JPEG XL. AVIF images can be encoded in 8-bit (SDR) or higher bit depths (for HDR). Overall it looks like the decode speed of JPEG XL and AVIF is roughly the same, with AVIF being slightly faster for 8-bit images.

For the user experience, what matters is not the decode speed as such, but the entire rendering flow, which includes downloading, decoding, and rendering. One important thing to note is that image decoding in a browser can happen in a streaming (incremental) way: while the image data is arriving, the decoder can already start to decode the image. This is how progressive decoding works. However, the decode time in this test was measured differently from how images are normally loaded in a browser: first the entire image file was fetched, then it was fully decoded. This is representative of the time it would take to load a locally cached image, but it is not representative of the user experience when loading an image over the network. In practice, with a streaming decoder, much of the decode time overlaps with the transfer time, making the total transfer + decode time shorter than the sum of both times seen in isolation.
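To make the overlap concrete, here is a minimal sketch of an idealized model. It assumes constant bandwidth and decode work that is spread evenly over the file; the function names and the example numbers are hypothetical and not taken from the comparison.

```python
def sequential_load_time(transfer_s, decode_s):
    """Decoder waits for the complete file, so the two times simply add up."""
    return transfer_s + decode_s


def streaming_load_time(transfer_s, decode_s, chunks=100):
    """Decoder handles each chunk once it has arrived and the previous chunk is done."""
    arrival_step = transfer_s / chunks   # time between chunk arrivals
    work_per_chunk = decode_s / chunks   # decode effort per chunk
    decoded_at = 0.0
    for i in range(1, chunks + 1):
        arrival = i * arrival_step
        # Decoding of chunk i starts when it has arrived AND the decoder is free.
        decoded_at = max(decoded_at, arrival) + work_per_chunk
    return decoded_at


# Hypothetical example: 2 s transfer on a slow network, 0.5 s of decode work.
print(sequential_load_time(2.0, 0.5))
print(streaming_load_time(2.0, 0.5))
```

With these made-up numbers the streaming decoder finishes almost immediately after the last byte arrives, because nearly all of the decode work was already done during the transfer.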

The current AVIF decoders however expect a full compressed image frame to be available before they can start decoding. This is because for typical video playback on the web, streaming decoding of a single frame is not very useful — browsers will typically buffer many frames-worth of compressed data before starting playback anyway. This means that for AVIF, at least currently, the time to load an image is equal to the transfer time plus the decode time. Schematically:

This situation could change in the future, if and when a streaming AVIF decoder gets implemented in browsers. It is not a straightforward change though, as it would require substantial changes in the underlying AV1 decoding library.

Moreover, in terms of the perceived loading speed, what is maybe more relevant than the time to get the full image, is the time it takes to get the first preview of the image on the screen. Progressive JPEG as well as JPEG XL have a significant advantage over other formats in this respect, since they can show a blurry preview version of the image when only part of the image data is available. This is indicated in the diagram above with a purple line, while the render moment of the final (full detail) version of the image is indicated with a red line.

Using Chrome’s devtools, you can simulate how a page would load under various network conditions. On this simple example page, there are two pictures of my cat, one as an AVIF image on the left, the other as a JPEG XL image on the right. Quality settings were adjusted so the files have roughly the same size (the AVIF file is even slightly smaller), yet when loaded over a simulated mobile 3G network, the JPEG XL appears first:

In other words, decode time by itself is not the only thing that matters: what matters too is how well the decoder works in combination with streaming — decoding the image while it is being transferred — and what features the codec has in terms of progressive rendering.

Both decoding speed and encoding speed are mostly a property of a specific implementation, and they are only to some extent inherent to the image format itself. In the case of AVIF, a lot of effort has already gone into optimizing the dav1d decoder implementation, while arguably there is somewhat more room for improvement left in the libjxl decoder implementation. For example, while dav1d and libavif have specialized faster code paths to decode 8-bit files (as opposed to 10-bit or 12-bit), libjxl currently mostly uses the same high-precision (up to 32-bit) code path for all input files.

The chart presented on the main page may give the impression that AVIF encoding is faster than anything else, which, given AVIF’s reputation as having slow encoding times, might be surprising. I believe these surprising results can be explained partially, but not fully, by recent improvements in libaom’s encoding speed. Let’s take a closer look.

The chart shows speeds for encoding with 8 threads. One difference between the libaom AVIF encoder and the libjxl JPEG XL encoder is that libaom produces different (worse) results when using more threads, while libjxl produces the same output file regardless of the number of threads used. According to the numbers on the more detailed pages, at AVIF speed 7, the single-threaded compression performance is about 3% better than when using 8 threads (e.g. according to the DSSIM BD rate, single-threaded AVIF s7 is 4.13% worse than JPEG XL s6, while 8-threaded AVIF s7 is 7.67% worse), while 8-threaded encoding is about 3 times as fast. This may be an acceptable trade-off, but it has to be taken into account that the number of threads does have an impact on compression performance: when faster AVIF encoding is desired, it might be more effective to use a faster speed setting than to use more threads. The libjxl encoder, by contrast, does not present any such trade-off: increasing the number of threads makes it go faster without any cost in compression performance.

In many use cases, encode cost is more important than encode speed. Since encoders generally don’t parallelize perfectly (neither libaom nor libjxl do), overall throughput is typically most efficient when encoding multiple images in parallel, using a single thread for each of them.
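As a rough back-of-the-envelope sketch of that throughput argument: the 3x speedup at 8 threads is the AVIF s7 figure quoted above, while the 8-core machine, the function name, and the assumption that parallel jobs do not slow each other down are hypothetical simplifications.

```python
def images_per_unit_time(cores, threads_per_image, speedup):
    """Throughput, where 1.0 means one single-threaded encode per unit of time."""
    concurrent_jobs = cores // threads_per_image
    return concurrent_jobs * speedup


CORES = 8  # hypothetical machine

# Eight independent single-threaded encodes running side by side.
print(images_per_unit_time(CORES, threads_per_image=1, speedup=1.0))

# One image at a time, using all 8 threads for a roughly 3x speedup.
print(images_per_unit_time(CORES, threads_per_image=8, speedup=3.0))
```

Under these assumptions, one thread per image gets through 8 images in the time the 8-threaded configuration needs for 3, which is why encode cost and encode latency can point to different settings.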

AVIF encoding at speed 9 with 8 threads is fast, but the question is how useful it is to encode images like that. It does not look like this encode setting produces results that are better than mozjpeg, at least not in the range of qualities that is relevant for the web (more about that later). It may be a useful speed setting for live video streaming, but for still images on the web, it does not seem to be a very good choice. The fastest libjxl setting would also likely not be the best choice for web images, but at least it does still provide a consistent 15% compression advantage compared to mozjpeg — comparable to the compression gain of default speed AVIF over mozjpeg.

Looking at encode speed in isolation, without considering compression performance, is not very meaningful. On the detailed pages per test set, the metric results are compared at similar speed settings, which is indeed a good way to compare things. What is not so clear from the way the results are presented on the main page, is that the range of encode speeds and compression results is significantly larger for AVIF than for JPEG XL. JPEG XL at default speed is already almost as good as at its slowest setting, while slower AVIF settings do bring substantial additional compression gains (but also substantial additional encode time).

Encoding speed with libaom — and with many lossy image encoders — depends on the quality setting: typically things get slower as quality increases. The libjxl encoder is a notable exception to this. For example, to encode a large image (using a single thread), I get the following encode times (in seconds) at different quality settings:

| Quality | mozjpeg setting | Time (s) | cjxl e6 setting | Time (s) | avif s6 setting | Time (s) | cwebp m4 setting | Time (s) |
|---|---|---|---|---|---|---|---|---|
| lowest | q1 | 0.4 | d15 | 1.9 | cq63 | 1.2 | q1 | 0.7 |
| normal | q75 | 0.9 | d2 | 1.9 | cq26 | 4.2 | q75 | 0.9 |
| highest | q100 | 6.5 | d0.1 | 2.3 | cq1 | 5.5 | q100 | 1.3 |

Notice how at the very lowest quality, the AVIF encoder is about 3.5 times faster than at more reasonable qualities (1.2 seconds versus 4.2 seconds). To illustrate what this quality setting means: this is an AVIF image encoded at the lowest quality setting:

In practice, of course, such extremely low-quality settings are not used. Such extreme artifacts are just not acceptable, not even if you really, really, want to sacrifice fidelity to get maximum compression.

When comparing encoding speed at typical quality settings, AVIF encoding is slower than most other codecs. In the comparison that was done however, the speeds were averaged over a large range of quality settings: this includes some settings that are higher quality than what is relevant for the web, but it mostly includes a lot of settings that are lower quality than what is relevant for most use cases. This has the effect of making most encoders — all except the JPEG XL one — look faster than they are in practice, in particular the AVIF encoder.
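The effect can be read directly off the encode times in the table above. The following small sketch expresses each time relative to the "normal" quality setting of the same encoder; the timings are the ones from the table, and only the helper name is made up.

```python
# Single-threaded encode times in seconds, copied from the table above.
encode_seconds = {
    "mozjpeg":  {"lowest": 0.4, "normal": 0.9, "highest": 6.5},
    "cjxl e6":  {"lowest": 1.9, "normal": 1.9, "highest": 2.3},
    "avif s6":  {"lowest": 1.2, "normal": 4.2, "highest": 5.5},
    "cwebp m4": {"lowest": 0.7, "normal": 0.9, "highest": 1.3},
}


def relative_to_normal(times):
    """Express every encode time as a multiple of the 'normal' quality time."""
    return {quality: t / times["normal"] for quality, t in times.items()}


for encoder, times in encode_seconds.items():
    ratios = relative_to_normal(times)
    print(encoder, {quality: round(r, 2) for quality, r in ratios.items()})
```

An average that includes many low-quality settings pulls the reported time below the "normal" value for every encoder whose lowest-quality ratio is well under 1, and leaves cjxl essentially unchanged.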

Assessing quality of lossy image compression is not easy. Objective metrics are useful, but it has to be kept in mind that no metric correlates perfectly with human perception. Subjective experiments take time and require human test subjects though, so it is not feasible to perform such experiments to evaluate every new encoder version and all possible encoder settings. When encoders are still rapidly evolving (as is the case for both AVIF and JPEG XL), objective metrics are particularly useful. Some metrics are more useful than others though to estimate perceptual quality, and we can take that into account when interpreting the results.

Recently we performed a large-scale subjective quality assessment experiment where we collected 1.4 million opinions on compressed images, including JPEG, [WebP](https://cloudinary.com/tools/compress-webp), AVIF, and JPEG XL images. The mean opinion scores we obtained can be compared to the various metrics, and correlation coefficients can be computed. These are the numbers we obtained, sorted from lowest to highest correlation (in absolute value; the coefficients are negative for metrics where a lower score means better quality):

| Metric | Kendall correlation | Spearman correlation | Pearson correlation |
|---|---|---|---|
| CIEDE2000 | 0.3184 | 0.4625 | 0.4159 |
| PSNR | 0.3472 | 0.5002 | 0.4817 |
| SSIM | 0.4197 | 0.5941 | 0.5300 |
| SSIMULACRA | -0.5255 | -0.7175 | -0.6940 |
| MS-SSIM | 0.5557 | 0.7514 | 0.7005 |
| Butteraugli | -0.5843 | -0.7738 | -0.7074 |
| PSNR-HVS | 0.6059 | 0.8087 | 0.7541 |
| VMAF | 0.6176 | 0.8164 | 0.7799 |
| DSSIM | -0.6428 | -0.8399 | -0.7813 |
| Butteraugli 3-norm | -0.6547 | -0.8387 | -0.7903 |
| SSIMULACRA 2 | 0.6934 | 0.8820 | 0.8601 |
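For readers unfamiliar with these three coefficients, here is a minimal pure-Python sketch of how they can be computed from pairs of mean opinion scores and metric scores. It assumes there are no tied values, and the sample data is entirely made up; it is not the code or data used in the experiment.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Linear correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def ranks(values):
    """Rank positions 1..n (assumes no ties)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(x, y):
    """Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def kendall(x, y):
    """Kendall tau-a: (concordant - discordant) pairs / all pairs (assumes no ties)."""
    pairs = list(combinations(range(len(x)), 2))
    balance = 0
    for i, j in pairs:
        product = (x[i] - x[j]) * (y[i] - y[j])
        balance += (product > 0) - (product < 0)
    return balance / len(pairs)


# Hypothetical data: opinion scores and a distance-style metric (lower is better).
mos = [20, 35, 50, 65, 80, 90]
distance_metric = [9.0, 6.5, 7.0, 3.0, 2.0, 1.0]
print(kendall(mos, distance_metric), spearman(mos, distance_metric), pearson(mos, distance_metric))
```

A distance-style metric like the hypothetical one here yields negative coefficients, which is why several rows in the table have a minus sign even though they correlate well with opinion scores.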

On the main comparison page, results are given for MS-SSIM, but as the table above indicates, this is not a metric that correlates particularly well with mean opinion scores. Additionally, the AVIF encoding is done with the tune=ssim option, which causes the encoder to optimize for SSIM. Such optimization does lead to better scores in SSIM-derived metrics (like MS-SSIM), but to some extent the effect is that these metrics get ‘fooled’ and assign higher scores than humans would.

In other words, MS-SSIM likely overestimates the quality of AVIF images compared to other codecs. Similarly, Butteraugli likely overestimates the quality of JPEG XL, PSNR-HVS likely overestimates the quality of mozjpeg, and PSNR likely overestimates the quality of WebP, since those metrics are what the corresponding encoders are tuned for. Out of the 13 metrics that were computed, DSSIM and SSIMULACRA 2 are likely to provide the best insight: they correlate well with mean opinion scores and none of the encoders is specifically optimizing for them.

The comparison results are summarized using the Bjøntegaard Delta (BD) rate, which is a way to summarize the relative compression performance of two encoders. However, there is a similar problem as with the encoding speed numbers: the very low end of the quality spectrum is overrepresented, causing the aggregated result to mostly reflect what happens at the range of qualities that is not used in practice.
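To illustrate the idea behind a BD rate, here is a simplified sketch. The usual computation fits a smooth curve through the log-bitrate versus quality points of each codec; this sketch swaps that for plain piecewise-linear interpolation to stay short, so it is not the exact procedure used in the comparison. The function names and the sample points are hypothetical.

```python
import math


def interpolate(x, xs, ys):
    """Piecewise-linear interpolation; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x is outside the range of the curve")


def bd_rate(anchor, test, steps=1000):
    """Average bitrate difference (percent) of `test` versus `anchor` at equal score.

    Each curve is a list of (bpp, score) points sorted by increasing score.
    Negative results mean `test` needs fewer bits for the same score.
    """
    a_scores = [score for _, score in anchor]
    a_log_rate = [math.log(bpp) for bpp, _ in anchor]
    t_scores = [score for _, score in test]
    t_log_rate = [math.log(bpp) for bpp, _ in test]
    # Only the score range reached by both codecs is considered.
    low = max(a_scores[0], t_scores[0])
    high = min(a_scores[-1], t_scores[-1])
    total = 0.0
    for k in range(steps):
        score = low + (high - low) * (k + 0.5) / steps  # midpoint sampling
        total += interpolate(score, t_scores, t_log_rate)
        total -= interpolate(score, a_scores, a_log_rate)
    return (math.exp(total / steps) - 1.0) * 100.0


# Hypothetical (bpp, metric score) points, made up for illustration only.
anchor_curve = [(0.1, 20.0), (0.3, 45.0), (1.0, 70.0), (3.0, 90.0)]
test_curve = [(0.8 * bpp, score) for bpp, score in anchor_curve]
print(round(bd_rate(anchor_curve, test_curve), 2))
```

In this made-up example the test codec uses 20% fewer bits at every score, so the result should come out at about -20. Note that the average is taken uniformly over the score axis, so whichever bitrate range spans the widest stretch of scores contributes the most to the result.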

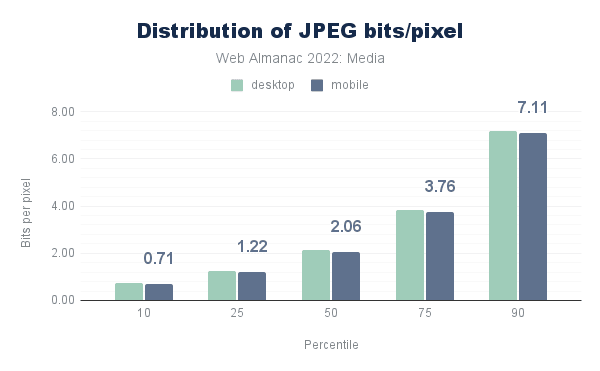

AVIF images on the web are currently typically compressed to around 1 bit per pixel (bpp), according to the Web Almanac data, and JPEG images typically to around 2 bpp:

The range that was used in the BD rate computation was 0.1 bpp to 3 bpp. The range of metric scores reached by both codecs in this range is what is considered for the BD rate computation. For most metrics, the curves tend to be very steep at the very low bitrates, to then flatten out at the higher bitrates. This means that the low bitrates have the largest effect on the BD rate. That can lead to incorrect conclusions. For example, if you look at this plot:

At JPEG bitrates above 1.22 bpp (which covers about 75% of the JPEG images on the web), the advantage of AVIF (or at least this AVIF encode setting) over libjpeg-turbo seems to be less than 25%. The gain gets smaller at higher bitrates. However, according to the BD rate, AVIF is about 50% smaller — except this is only true at qualities too low to be useful in the first place.

A similar problem occurs when using BD rates over such a range of bitrates when comparing JPEG XL to AVIF. Take for example this plot from the subset1 comparison page:

In ‘The case for JPEG XL’, I estimated that in the quality range relevant for the web, JPEG XL can obtain 10 to 15% better compression than AVIF. Knowing that the median AVIF image on the web is around 1 bpp, the above plot does seem to confirm this — the gain shown here at a SSIMULACRA 2 score of 70 is even 17%. But by starting the range at a very low quality, where AVIF does perform better than JPEG XL (8% better at a SSIMULACRA 2 score of 35), the overall BD rate improvement for JPEG XL is only an underwhelming 3%.

For images on the web, the typical quality to aim for would be a SSIMULACRA 2 score between 60 and 85, depending on the fidelity versus bandwidth trade-off desired for the specific website. Expressed in libjpeg-turbo quality settings, this is roughly the range between q40 and q90. In this range, JPEG XL performs very well. But in the computation of BD rates, the performance in this range gets only half the weight of the performance in the range of SSIMULACRA 2 scores between 10 and 60.

In short, AVIF performs well at qualities that are too low to be useful in practice. Including these very-low-quality ranges skews the aggregate results in AVIF’s favor. A more useful comparison would focus on useful quality ranges.

Looking at the overall impact on bandwidth and storage consumption, getting improvements in the high-fidelity range will have a larger impact than the same percent-wise improvement in the low-fidelity range. The reason is simple: high-fidelity images have larger file sizes to begin with. Assuming that website creators don’t want to change the fidelity of their images, the impact of improving compression by 20% at the low-fidelity end, where they might now be using 0.7 bpp JPEGs, is significantly smaller than the impact of improving compression by 20% at the high-fidelity end, where they might now be using 5 bpp JPEGs. Simply put: shaving off 8 kB from a low-quality 40 kB image is the same percent-wise improvement as shaving off 60 kB from a high-quality 300 kB image, but not the same saving in absolute terms.
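The same arithmetic, expressed per image: a small sketch that converts a bits-per-pixel figure and a relative compression gain into bytes saved. The 1-megapixel image size is a hypothetical choice for illustration; the 0.7 bpp, 5 bpp and 20% figures are the ones from the paragraph above.

```python
def bytes_saved(bpp, pixels, gain):
    """Bytes saved on an image of `pixels` pixels at `bpp` bits per pixel."""
    original_bytes = bpp * pixels / 8
    return original_bytes * gain


PIXELS = 1_000_000  # hypothetical 1-megapixel image

print(round(bytes_saved(0.7, PIXELS, 0.20)))  # low-fidelity JPEG
print(round(bytes_saved(5.0, PIXELS, 0.20)))  # high-fidelity JPEG
```

Under these assumptions, the same 20% gain saves roughly seven times as many bytes on the high-fidelity image as on the low-fidelity one.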

Three test sets were used in the comparison: subset1, noto-emoji and Kodak. The first of these is a good set of images for testing: it is the same one as on this visual comparison page. These images are web-sized downscaled versions of images taken from Wikipedia pages. The Kodak image set is probably not as good — this is a set of scanned analog photos from 1991, which may not be that relevant for testing image compression, now that digital cameras have become the norm. The noto-emoji set seems like an odd choice as a test set for image compression: the images are rasterized vector graphics, and the best practice would be to preserve them as vector graphics rather than rasterizing them and applying lossy compression. AVIF does perform very well on this set, but it seems questionable to replace emoji fonts with lossy raster images in the first place.

In the comparison for lossless compression, only the slowest encode settings are shown. These settings are likely not so useful in practice — 20 seconds of encode time per megapixel is not exactly a fun user experience. It would be interesting to compare performance at various speed settings and to also include results for fjxl, the fast lossless JPEG XL encoder that can encode 100 megapixels in the blink of an eye, as well as various PNG encoders and optimizers. It would also be interesting to see what happens at higher bit depths (e.g. 10-bit, 12-bit, 14-bit, and 16-bit).

Lossless compression is generally more useful for image authoring workflows than for web delivery. There are however cases where lossless compression can be more effective than lossy compression: pixel art, screenshots, plots, diagrams, and illustrations or drawings with few colors can often be encoded to smaller files using lossless compression than using lossy compression. It would be interesting to compare lossless compression specifically on a set of images where it would make sense to use lossless compression for web delivery. The Kodak test set is not a good example of this. A better example, where lossless compression does make sense on the web, would be the compression performance plots available on the detailed comparison pages. In lossless compression, there’s always a trade-off between encode time and compression. A specific encoder setting is Pareto optimal if there is no alternative that compresses both faster and better. For this image, encoding it with various lossless encoder settings results in the following file sizes and encode times:

| Encoder | File size | Saving | Encode time (seconds) | MP/s |
|---|---|---|---|---|
| **jxl e9** | **11740** | **61.03%** | **10.166** | **0.19** |
| **jxl e8** | **13888** | **53.90%** | **1.307** | **1.47** |
| **jxl e7** | **14324** | **52.45%** | **0.775** | **2.48** |
| webp z9 | 15056 | 50.02% | 1.508 | 1.27 |
| webp z8 | 15788 | 47.60% | 0.237 | 8.10 |
| **webp z7** | **15788** | **47.60%** | **0.039** | **49.23** |
| **webp z6** | **15934** | **47.11%** | **0.038** | **50.53** |
| **webp z3** | **16466** | **45.34%** | **0.032** | **60.00** |
| **webp z2** | **16510** | **45.20%** | **0.027** | **71.11** |
| **webp z1** | **20244** | **32.80%** | **0.014** | **137.14** |
| optipng | 25577 | 15.10% | 0.409 | 4.69 |
| jxl e4 | 30050 | 0.26% | 0.3965 | 4.84 |
| original png | 30127 | 0.00% | | |
| avif s2 | 40454 | -34.28% | 23.348 | 0.08 |
| avif s0 | 40972 | -36.00% | 37.096 | 0.05 |
| avif s3 | 41144 | -36.57% | 17.809 | 0.11 |
| avif s4 | 41799 | -38.74% | 11.747 | 0.16 |
| **fjxl e127** | **44743** | **-48.51%** | **0.004112** | **466.93** |
| **fjxl e3** | **45537** | **-51.15%** | **0.002544** | **754.72** |
| avif s5 | 46523 | -54.42% | 7.656 | 0.25 |
| fjxl e2 | 47044 | -56.15% | 0.002554 | 751.76 |
| webp z0 | 47238 | -56.80% | 0.013 | 147.69 |
| avif s6 | 49248 | -63.47% | 3.346 | 0.57 |
| avif s7 | 54232 | -80.01% | 2.612 | 0.74 |
| **fjxl e1** | **82171** | **-172.75%** | **0.002054** | **934.76** |
| avif s8 | 89557 | -197.26% | 0.27 | 7.11 |
| fjxl e0 | 119177 | -295.58% | 0.002078 | 923.97 |
| avif s9 | 401483 | -1232.64% | 0.164 | 11.71 |

Pareto optimal settings are indicated in bold in this table. For this particular image, the Pareto front consists of JPEG XL at both the best-compression and fastest-compression ends, and lossless WebP still offering good intermediate trade-offs. AVIF is clearly far away from the Pareto front for this image.
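The Pareto front can be recomputed from the file sizes and encode times in the table. The sketch below is one way to do that; it treats a setting as dominated when another setting is at least as good on both axes and strictly better on one, which is a slightly stricter reading of the definition above (it excludes webp z8, which matches webp z7 in size but is slower). The function name is made up.

```python
# (encoder setting, file size, encode time in seconds), copied from the table.
results = [
    ("jxl e9", 11740, 10.166), ("jxl e8", 13888, 1.307), ("jxl e7", 14324, 0.775),
    ("webp z9", 15056, 1.508), ("webp z8", 15788, 0.237), ("webp z7", 15788, 0.039),
    ("webp z6", 15934, 0.038), ("webp z3", 16466, 0.032), ("webp z2", 16510, 0.027),
    ("webp z1", 20244, 0.014), ("optipng", 25577, 0.409), ("jxl e4", 30050, 0.3965),
    ("avif s2", 40454, 23.348), ("avif s0", 40972, 37.096), ("avif s3", 41144, 17.809),
    ("avif s4", 41799, 11.747), ("fjxl e127", 44743, 0.004112),
    ("fjxl e3", 45537, 0.002544), ("avif s5", 46523, 7.656),
    ("fjxl e2", 47044, 0.002554), ("webp z0", 47238, 0.013),
    ("avif s6", 49248, 3.346), ("avif s7", 54232, 2.612),
    ("fjxl e1", 82171, 0.002054), ("avif s8", 89557, 0.27),
    ("fjxl e0", 119177, 0.002078), ("avif s9", 401483, 0.164),
]


def pareto_front(entries):
    """Names of the settings that no other setting beats on both size and time."""
    front = []
    for name, size, seconds in entries:
        dominated = any(
            other_size <= size and other_seconds <= seconds
            and (other_size < size or other_seconds < seconds)
            for _, other_size, other_seconds in entries
        )
        if not dominated:
            front.append(name)
    return front


print(pareto_front(results))
```

For this table the front contains only JPEG XL (libjxl and fjxl) and lossless WebP settings; none of the AVIF settings appear in it.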

New image formats do not just bring improved compression performance, they also bring new functionality. What is missing in the current comparison is a discussion in terms of features supported by the various formats. This could be done in the form of a simple table. For some of the features, it could also be interesting to investigate compression performance effects: e.g., alpha channels and HDR images have different characteristics than regular SDR images, optional progressive decoding can have a positive or negative effect on compression, etc.

Such a table could look like this:

| Feature | JPEG | WebP | AVIF | JPEG XL |
|---|---|---|---|---|
| Alpha transparency | ❌ | ✅ | ✅ | ✅ |
| HDR | ❌ | ❌ | ✅ | ✅ |
| Depth maps | ❌ | ❌ | ✅ | ✅ |
| Lossy 4:4:4 (no chroma subsampling) | ✅ | ❌ | ✅ | ✅ |
| Effective lossless compression | ❌ | ✅ | ❌ | ✅ |
| Progressive decoding | ✅ | ❌ | ❌? | ✅ |
| Saliency progressive decoding | ❌ | ❌ | ❌ | ✅ |
| Lossless JPEG recompression | N/A | ❌ | ❌ | ✅ |
| Layers | ❌ | ❌ | ✅? | ✅ |
| Animation | ❌ | ✅ | ✅ | ✅ |
| Useful in authoring workflows | ❌ | ❌ | ❌ | ✅ |

Compression performance can always be improved in the future: there will likely always be room for better encoders and faster decoders for any codec. Features, on the other hand, are inherent to the format specification, which is basically immutable since changing it boils down to introducing a new format. This means that when making long-term decisions about adding a new format to the web platform, its feature set should definitely be taken into account.

The AVIF team provided a good starting point for doing a data-driven comparison of image formats that can help to clarify the desirability of adding new formats to the web platform. The compression results obtained by the AVIF team do confirm the results we obtained through subjective experiments and our own evaluations based on objective metrics. According to the subset1 results, the encoding speed of JPEG XL s6 is similar to that of AVIF s7 and at these settings, 9 out of the 13 metrics favor JPEG XL. Roughly speaking, at ‘web quality’, according to the best available metric, AVIF gives a compression gain of about 15% over mozjpeg (or more at the very low end of the quality spectrum), and JPEG XL gives a compression gain of about 15% over AVIF (except at the very low end of the quality spectrum). These are the results obtained by the AVIF team, and they do show the value of JPEG XL.

In this contribution, I hope to have made some constructive suggestions on how to further improve the comparison methodology, and on how to interpret the results in the most meaningful way. Through constructive dialogue, we can together make progress on our shared goals: making the web faster, improving the user experience, and promoting royalty-free codecs!