When you open a YouTube video on a mobile device, playback starts muted, and a button in the top-left corner lets you unmute the video.

This article will walk you through implementing that feature using video events in Next.js.

The completed project is on CodeSandbox. Fork it and run the code.

https://github.com/folucode/click-to-unmute-nextjs-demo

To follow along with this article, we need:

- An understanding of JavaScript and Next.js.

- A Cloudinary account. You can create a free one if you don’t already have one.

Node and its package manager npm are required to initialize a new project.

Using npm would require that we download the package to our computer before using it, but npm comes with an executor called npx.

npx stands for Node Package Execute. It allows us to execute any package we want from the npm registry without installing it.

To install Node, we go to the Node.js website and follow the instructions. We then verify the installation using the terminal command below:

node -v

v16.10.0 //node version installed

The result shows the version of Node.js we installed on our computer.

We’ll create our Next.js app using create-next-app, which automatically sets up a boilerplate Next.js app. To create a new project, run:

npx create-next-app@latest <app-name>

# or

yarn create next-app <app-name>

After the installation is complete, change directory into the app we just created:

cd <app-name>

Now we run npm run dev or yarn dev to start the development server on http://localhost:3000.

Cloudinary provides a rich media management experience enabling users to upload, store, manage, manipulate, and deliver images and videos for websites and applications.

Because serving media from an optimized Content Delivery Network improves performance, we’ll use a video stored on Cloudinary. We’ll use the cloudinary-react package to render Cloudinary videos on a page. We install the package with npm by running the following command in the project’s root directory:

npm i cloudinary-react

With the installation complete, we start the Next.js application using the command below:

npm run dev

Once run, the command spins up a local development server which we can access on http://localhost:3000.

In the “index.js” file in the “pages” folder, we replace the boilerplate code with the code below, which renders a Cloudinary video.

import { Video, CloudinaryContext } from 'cloudinary-react';

export default function IndexPage() {
  return (
    <div className='App'>
      <h1>Create youtube-like `click to unmute` feature in Next.js</h1>
      <div className='video-area'>
        <CloudinaryContext cloudName='chukwutosin'>
          <Video
            publicId='Dog_Barking'
            controls
            muted={true}
            width='500px'
            autoPlay
          />
        </CloudinaryContext>
      </div>
    </div>
  );
}

In the code above, we first imported two components: Video, which renders the video onto the page, and CloudinaryContext, which takes as props any data we want to make available to its child Cloudinary components.

The cloudName prop is our Cloudinary cloud name which we get from our Cloudinary dashboard.

We use the Video component to render a 500px-wide video player that has controls, autoplays, and is muted on playback. We then pass a prop called publicId, which is the public ID of the video we want to render. A public ID is a unique identifier for a media asset stored on Cloudinary.
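To make the roles of cloudName and publicId concrete, here is a minimal sketch of how the two values combine into a Cloudinary delivery URL. The helper name buildVideoUrl and the mp4 default are assumptions made for illustration; the Video component builds its own source URLs internally, so this only shows where each value ends up.

```javascript
// Hypothetical helper (not part of cloudinary-react): shows how the cloud
// name and public ID map onto Cloudinary's video delivery URL pattern.
function buildVideoUrl(cloudName, publicId, format = "mp4") {
  if (!cloudName || !publicId) {
    throw new Error("Both cloudName and publicId are required");
  }
  // Encode each path segment separately so folder separators stay intact.
  const path = publicId.split("/").map(encodeURIComponent).join("/");
  return `https://res.cloudinary.com/${cloudName}/video/upload/${path}.${format}`;
}

console.log(buildVideoUrl("chukwutosin", "Dog_Barking"));
// prints: https://res.cloudinary.com/chukwutosin/video/upload/Dog_Barking.mp4
```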

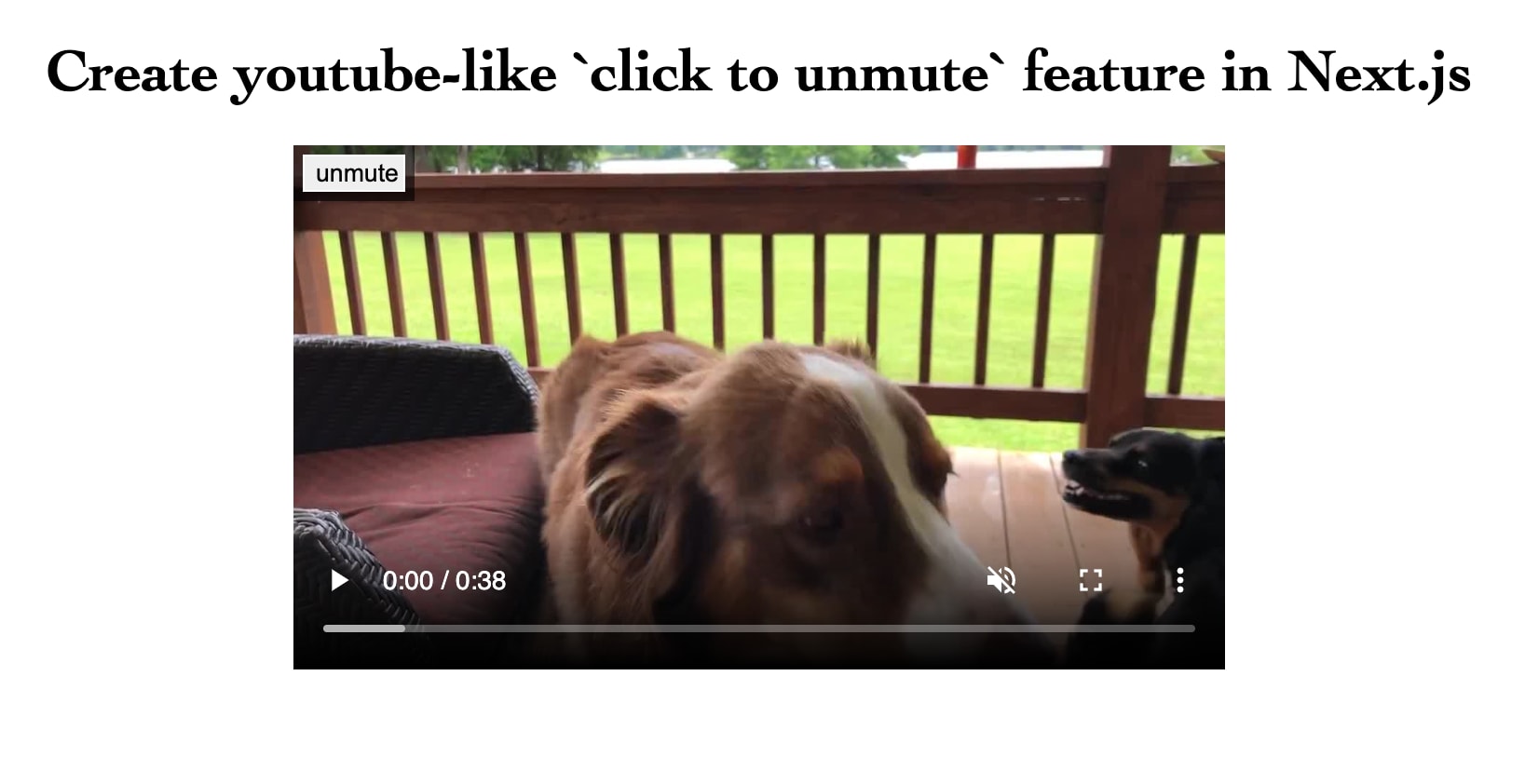

The rendered video should look like this:

We can seamlessly access the methods and properties of the video element using the innerRef prop provided by the cloudinary-react package.

By creating a reference with React’s useRef() hook and assigning it to the innerRef prop, we get a handle on the underlying video element. Once the component has mounted, all of the video element’s properties and methods are available on the .current property of the ref.
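The shape of a ref can be pictured without React at all. The sketch below uses a plain object as a stand-in for what useRef(null) returns, and a fake video object in place of the real element. createRefLike and fakeVideo are illustrative names, not React or Cloudinary APIs.

```javascript
// Stand-in for useRef(null): a ref is an object with a single `current` field.
function createRefLike(initialValue = null) {
  return { current: initialValue };
}

// Fake element exposing the kind of property this article relies on.
const fakeVideo = { muted: true, paused: true };

const videoRef = createRefLike();
console.log(videoRef.current); // null until an element is attached

// React performs the equivalent of this assignment when the element mounts.
videoRef.current = fakeVideo;
console.log(videoRef.current.muted); // true
```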

We create a ref and assign it with:

import { useRef } from 'react'; // change 1
import { Video, CloudinaryContext } from 'cloudinary-react';

export default function IndexPage() {
  const videoRef = useRef(); // change 2

  return (
    <div className='App'>
      <h1>Create youtube-like `click to unmute` feature in Next.js</h1>
      <div className='video-area'>
        <CloudinaryContext cloudName='chukwutosin'>
          <Video
            publicId='Dog_Barking'
            controls
            muted
            width='500px'
            innerRef={videoRef} // change 3
            autoPlay
          />
        </CloudinaryContext>
      </div>
    </div>
  );
}

When playback starts, the video is muted. Clicking the unmute button should then turn the sound on.

We do this by changing the state of the underlying HTML video element.

We create a function to handle the click with:

import { Video, CloudinaryContext } from "cloudinary-react";
import { useRef } from "react";

export default function IndexPage() {
  const videoRef = useRef();

  const unmute = () => {
    const video = videoRef.current;
    video.muted = false;
  };

  return (
    // render component here
  );
}

First, we created a function named unmute that runs when the button is clicked.

We then get the video element from the .current property of the ref. Next, we set the muted property of the video to false.
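Because videoRef.current is only populated after the video element mounts, a slightly more defensive version of the handler can be sketched as follows. Here the function takes the ref as a parameter and plain objects stand in for the video element so the snippet runs outside the browser. Both choices are assumptions made for illustration.

```javascript
// Defensive variant of the unmute handler: does nothing if the ref is empty.
function unmuteVideo(videoRef) {
  const video = videoRef.current;
  if (!video) {
    return false; // element not mounted yet, nothing to unmute
  }
  video.muted = false;
  return true;
}

// Stand-ins for a mounted and an unmounted ref.
const mountedRef = { current: { muted: true } };
const emptyRef = { current: null };

console.log(unmuteVideo(mountedRef), mountedRef.current.muted); // true false
console.log(unmuteVideo(emptyRef)); // false
```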

Next, we create a regular HTML button element labeled unmute and add an onClick handler like so:

import { useRef } from 'react';
import { Video, CloudinaryContext } from 'cloudinary-react';

export default function IndexPage() {
  ...
  return (
    <div className='App'>
      ...
      <div className='button-area'>
        <button type='button' onClick={() => unmute()}>
          unmute
        </button>
      </div>
      ...
  );
}

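The unmute call is wrapped in an arrow function so that it runs only when the button is clicked. Writing onClick={unmute()} would instead call the function while the component renders. Passing the function reference directly, as in onClick={unmute}, would also work.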
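The difference between passing a handler and calling it can be seen in plain JavaScript. This sketch assumes nothing about React: it simply builds objects shaped like the button's props and counts how often a stand-in unmute function runs.

```javascript
let calls = 0;
const unmute = () => {
  calls += 1;
};

// Building props the way a render would: the handler is stored, not run.
const wrappedProps = { onClick: () => unmute() };
const referenceProps = { onClick: unmute }; // same effect, no wrapper
const callsAfterSafeRender = calls;

// Calling the function here runs it right away and stores its return value.
const immediateProps = { onClick: unmute() };
const callsAfterImmediate = calls;

console.log(callsAfterSafeRender, callsAfterImmediate, typeof immediateProps.onClick);
// prints: 0 1 undefined
```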

We need to properly style our app to align the different elements, especially the unmute button. We want the button to sit on the video element in the top left corner.

We do this by deleting the boilerplate styles in the globals.css file in the styles folder and then adding the following styles:

.App {
  font-family: Cambria, Cochin, Georgia, Times, "Times New Roman", serif;
  text-align: center;
  margin: 0;
  padding: 0;
  width: auto;
  height: 100vh;
}

h3 {
  color: rgb(46, 44, 44);
}

.video-area {
  position: relative;
  width: 500px;
  margin: auto;
  left: calc(50% - 200px);
}

.button-area {
  left: 50%;
  margin-left: -250px;
  width: 55px;
  height: 20px;
  padding: 5px;
  position: absolute;
  z-index: 1;
  background: rgba(0, 0, 0, 0.6);
}

button {
  text-align: center;
  border: solid 1px rgb(255, 255, 255);
  width: 100%;
  height: 20px;
}

Because the create-next-app boilerplate imports globals.css in pages/_app.js, Next.js applies these styles to our page automatically.

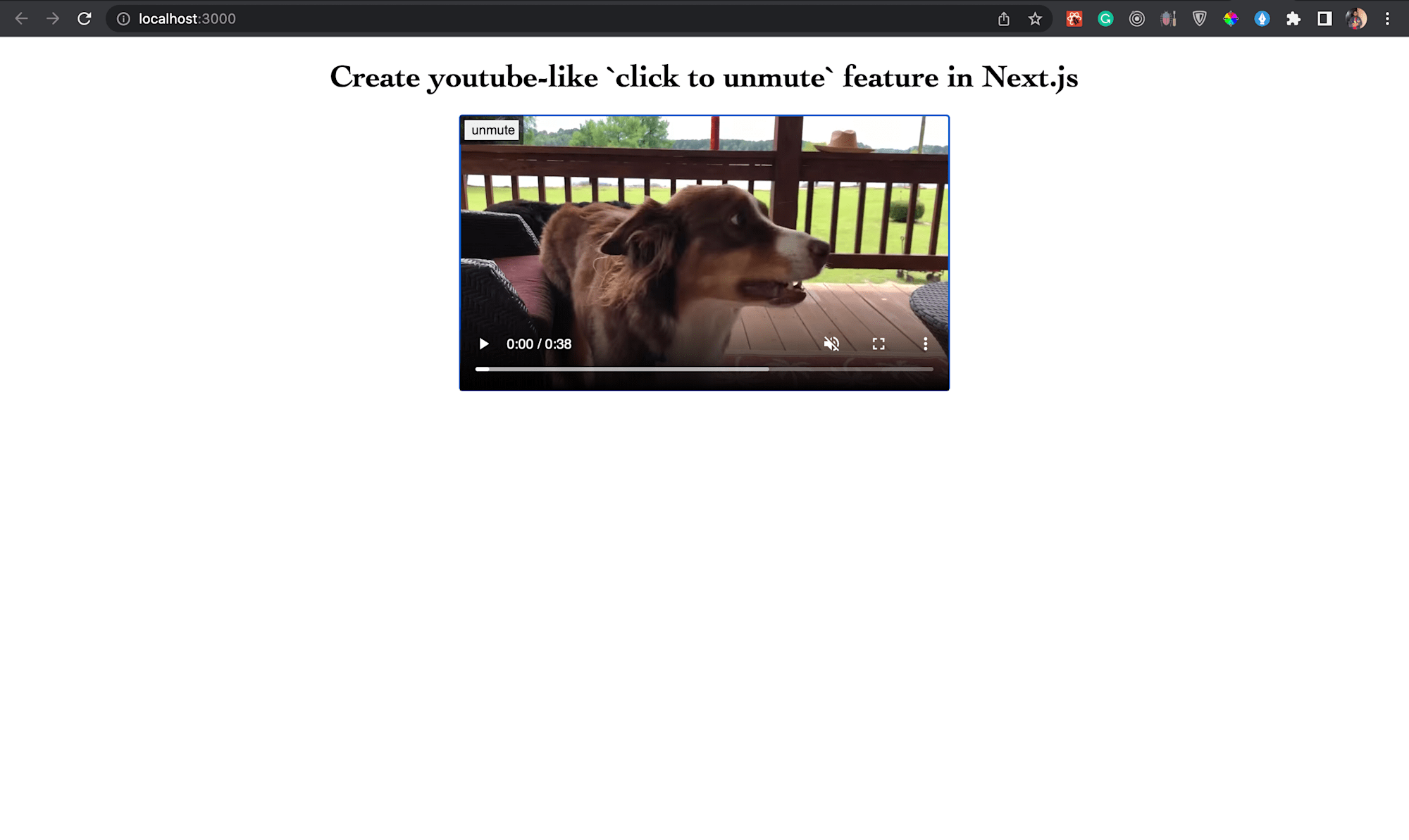

After completing that step, we should see our app look like this:

Now, when our video starts playing, it is muted, and as soon as the unmute button is clicked, the sound comes on.
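One optional refinement, which is not part of the original project, is to react to the video's volumechange event so that the button can be hidden once the sound is on. The sketch below is an assumption-laden illustration: FakeVideo is a hypothetical stand-in for the real element, showUnmuteButton is an illustrative variable, and the snippet relies on the global EventTarget and Event classes available in browsers and in Node 15 or later.

```javascript
// Minimal stand-in for a <video> element: changing `muted` dispatches
// `volumechange`, loosely mirroring what browsers do for media elements.
class FakeVideo extends EventTarget {
  #muted = true;

  get muted() {
    return this.#muted;
  }

  set muted(value) {
    const next = Boolean(value);
    if (next === this.#muted) return; // no change, no event
    this.#muted = next;
    this.dispatchEvent(new Event("volumechange"));
  }
}

const video = new FakeVideo();
let showUnmuteButton = video.muted; // button is visible while muted

video.addEventListener("volumechange", () => {
  showUnmuteButton = video.muted;
});

video.muted = false; // what the unmute handler does
console.log(showUnmuteButton); // false
```

In the real component, the same listener could update a piece of React state that controls whether the button is rendered.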

This article showed how to autoplay a muted video in Next.js and unmute it when a button is clicked.

You may find these resources helpful.