Allowing your users to upload their own images to your website can increase user engagement, retention and monetization. However, allowing users to upload any image they want may lead some of them to upload inappropriate images to your application. These images may offend other users or even cause your site to violate standards or regulations.

If you have any ads appearing on your site, you also need to protect your advertisers’ brands by ensuring that they don’t appear alongside any adult content. Some advertising networks are very intolerant and blacklist any website that displays adult content, even if that content was submitted by users.

Cloudinary’s image management solution already provides a manual moderation web interface and API to help do this efficiently. However, manual moderation is time-consuming and not instantaneous, so we wanted to provide an additional option: automatic moderation of images as your users upload them.

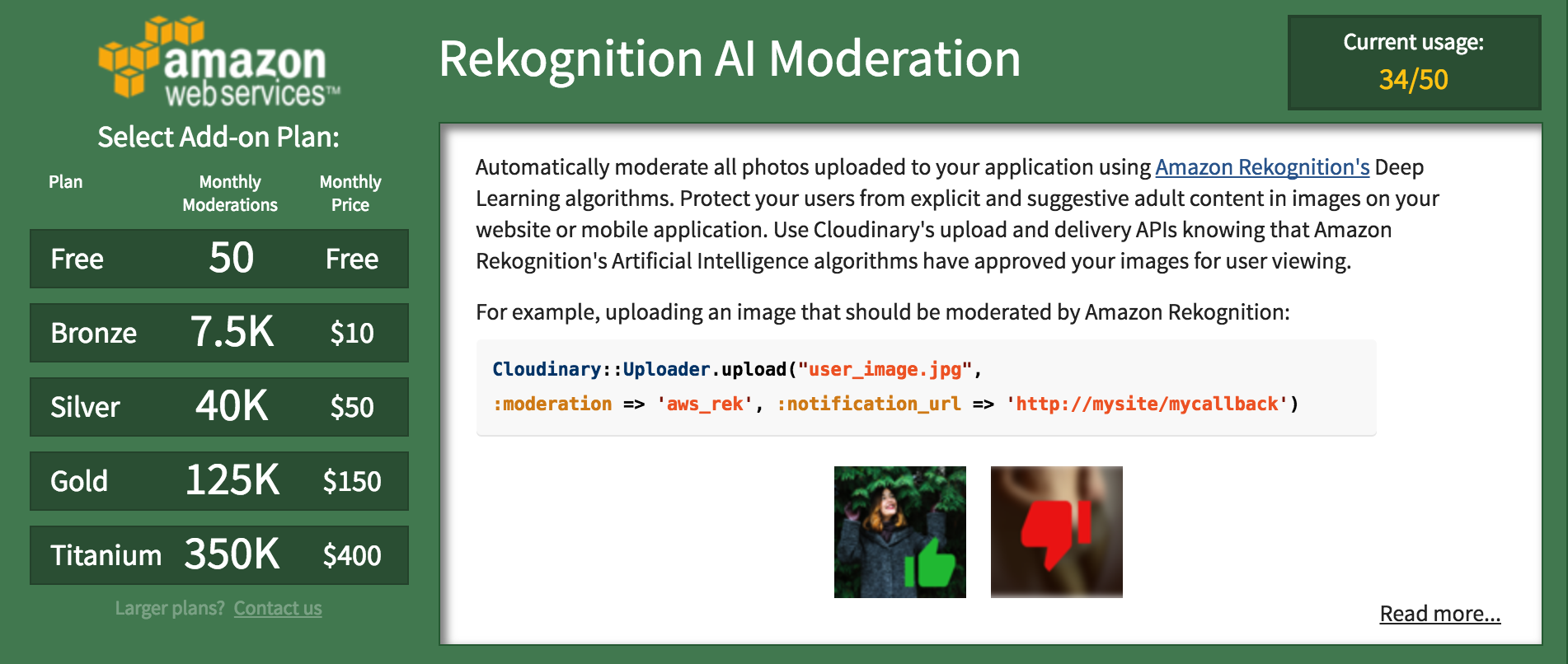

Cloudinary provides an add-on for Amazon Rekognition’s image moderation service based on deep learning algorithms, fully integrated into Cloudinary’s image management and manipulation pipeline. With Amazon Rekognition’s AI Moderation add-on, you can extend Cloudinary’s powerful cloud-based image media library and delivery capabilities with automatic, artificial intelligence-based moderation of your photos. Protect your users from explicit and suggestive adult content in your user-uploaded images, making sure that no offensive photos are displayed to your web and mobile viewers.

To request moderation while uploading an image, simply set the moderation upload API parameter to aws_rek:

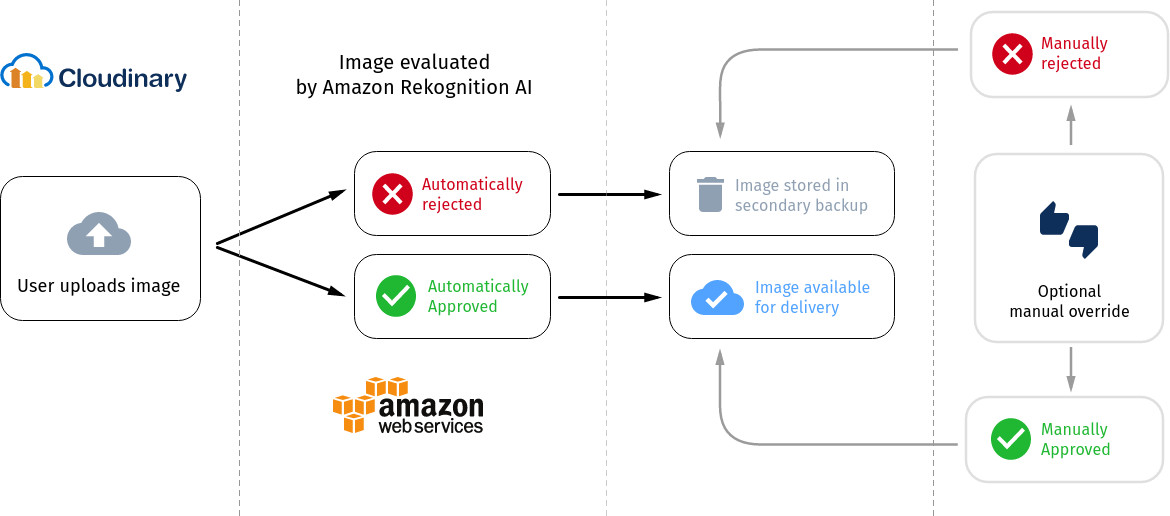
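As a minimal sketch of the upload request described above, the helper below passes `moderation="aws_rek"` to an upload function. It assumes the Cloudinary Python SDK (`cloudinary.uploader.upload`) is installed and configured with your account credentials. The helper name `upload_with_moderation` and the file name in the usage note are hypothetical, chosen only for illustration.

```python
def upload_with_moderation(path, upload=None):
    """Upload the file at `path` and request Amazon Rekognition moderation.

    `upload` defaults to the Cloudinary SDK's upload function; it can be
    replaced with any callable of the same shape, for example in tests.
    """
    if upload is None:
        # Imported lazily so the sketch can be exercised without the SDK.
        # Assumption: the SDK is installed and configured (e.g. CLOUDINARY_URL).
        import cloudinary.uploader
        upload = cloudinary.uploader.upload
    # The moderation upload parameter is set to "aws_rek", as described above.
    return upload(path, moderation="aws_rek")
```

With the SDK configured, a call such as `upload_with_moderation("local_file.jpg")` would return the upload response, including the moderation result.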

The uploaded image is automatically sent to Amazon Rekognition for moderation. Amazon Rekognition assigns a moderation confidence score (0 – 100) indicating the likelihood that the image belongs to an offensive content category. The default moderation confidence level is 0.5 (that is, 50%) unless specifically overridden (see below). Any image that Amazon Rekognition scores above the moderation confidence level is classified as ‘rejected’; otherwise, its status is set to ‘approved’. All results are included in the upload response.
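The approve/reject rule can be illustrated with a few lines of Python. This is only a sketch of the rule as described here, not the add-on's actual implementation. It assumes that the 0 to 100 score is compared against the confidence level expressed as a fraction (so 0.5 corresponds to a score of 50). The function name `classify` is hypothetical.

```python
DEFAULT_CONFIDENCE_LEVEL = 0.5  # default level stated in the text


def classify(score, confidence_level=DEFAULT_CONFIDENCE_LEVEL):
    """Return 'rejected' or 'approved' for a moderation score.

    `score` is on the 0-100 scale; `confidence_level` is a fraction
    between 0 and 1 (assumption: the two are compared as percentages).
    """
    # Only scores strictly greater than the level lead to rejection.
    if score / 100.0 > confidence_level:
        return "rejected"
    return "approved"
```

Under these assumptions, raising the confidence level for a category makes the moderation more permissive for that category, because a higher score is needed before an image is rejected.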

This means that your user can be alerted immediately if their image is rejected, which improves the end-user experience: there is no waiting for approval, and no image suddenly disappearing at a later date due to manual moderation. A rejected image is moved to a secondary backup repository and is not automatically delivered. If you choose, you can manually review rejected images and mark them as approved if appropriate, as described later in this article.
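To show how the moderation result could drive immediate feedback, here is a small sketch that reads the status from an upload response and turns it into a message for the user. The response shape used here (a `moderation` list whose entries carry `kind` and `status` keys) is an assumption based on the description above, so check it against a real response. The function names and message texts are hypothetical.

```python
def aws_rek_status(upload_result):
    """Return the Amazon Rekognition moderation status, or None if absent.

    Assumption: the upload response contains a "moderation" list of dicts,
    each with a "kind" and a "status" key.
    """
    for entry in upload_result.get("moderation", []):
        if entry.get("kind") == "aws_rek":
            return entry.get("status")
    return None


def user_message(upload_result):
    """Build an illustrative message to show right after the upload."""
    status = aws_rek_status(upload_result)
    if status == "rejected":
        return "Sorry, this image was not accepted. Please try another one."
    if status == "approved":
        return "Your image was uploaded successfully."
    return "Your image was uploaded and is awaiting review."
```

Because the status arrives with the upload response, the message can be shown in the same request that handled the upload.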

The automatic moderation can be configured for different content categories, allowing you to fine-tune what kinds of images you deem acceptable or objectionable. By default, any image that Amazon Rekognition determines to be adult content with a confidence score of over 50% is automatically rejected. This minimum confidence score can be overridden for any of the categories (see the documentation for a breakdown of the available categories). For example, you can request moderation for the supermodel image with the moderation confidence level set to 0.75 for the ‘Female Swimwear or Underwear’ sub-category and 0.6 for the ‘Explicit Nudity’ top-level category (overriding the default for all its child categories as well), while excluding the ‘Revealing Clothes’ category.
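The per-category overrides in this example could be expressed by extending the `aws_rek` value of the moderation parameter. The sketch below builds such a value. The colon-separated format, the category identifiers (`female_underwear`, `explicit_nudity`, `revealing_clothes`) and the `ignore` keyword are assumptions made for illustration, so confirm the exact syntax in the add-on documentation. The helper name `build_moderation_param` is hypothetical.

```python
def build_moderation_param(overrides):
    """Build a moderation parameter value with per-category overrides.

    `overrides` maps a category identifier to a confidence level between
    0 and 1, or to the string "ignore" to exclude that category.
    Assumed format: "aws_rek:<category>:<level>:<category>:<level>..."
    """
    parts = ["aws_rek"]
    for category, level in overrides.items():
        if level != "ignore" and not 0 <= level <= 1:
            raise ValueError(f"confidence level out of range for {category}")
        parts.append(f"{category}:{level}")
    return ":".join(parts)


# The example from the text, using the assumed category identifiers.
supermodel_moderation = build_moderation_param({
    "female_underwear": 0.75,
    "explicit_nudity": 0.6,
    "revealing_clothes": "ignore",
})
```

The resulting string would then be passed as the moderation upload parameter in place of the plain `aws_rek` value.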

No matter how powerful and reliable an artificial intelligence algorithm is, it can never be 100% accurate, and in some cases of moderation, “accuracy” might be subjective. Furthermore, you may configure your moderation tool to err on the conservative side, which may occasionally result in the rejection of an image that would have been acceptable.

While automated moderation minimizes effort and provides instantaneous results, you may sometimes want to adjust the moderation status of a specific image manually.

You can use the API or the web interface to alter the automatic moderation decision. You can browse rejected or approved images, and then manually approve or reject them as needed. If you approve a previously rejected image, the original version of the image is restored from backup. If you reject a previously approved image, cache invalidation is performed so that the image is erased from all the CDN cache servers.
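A manual override through the API might look like the following sketch. It assumes the Cloudinary Python SDK's Admin API functions `cloudinary.api.resources_by_moderation` and `cloudinary.api.update` (with a `moderation_status` option), and a configured account. The wrapper names and the public ID in the usage note are hypothetical.

```python
def list_moderated(status, list_fn=None):
    """List images with the given aws_rek moderation status."""
    if list_fn is None:
        # Assumption: the SDK is installed and configured with credentials.
        import cloudinary.api
        list_fn = cloudinary.api.resources_by_moderation
    return list_fn("aws_rek", status)


def set_moderation_status(public_id, status, update=None):
    """Manually approve or reject an image that was moderated automatically."""
    if status not in ("approved", "rejected"):
        raise ValueError("status must be 'approved' or 'rejected'")
    if update is None:
        import cloudinary.api
        update = cloudinary.api.update
    return update(public_id, moderation_status=status)
```

For instance, after reviewing the results of `list_moderated("rejected")`, calling `set_moderation_status("some_public_id", "approved")` would approve an image that was rejected by mistake.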

For more information, see the detailed documentation.

Moderating your user-generated images is important to protect your brand and keep your users and advertisers happy. With the Amazon Rekognition AI Moderation add-on, you can provide feedback within the upload stream and fine-tune the filters that you use to determine what kinds of images you deem acceptable or objectionable. Automated moderation can help you to improve photo sharing sites, forums, dating apps, content platforms for children, eCommerce platforms and marketplaces, and more.

The add-on is available with all Cloudinary plans, with a free tier for you to try it out. If you don’t have a Cloudinary account yet, sign up for a free account here.