Fidelity in images is about visually preserving the original; appeal is about hiding the compression artifacts. Depending on your priority, you would compress images with either of these approaches to reduce the file size while still maintaining a reasonable level of visual “quality”:

- If fidelity is more important, you’d aim at being visually lossless. That is, no difference is visible between the original and the compressed image.

- If appeal trumps, you’d want to avoid annoying compression artifacts like blockiness or color banding, shooting for a compressed image that just “looks good.” That is, barring a comparison with the original, compression modifies the image in a way that avoids tell-tale signs of lossy compression.

Image fidelity, in a broader sense, can refer to accurately rendering or digitizing an image without any visible distortion or information loss. This concept goes beyond merely preserving the original in a compressed form. It encompasses the ability to convert analog media to a digital image with high precision, ensuring that the digitized image remains true to the original in all its aspects.

It’s important to distinguish between image fidelity and image quality. Image quality often relates to subjective preferences for one image over another and can be influenced by factors like color, contrast, and general aesthetics. Image fidelity, on the other hand, is more objective and focused on the accuracy of image representation. This distinction is crucial in understanding the goals of different compression techniques.

In the past, fidelity and appeal meant basically the same thing: low fidelity implied low appeal, and the only way to achieve high appeal was through high fidelity. Consequently, the two notions became conflated into a single notion of “quality.” That’s no longer the case. Nowadays, advanced compression techniques can produce low-fidelity images with high appeal—something simpler formats like JPEG and color-quantized PNG just cannot deliver.

If appeal is the focus, aim for higher compression ratios by, for example, smoothing away subtle textures, significantly reducing the entropy of the image. This low-fidelity-high-appeal approach can be deceptive, however. Even though the image codec changes the image visibly for compression, the image still looks good, and you can’t tell that it’s been altered.

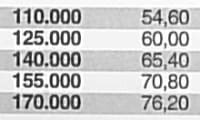

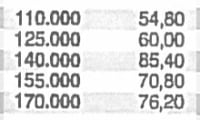

Consider this 22-KB AVIF:

Heavily compressed with a ratio of 87:1, it remains relatively sharp. For comparison, see this 22-KB JPEG:

You can immediately tell that this image has been compressed way too aggressively. That’s by far not as obvious in the AVIF.

Sure, if you compare the overcompressed AVIF to a high-fidelity version of the image, you’ll readily notice that a lot of the fine details have been removed, as shown here:

However, if you’ve never seen the original (as is the case for images on the web), you wouldn’t be privy to whether the woman’s skin texture is actually as smooth as it appears or whether that’s a compression artifact.

Let’s look at another example: a picture of The Red House in Youghal, Ireland:

It looks OK at a glance. However, a closer look at the left side of the house would reveal that the bricks in the wall have been eliminated with smoothing filters:

Serious errors could result if, in quest of a denser compression, image codecs change an image semantically. A well-known example is the problem Xerox encountered in 2013, when lossy JBIG2 compression caused numbers to mysteriously change in scanned documents, e.g., a 6 turned into an 8. That fiasco prompted German and Swiss regulators to, in 2015, forbid the use of JBIG2 compression altogether for legal documents.

The cause of those errors was JBIG2’s pattern-matching mechanism, which optimizes compression by reapplying similar patterns. Though a remarkable feature when used with care, that capability could lead to grave consequences if, say, it changes a Q on a license plate to an O or a 6 in a tax declaration to an 8.

Modern image codecs also offer coding tools that perform similar tasks. Examples are the intra block copy in AVIF and HEIC, and patches in JPEG XL—all effective and helpful tools when prudently used for high-fidelity encoding. In the case of low-fidelity, high-appeal encoding, however, they could churn out unexpected results.

Intuitively, people—even experts—evaluate image codecs based on the appeal of the compressed images. Typically, in subjective experiments, those images are placed next to the originals, and test subjects are asked to rate the visual quality according to the five-level scale described in Recommendation BT.500 of the International Telecommunication Union (ITU): 1 for “very annoying;” 2, “annoying;” 3, “slightly annoying;” 4, “perceptible but not annoying;” and 5, “imperceptible.” The ratings are then averaged to obtain the Mean Opinion Score (MOS).

Note that the evaluation scale refers to appeal, not fidelity. Hence, the question asked of test subjects is “How annoying are the artifacts?” and not “How true is the image to the original?” As long as the distortions are not annoying, the MOS is deemed acceptable.

Side-by-side comparisons produce meaningful results only at relatively low fidelity, whereby the difference between the images is obvious enough to be perceived by most. In fact, the whole premise of “spot the seven differences” puzzles is that it’s tough to compare two similar images side by side. For high-fidelity comparisons, you can conduct a flip test (aka flicker test), which is a much more sensitive evaluation methodology.

When evaluating image codecs based on MOS in side-by-side comparisons, you’d recognize the appeal of the codecs in low-fidelity, low-bitrate encodings. Even though that’s interesting insight, refrain from extrapolating many conclusions from it. That’s because codecs that are effective in low-fidelity, high-appeal compression—e.g., those that can achieve the highest MOS at 0.1 bit per pixel (bpp)—are not necessarily also good at high-fidelity compression, e.g., at 1 bpp. Similarly, objective perceptual metrics (e.g., PSNR, SSIM, VMAF, or SSIMULACRA) that correlate well with side-by-side, subjective results and are thus good at measuring appeal, are not necessarily as effective in measuring fidelity, and vice versa.

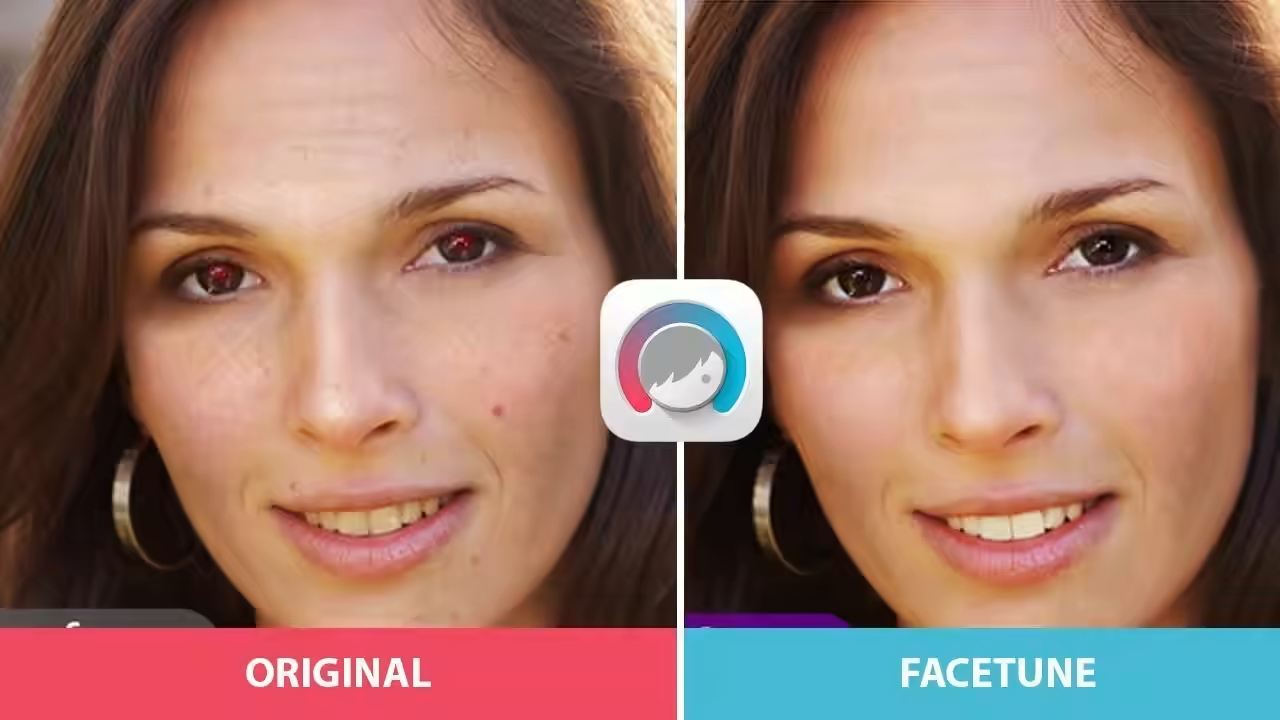

Unintentional side effects might emerge if you conflate appeal with fidelity. For example, low-fidelity, high-appeal compression tends to apply smoothing that, on human faces, could obscure the natural skin texture, just as foundation makeup does. That phenomenon can even occur at relatively high-quality compression settings if the codec was built and optimized for appeal, not fidelity.

Though a bit far-fetched, low-fidelity codecs might contribute to cultural beauty ideals by unintentionally but systematically photoshopping images to hide pores and wrinkles, hence subtly but effectively reinforcing the pressure on women, in particular, to conform to certain cosmetic ideals. The whole point of the body positivity movement is to stop retouching images to maintain unrealistic standards of feminine beauty like smooth skin, body size, and elimination of imperfections. It would be a social regression if, instead of stopping the photoshopping, such a task becomes obligatory and automated because that’s what the compression codec happens to do.

An example is this FaceTune, in which you can compare a real selfie (on the left) with a Facetune-retouched image (on the right). By applying low-fidelity, high-appeal AVIF compression on the original, you’d obtain a comparable result in terms of airbrushing:

This animation alternates between the original image and a low-fidelity AVIF version of it.

This animation alternates between the original image and a low-fidelity AVIF version of it.

All lossy image codecs apply different amounts of compression to the luma (the grayscale version of the image) and to the chroma (the color information). Since our eyes are less sensitive to chroma than to luma, those codecs tend to apply lossy compression more aggressively to the chroma. Even uncompressed digital images are represented in a quantized color space, where the number of shades of red, green, and blue is limited, typically to 256, i.e., 8 bits per channel. Many lossy image codecs then convert the RGB colors to YCbCr colors, effectively further reducing the number of shades of blue and red to about 100. And that’s before the real lossy part starts.

As a result, low-fidelity lossy compression can introduce subtle color shifts and cause more artifacts to appear in the dark regions of an image. In the case of images of humans, the skin tones can subtly change (for example, Asian skin tones could slightly desaturate and look less yellow), and images of people with a dark skin could suffer more from compression artifacts. Again, though obviously not by design, applying low-fidelity, high-appeal compression can produce unexpected and undesirable side effects.

In the future, those downsides might turn into a more serious issue. The current generation of classic image codecs are based on sophisticated but well-understood and predictable transformations and entropy coding mechanisms. However, highly experimental but promising are AI-based codecs that deploy neural networks for even more compression. Those approaches are not practical yet on the current hardware but might well be down the road. A big concern is that the related codecs can be extremely low in fidelity yet high in appeal, i.e., very deceptive. And since they are based on training on big datasets, they will inherit and possibly reinforce any bias that exists in those datasets. In other words, building those new AI-based codecs without accidentally making them “racist” or otherwise biased could be a big challenge, especially if the focus is on extreme compression ratios and low-fidelity, high-appeal compression.

For insight on “racist AI,” see these articles:

- The Race Problem With AI: “Machines Are Learning to Be “Racist”

- Is AI Doomed to Be Racist and Sexist?

- Stop Calling It Bias. AI Is Racist

- What a Machine Learning Tool That Turns Obama White Can (And Can’t) Tell Us About AI Bias

Perhaps my take above is overdramatic, but I strongly believe that the correct focus for image compression is fidelity, not appeal. As tempting as it is to reduce bandwidth through low-fidelity, high-appeal compression techniques, I recommend that you avoid adopting them. In this world of fake news coupled with malicious image manipulations, we really don’t need another potential source of deception, albeit unintentional.

For improving the browsing experience by accelerating image loads, progressive decoding is a better way. I’ll elaborate on that in a future post.

Take, for example, an e-commerce business. Low-fidelity, high-appeal compression of its product images might seem like a good idea at the outset given the lower bandwidth, with the images remaining sharp while loading faster, which translates to a better browsing experience for visitors and potentially greater sales growth. However, the low-fidelity compression process might have failed to preserve the precise color or subtle texture of the merchandise, such as a shirt’s fabric. The result? Unhappy customers, returns of purchases, and bad reviews.

Keep in mind that display devices can now more accurately reproduce images with enough pixels to match the resolution of the human eye. Also, color gamuts are trending wider, and contrast ratios and dynamic ranges are constantly improving. With all the outstanding display technology that promises to deliver superior image fidelity, it’d be a pity to then throw a big part of the fidelity out the window in deference to compression.

For video, you still need low-fidelity compression for a while given the enormous amount of pixels that video represents (15 gigapixels per minute in the case of a 4K video). But for still images, you can generally afford high-fidelity compression.

Currently, the median JPEG image on the web is compressed to about 1.9 bpp, i.e., a compression ratio of about 13:1. More modern image codecs can improve the compression density and, with the same fidelity, double that ratio. Remember, however, that you can and should also improve the fidelity by compressing images to, say, 1.2 bpp (20:1), simultaneously reaping the benefits of a higher fidelity and better compression.

Here’s what I suggest: don’t compress images with those new codecs to, say, 0.3 bpp (80:1), which can produce perfectly presentable images in a format like AVIF but at the cost of fidelity. That’s because at that point, though your bandwidth savings are more substantial, you’d be deceptively degrading your images. Even if such deception is acceptable for certain use cases, think twice before taking that step. After all, the cost savings might not be worth the unforeseen side effects of deceptive compression.

JPEG XL is a fit for high-fidelity image encoding by design. That format operates in an internal color space, XYB, which was specifically tailored for high-precision and perceptually modeled color encoding to avoid the issues that arise in more traditional YCbCr color spaces, e.g., dark color gradients and reds. Also, with JPEG XL’s coding tools, you can optimally preserve subtle image features. The codec excels in high fidelity up to mathematically lossless encoding.

Furthermore, the JPEG XL reference encoder can automatically perform high-fidelity encoding, which compresses images as much as possible with no visible differences. Traditional image encoders are configured based on technical parameters like quantization factors, which somewhat, but are not guaranteed to, correlate with fidelity. In contrast, the JPEG XL reference encoder is configured based on a perceptual fidelity target.

JPEG XL is competitive at medium fidelity. At low fidelity, it’s currently no match for the high appeal of competitors like AVIF and HEIC. Maybe that’s a feature, not a bug?