Web sites and platforms are becoming increasingly media-rich. Today, approximately 62 percent of internet traffic is made up of images, with audio and video constituting a growing percentage of the bytes.

The web’s ubiquity is making it possible to distribute engaging media experiences to users around the world. And the ecosystem of internet consumers and vendors has crafted APIs that enable developers to harness this power.

Cisco reports that 70 percent of current global internet traffic is video and it’s going to increase to 80 percent by 2020. This is huge!

In addition, considering the current trajectory of how media is consumed, it’s estimated that the next billion users will likely be all mobile. These new users will be diverse in every way: their location, cultural experience, level of computer expertise, connectivity and the types of devices on which they consume media content. Despite the facts about the next generation of users, the mobile web still has a lot of catching up to do.

Let’s take a look at these APIs and what the future holds for us with regard to audio and video on the web.

Consider how the mobile web has functioned over time since the dot-com bubble. It didn’t play a big part in the audio and video innovation that has taken place so far. Why?

The mobile web was faced with a number of challenges:

- Buffering: Flash was prevalent in the early days of the web, when mobility wasn’t even a consideration. In most cases, we had large audio and video files sitting on servers. When users tried to access them via the mobile web, they took a long time to download, leaving users waiting endlessly for these files to play on their devices.

  Eventually individuals and companies began delivering smaller video files to mobile users. There was no buffering, but the video quality was poor.

- Bad Layout: The layout of video content delivered on mobile was terrible. In fact, many websites still haven’t figured out the optimal way to deliver video content to mobile users. Most times, you still have to scroll to the far left or right of the mobile web page. Sometimes, part of the video gets cut off unless you are using an iPhone.

-

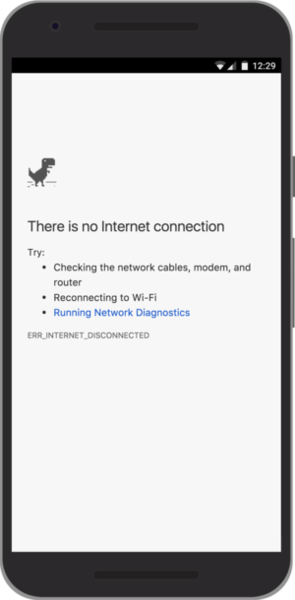

No Offline Capabilities: There was no offline experience for users listening to audio or watching videos. Without internet connectivity, the video/audio stops playing. In many regions, low-end devices are common, and internet connections are very unreliable. In fact, many users in developing countries still find it hard to purchase data. But the mobile web had no capacity for caching and serving content while offline.

It’s no secret that the pace of innovation has accelerated for audio and video in the last decade and the mobile web is now providing a better user experience.

A great video experience must offer:

- Fast playback

- Ability to watch videos anywhere

- Great user interface

- High-quality video playback

Therefore, let’s take a good look at what is available today to provide great video experiences for the next billion users.

It’s well-known that if your video buffers, you lose viewers. The same goes when your video doesn’t start playing fast enough. So to please your viewers, you need to be able to quickly deliver videos.

One way of ensuring fast playback is the adaptive bitrate streaming technique. This technique enables videos to start quicker, with fewer buffering interruptions. Multiple streams of the video with different resolutions, qualities and bitrates are created, and during playback, the video player determines and selects the optimal stream in response to the end user’s internet connectivity. The video player automatically switches between variants (also known as streams) to adapt to changing network connections.
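The selection step described above can be sketched as a small function. This is an illustrative simplification, not how any particular player or service implements it: the `variants` ladder, the `pickVariant` name and the 0.8 safety factor are all assumptions made for the example.

```javascript
// Hypothetical variant ladder: each entry is one encoded stream of the same video.
const variants = [
  { name: '240p', bitrate: 400000 },
  { name: '480p', bitrate: 1200000 },
  { name: '720p', bitrate: 2500000 },
  { name: '1080p', bitrate: 5000000 },
];

// Pick the highest-bitrate variant that fits within the measured bandwidth
// (in bits per second), leaving some headroom. If nothing fits, fall back
// to the lowest-bitrate variant so playback can still start.
function pickVariant(ladder, measuredBps, safetyFactor = 0.8) {
  const sorted = [...ladder].sort((a, b) => a.bitrate - b.bitrate);
  const budget = measuredBps * safetyFactor;
  let choice = sorted[0];
  for (const variant of sorted) {
    if (variant.bitrate <= budget) {
      choice = variant;
    }
  }
  return choice;
}
```

A player would typically run a check like this again whenever its bandwidth estimate changes, which is what lets it switch streams mid-playback.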

Today, we have services like Cloudinary that provide plug-and-play adaptive bitrate streaming with HLS and MPEG-DASH to enable fast video playback. Cloudinary also supports many transformations that you can apply to videos and deliver to users with minimal effort.

In addition, startup time for videos is very important. One good technique for serving videos with little or no startup delay is precaching them with service workers. With service workers, you can precache all your videos on page load, before users start interacting with them. Cloudinary also delivers video via fast content delivery networks (CDNs), which provide advanced caching techniques that offload the caching work from the developer.
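As a rough sketch of precaching with a service worker, the file below stores a fixed list of videos when the worker is installed and answers later requests from the cache first. The cache name, the video URLs and the `precacheVideos` helper are placeholders chosen for this example.

```javascript
// sw.js (sketch). The cache name and video URLs are hypothetical.
const VIDEO_CACHE = 'video-precache-v1';
const VIDEOS_TO_PRECACHE = ['/videos/intro.webm', '/videos/teaser.webm'];

// Open the named cache and store every URL in it.
// `cacheStorage` is passed in so the function can be exercised outside a worker.
async function precacheVideos(cacheStorage, urls) {
  const cache = await cacheStorage.open(VIDEO_CACHE);
  await cache.addAll(urls);
  return urls.length;
}

// Only wire up events when actually running inside a service worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined') {
  self.addEventListener('install', (event) => {
    // Keep the worker in the installing state until all videos are cached.
    event.waitUntil(precacheVideos(caches, VIDEOS_TO_PRECACHE));
  });

  self.addEventListener('fetch', (event) => {
    // Serve from the cache first, falling back to the network.
    event.respondWith(
      caches.match(event.request).then((cached) => cached || fetch(event.request))
    );
  });
}
```

Media elements often issue Range requests, which a production worker would need to handle explicitly; this sketch leaves that out.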

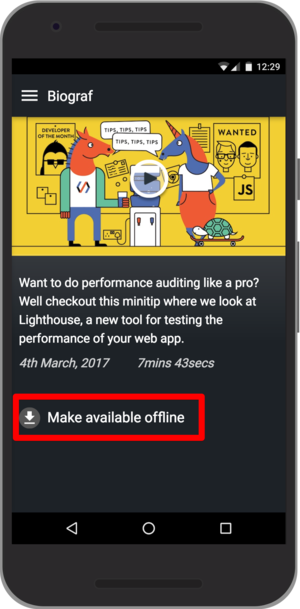

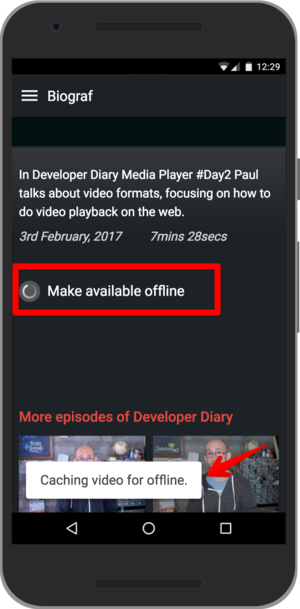

Users should be able to watch videos or listen to audio offline. Again, with service workers, you can control which version(s) of a video or audio file users download. You can create a simple “save for offline viewing/listening” button: once the user clicks it, the service worker caches the video and makes it available when there is no connectivity.
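One possible shape for such a button is sketched below. It writes to the Cache Storage API from the page itself, and because that storage is shared with the service worker on the same origin, a worker's fetch handler can serve the saved entry later. The cache name, the `saveForOffline` function and the `#save-offline` button are assumptions for illustration.

```javascript
// Hypothetical cache name for media the user chose to keep.
const OFFLINE_CACHE = 'offline-media-v1';

// Download `url` and store the response so it can be replayed without a connection.
// `cacheStorage` and `fetchFn` default to the browser globals but can be injected.
async function saveForOffline(
  url,
  cacheStorage = globalThis.caches,
  fetchFn = (input) => fetch(input)
) {
  const response = await fetchFn(url);
  if (!response.ok) {
    throw new Error(`Download failed with status ${response.status}`);
  }
  const cache = await cacheStorage.open(OFFLINE_CACHE);
  await cache.put(url, response);
  return true;
}

// Wire the function to a button, assuming the page contains
// <button id="save-offline" data-video-url="..."> (a hypothetical element).
if (typeof document !== 'undefined') {
  const button = document.querySelector('#save-offline');
  if (button) {
    button.addEventListener('click', () => {
      saveForOffline(button.dataset.videoUrl)
        .then(() => { button.textContent = 'Saved for offline'; })
        .catch((error) => console.error(error));
    });
  }
}
```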

Another tool you can use with service workers is background fetch. By default, service workers are killed when the user navigates away from a site or closes the browser. With background fetch, downloads continue in the background even after the user navigates away.
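A minimal sketch of starting such a download is shown below, assuming a browser that exposes the Background Fetch API on the service worker registration. The `downloadInBackground` helper, the `'episode-1'` id and the video URL are made up for the example.

```javascript
// Start a background download of one video. Resolves to null when the
// Background Fetch API is unavailable, so callers can fall back to a normal fetch.
async function downloadInBackground(swRegistration, id, videoUrl, title) {
  if (!swRegistration || !('backgroundFetch' in swRegistration)) {
    return null;
  }
  // The browser shows `title` in its download UI while the fetch is running.
  return swRegistration.backgroundFetch.fetch(id, [videoUrl], { title });
}

// Usage in a page (assumes a service worker is already registered):
//
//   const registration = await navigator.serviceWorker.ready;
//   await downloadInBackground(registration, 'episode-1', '/videos/episode-1.webm', 'Episode 1');
```

Returning `null` instead of throwing is a design choice for this sketch: it keeps feature detection in one place, since the API is not available in every browser.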

Imagine being able to cut down your video file size by 40 percent, while preserving the same quality. Today, we have VP9, the WebM project’s next-generation open source video codec. VP9 offers:

- 15 percent better compression efficiency

- Support on more than 2 billion devices

- Higher-quality videos

Google’s VP9 codec was used mainly on YouTube before the public adopted it more widely. This compression technology brought great gains for YouTube, which saw videos start 15 to 18 percent faster with VP9 than with other codecs, and 50 percent less buffering. Native support for the VP9 video codec already exists across media devices, laptops and browsers.

Another codec worth mentioning is HEVC (High Efficiency Video Coding), also known as H.265, the successor to AVC (Advanced Video Coding). It offers very good compression while retaining quality, but it is processor intensive. Licensing fees are one of the main reasons HEVC adoption has been low on the web.
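Because codec support varies by device, a page usually has to pick among several encodings of the same video. The sketch below tries candidates in order of preference. The `pickCodec` function and the `CANDIDATES` list are hypothetical, and the codec strings are only common examples, so check them against the profiles you actually encode.

```javascript
// Candidate formats in order of preference. The codec strings are examples:
// VP9 profile 0, HEVC main profile, and H.264/AVC baseline as the fallback.
const CANDIDATES = [
  { codec: 'vp9', type: 'video/webm; codecs="vp09.00.10.08"' },
  { codec: 'hevc', type: 'video/mp4; codecs="hvc1.1.6.L93.B0"' },
  { codec: 'h264', type: 'video/mp4; codecs="avc1.42E01E"' },
];

// Return the name of the first candidate the support check accepts,
// or null when none of them can be played.
function pickCodec(isSupported, candidates = CANDIDATES) {
  const match = candidates.find((candidate) => isSupported(candidate.type));
  return match ? match.codec : null;
}

// Usage in a browser, using the standard canPlayType() check:
//
//   const video = document.createElement('video');
//   const codec = pickCodec((type) => video.canPlayType(type) !== '');
```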

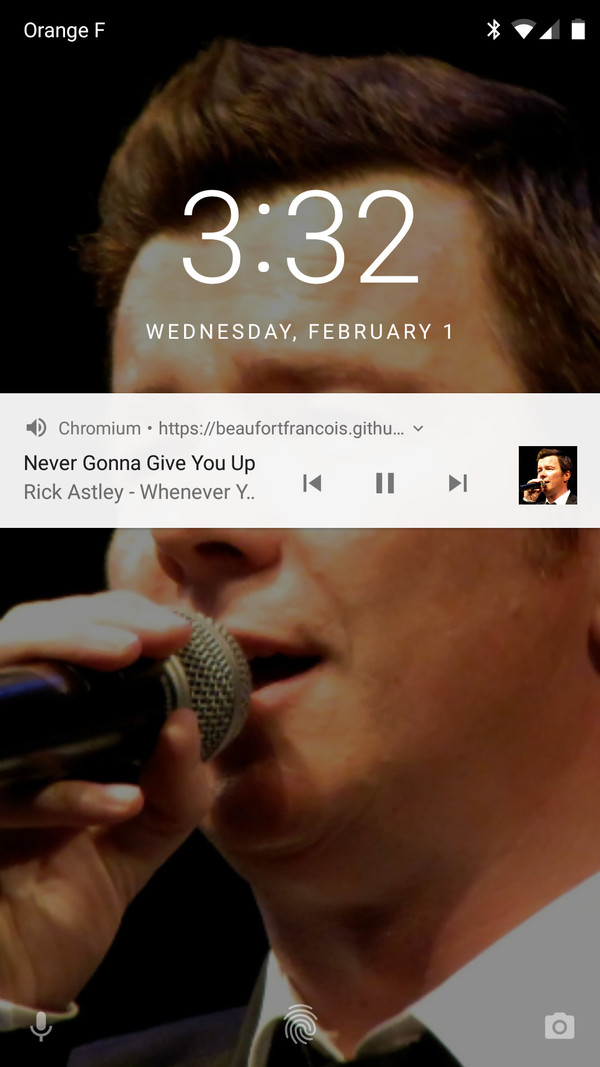

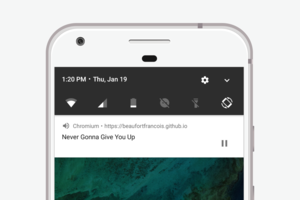

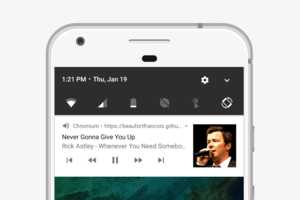

Today, we have the opportunity to provide even better experiences for our users. One of these experiences is enabling the user to listen to/control an audio or video playlist from a device’s lock screen or from the notifications tab. How is that possible? It’s via the Media Session API. The Media Session API enables you to customize media notifications by providing metadata for the media your web app is playing. It also enables you to handle media-related events, such as seeking or track changing, which may come from notifications or media keys.

*Source: developers.google.com*

Setting the media session:

function setMediaSession() {

if (!('mediaSession' in navigator)) {

return;

}

let track = playlist[index];

navigator.mediaSession.metadata = new MediaMetadata({

title: track.title,

artist: track.artist,

artwork: track.artwork

});

navigator.mediaSession.setActionHandler('previoustrack', playPreviousVideo);

navigator.mediaSession.setActionHandler('nexttrack', playNextVideo);

navigator.mediaSession.setActionHandler('seekbackward', seekBackward);

navigator.mediaSession.setActionHandler('seekforward', seekForward);

}

Another core part of an improved user experience is automatic fullscreen mode capabilities. When a user changes the orientation of the phone to landscape, the video player should automatically assume full screen mode. This capability is made possible by the [Screen Orientation API](https://developer.mozilla.org/en/docs/Web/API/Screen/orientation).

var orientation = screen.orientation || screen.mozOrientation || screen.msOrientation;

orientation.addEventListener('change', () => {

if (orientation.type.startsWith('landscape')) {

showFullScreenVideo();

} else if (document.fullscreenElement) {

document.exitFullscreen();

}

});

We have explored various options available to provide an enhanced media experience for users in today’s world. Let’s quickly look at what we can expect in the future and how to prepare for it.

There are new sets of standards designed to enhance the display of colors on our devices – from phones to TVs to monitors and more.

You may not realize that your display screens cannot correctly reproduce all the colors you can see with your eyes. The new video standards around BT.2020 dramatically extend these colors.

Today’s standard monitors have some challenges. They don’t have the capacity to display the full range of brightness and darkness. In essence, blacks aren’t really that black and the brights aren’t really that bright. However, a new set of functions for converting digital values of brightness into what actually gets displayed on the screen is on the horizon.

The ability to know which devices can support High Dynamic Range (HDR) is key. Currently it is possible to do that with Chrome Canary.

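// Assumed example codec string (VP9 Profile 2, 10-bit), the kind of stream HDR video uses.

var canPlay = MediaSource.isTypeSupported('video/webm; codecs="vp09.02.10.10"');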

The Alliance for Open Media, whose members include YouTube, Google, Amazon, Microsoft, Twitch, Mozilla, Hulu, Netflix and the BBC, is now working on a new open source compression format that targets HDR, wide color gamut, 4K and 360 video, while also providing a demanding low-bitrate solution for the billions of people who have slow internet connections.

This new format is the AV1 codec. At the time of this writing, the codec is still in development, but there have been signs of significant progress. One noteworthy sign is that the AV1 codec is already achieving 20 percent better compression than VP9.

For VP9, there is support to determine if a device supports the codec and if it has the necessary hardware to render the frames and efficiently use power. Check this out:

if ('mediaCapabilities' in navigator) {

// Get media capabilities for 640x480 at 30fps, 10kbps

const configuration = { type: 'file', video: {

contentType: 'video/webm; codecs="vp09.00.10.08"',

width: 640,

height: 480,

bitrate: 10000,

framerate: 30

}};

navigator.mediaCapabilities.decodingInfo(configuration).then((result) => {

// Use VP9 if supported, smooth and power efficient

vp9 = (result.supported && result.smooth && result.powerEfficient);

});

}

The demand for 360 videos and photos is gradually increasing, and social media has amplified the need for this type of video. Documentaries, adverts, the real estate industry and wedding events have also started adopting it. Just recently, Vimeo announced the launch of 360 video on its platform. Users can now stream and sell 360-degree video content.

In the future, there will be massive adoption of 360 video content across different platforms. Kodak, Giroptic and other vendors are developing better, higher-quality cameras and devices to capture a superior 360 video experience.

Mark Zuckerberg once stated that live video is the future. And he was not wrong! The likes of Facebook Live, Instagram Live and Twitch have turned more people into content creators and brought a lot more people closer to the screen. Apps such as Periscope, Meerkat, YouNow and Blab allow people to share their daily experiences live. In terms of users and revenue, YouTube, Facebook and Twitter have seen massive adoption and higher revenue as a result of adding live video features to their platforms.

Right now, with a good 4G connection, you can just tap on a button on these social media platforms and you are live. In the future, it will be easier for people even with 3G connections or less to stream live or participate in a live video session!

The mobile web can do now what mobile apps already have the capability to do. A very good example is the mini-Snapchat clone, the mustache app created by Francois Beaufort. This web app can be added and launched from the home screen.

With Mustache, you can record a video of yourself wearing a mustache, with shapes marking different parts of your face. It generates a preview of the recorded video, which you can then share with the world.

You need to enable the Experimental Web Platform features flag in your Chrome browser. Head over to chrome://flags and activate it from the list before trying to run the app.

Let’s analyze the APIs that made this mobile web app possible:

const faceDetector = new FaceDetector({ fastMode: true, maxDetectedFaces: 1 });

let faces = [];

let recorder;

let chunks = [];

let isDetectingFaces = false;

let easterEgg = false;

let showFace = false;

async function getUserMedia() {

// Grab camera stream.

const constraints = {

video: {

facingMode: 'user',

frameRate: 60,

width: 640,

height: 480,

}

};

video.srcObject = await navigator.mediaDevices.getUserMedia(constraints);

await video.play();

canvas.height = window.innerHeight;

canvas.width = window.innerWidth;

// HACK: Face Detector doesn't accept canvas whose width is odd.

if (canvas.width % 2 == 1) {

canvas.width += 1;

}

setTimeout(_ => { faces = []; }, 500);

draw();

}

The app relies on the FaceDetector API and on the mediaDevices API for camera video streaming. The mediaDevices API is available in Chrome, Firefox and Microsoft Edge, while FaceDetector is still an experimental web platform API.

Cloudinary provides an advanced Facial Attribute detection API as an add-on that you can use in your web applications.

The experimental Shape Detection API makes it possible to draw a mustache and hat on the screen at 60 fps while streaming the video. Meanwhile, the MediaRecorder API provides functionality to easily record media.
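To illustrate the recording side, here is a minimal sketch that records a media stream for a fixed duration and resolves with a WebM blob. It is not taken from the mustache app: the `recordStream` function and the injectable `RecorderClass` parameter are assumptions for this example.

```javascript
// Record `stream` for `durationMs` milliseconds and resolve with a WebM Blob.
// `RecorderClass` defaults to the browser's MediaRecorder.
function recordStream(stream, durationMs, RecorderClass = globalThis.MediaRecorder) {
  return new Promise((resolve, reject) => {
    const chunks = [];
    const recorder = new RecorderClass(stream, { mimeType: 'video/webm' });

    // Collect each non-empty chunk of encoded data as it becomes available.
    recorder.ondataavailable = (event) => {
      if (event.data && event.data.size > 0) {
        chunks.push(event.data);
      }
    };
    recorder.onerror = (event) => reject(event.error);

    // When recording stops, join the chunks into a single Blob.
    recorder.onstop = () => resolve(new Blob(chunks, { type: 'video/webm' }));

    recorder.start();
    setTimeout(() => recorder.stop(), durationMs);
  });
}

// Usage in a browser, with a camera stream from getUserMedia():
//
//   const stream = await navigator.mediaDevices.getUserMedia({ video: true });
//   const blob = await recordStream(stream, 5000);
```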

{note} Cloudinary provides an advanced OCR Text Detection and Extraction API as an add-on that you can use in your web applications. {/note}

In the source code of the mustache app, this is how the blob, which is the recorded video, is uploaded to the cloud:

async function uploadVideo(blob) {

const url = new URL('https://www.googleapis.com/upload/storage/v1/b/pwa-mustache/o');

url.searchParams.append('uploadType', 'media');

url.searchParams.append('name', new Date().toISOString() + '.webm');

// Upload video to Google Cloud Storage.

const response = await fetch(url, {

method: 'POST',

body: blob,

headers: new Headers({

'Content-Type': 'video/webm',

'Content-Length': blob.size

})

});

const data = await response.json();

shareButton.dataset.url = `https://storage.googleapis.com/${data.bucket}/${data.name}`;

}

With Cloudinary, you can upload videos directly from the browser to the cloud and have the video URL returned to you with a simple API. You can also apply transformations to the video.
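As a hedged sketch of what a direct browser upload to Cloudinary could look like, the function below posts the recorded blob to Cloudinary's upload endpoint using an unsigned upload preset. The `uploadToCloudinary` name is hypothetical, and `cloudName` and `uploadPreset` are placeholders for values from your own account.

```javascript
// Upload a recorded Blob to Cloudinary with an unsigned upload preset.
// `fetchFn` defaults to the global fetch but can be injected.
async function uploadToCloudinary(
  blob,
  cloudName,
  uploadPreset,
  fetchFn = (url, init) => fetch(url, init)
) {
  const form = new FormData();
  form.append('file', blob);
  form.append('upload_preset', uploadPreset);

  const response = await fetchFn(
    `https://api.cloudinary.com/v1_1/${cloudName}/video/upload`,
    { method: 'POST', body: form }
  );
  if (!response.ok) {
    throw new Error(`Upload failed with status ${response.status}`);
  }

  // The JSON response includes the HTTPS delivery URL of the uploaded video.
  const data = await response.json();
  return data.secure_url;
}
```

Unsigned presets have to be enabled in the account settings first. Signed uploads are the alternative when the upload should be authorized by your own server.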

The APIs mentioned in the building of this app are pretty experimental and are currently being developed. But we expect they will become mainstream and stable in the future. Check out the source code for the full implementation.

Over the last few years, the web has seen a lot of innovation and advancement. Adobe will stop supporting Flash in 2020, HTML5 is now mainstream, and several APIs have been, or are currently being, developed to bring native capabilities to the web. Better video codecs have come on board to ensure smoother and more efficient delivery and display of audio and video to users.

I’m really excited for what the future holds. Much of the information in this article came from Google I/O’s powerful session on the future of audio and video on the web. Many thanks to Google and the developer community for always championing the progressive movement of the web.

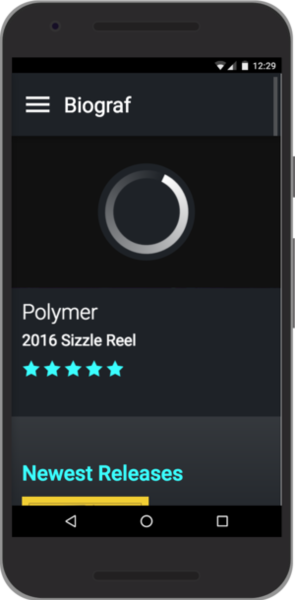

Finally, a huge shout out to the amazing Paul Lewis for developing the progressive video-on-demand Biograf app. The source code is available on GitHub.
