The last time you scrolled through the feed on your favorite social site, chances are that some videos caught your attention, and chances are, they were playing silently.

On the other hand, what was your reaction the last time you opened a web page and a video unexpectedly began playing with sound? If you are anything like me, the first thing you did was to quickly hunt for the fastest way to pause the video, mute the sound, or close the page entirely, especially if you were in a public place at the time.

If you recognize yourself in these scenarios, you are far from alone. A large share of viewers on social sites and other media-heavy platforms watch video without sound. In fact, studies from 2016 found that around 85% of video on Facebook was viewed with the sound off.

But when you are the developer of a website or mobile app with lots of user-generated video content, the expectation of silent video becomes a challenge. Your users who upload videos of recipes, art projects, makeup tips, travel recommendations, or how to…[anything] generally rely on spoken explanations to capture and keep their viewers' attention.

The solution? Subtitles, of course. Even better? Auto-generated subtitles!

Cloudinary, the leader in end-to-end image and video media management, has released the Google AI Video Transcription Add-on, so you can easily offer automatically generated subtitles for your users’ (or your own) videos.

This is part of a series of articles about video optimization.

When people scroll through posts or search results with multiple autoplaying videos, each video has only a second or two to capture viewers’ attention. Since creators can’t rely on sound in most cases, text captions are almost mandatory: they get viewers interested, keep them watching, and may even persuade them to click the video and watch it to the end, with or without sound.

The Video Transcription add-on lets you request automatic voice transcription when you upload a video (or for any video already in your account). The request generates a file containing the full transcript of your video, aligned to the timing of each spoken word.

The auto-generated subtitles are created using Google’s Cloud Speech API, which applies Google’s continuously improving AI algorithms to maximize the quality of the speech recognition results.

When you deliver the video, you can automatically include its transcript in the form of subtitles.

To request the transcript of a video on upload (once you’ve registered for the transcription add-on), set the `raw_convert` upload parameter to `google_speech`. Since it can sometimes take a while to get the transcript back from Google, you may also want to add a `notification_url` to the request, so that your application is notified when the transcript is ready.
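A minimal sketch of that upload request with the Cloudinary Ruby gem is shown below. It assumes the `cloudinary` gem is installed and configured with your account credentials (for example through the `CLOUDINARY_URL` environment variable). The helper names `transcription_upload_options` and `upload_with_transcript`, the file `lincoln.mp4`, and the notification endpoint are hypothetical placeholders.

```ruby
# Hypothetical helper: builds the upload options described in the text.
#   resource_type: :video        -> upload the file as a video asset
#   raw_convert: "google_speech" -> ask the add-on to generate a .transcript file
#   notification_url             -> your endpoint, called when the transcript is ready
def transcription_upload_options(notification_url)
  {
    resource_type: :video,
    raw_convert: "google_speech",
    notification_url: notification_url
  }
end

# Hypothetical wrapper around the SDK upload call. The gem is only required
# when this method runs, so the options helper above works without it.
def upload_with_transcript(path, notification_url)
  require "cloudinary"
  Cloudinary::Uploader.upload(path, transcription_upload_options(notification_url))
end

# Usage (needs credentials and network access):
#   upload_with_transcript("lincoln.mp4", "https://example.com/cloudinary/notify")
```

Keeping the options in a plain hash makes them easy to inspect or reuse for videos already in your account.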

Once you’ve verified that the raw `.transcript` file has been generated, you can deliver your video with subtitles: just add a subtitles overlay that references the transcript file, which has the same public ID as the video but with a `.transcript` extension.
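Here is a minimal sketch of such a delivery URL, built by hand in Ruby rather than through the SDK's URL helpers. It assumes Cloudinary's standard delivery layout (`https://res.cloudinary.com/<cloud_name>/video/upload/<transformations>/<public_id>.<format>`) and the `l_subtitles:<public_id>.transcript` overlay syntax; `subtitled_video_url` is a hypothetical helper, and `demo`/`lincoln` are example values.

```ruby
# Hypothetical helper: delivery URL that overlays the transcript as subtitles.
# Assumption: folder slashes in an overlay's public ID are written as colons.
def subtitled_video_url(cloud_name, public_id, format: "mp4")
  overlay_id = public_id.tr("/", ":")
  "https://res.cloudinary.com/#{cloud_name}/video/upload/" \
    "l_subtitles:#{overlay_id}.transcript/#{public_id}.#{format}"
end

puts subtitled_video_url("demo", "lincoln")
# prints https://res.cloudinary.com/demo/video/upload/l_subtitles:lincoln.transcript/lincoln.mp4
```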

If you want to get a little fancier, you can also customize the text color, outline color, and display location (gravity) of the subtitles.
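A sketch of a styled variant follows. It assumes the subtitles overlay accepts `co_<color>` for the text color, `b_<color>` for the outline color, and `g_<gravity>` for its position, chained after the overlay with commas; please check these parameter names against Cloudinary's current subtitle documentation. `styled_subtitles_url` is a hypothetical helper name.

```ruby
# Hypothetical helper: subtitles overlay with styling parameters (assumed names):
#   co_<color> -> text color, b_<color> -> outline color, g_<gravity> -> position
# Gravity is optional; when omitted, no g_ parameter is added.
def styled_subtitles_url(cloud_name, public_id, text_color:, outline_color:, gravity: nil, format: "mp4")
  overlay = [
    "l_subtitles:#{public_id.tr('/', ':')}.transcript",
    "co_#{text_color}",
    "b_#{outline_color}",
    (gravity && "g_#{gravity}")
  ].compact.join(",")
  "https://res.cloudinary.com/#{cloud_name}/video/upload/#{overlay}/#{public_id}.#{format}"
end

puts styled_subtitles_url("demo", "lincoln", text_color: "yellow", outline_color: "black", gravity: "north")
# prints https://res.cloudinary.com/demo/video/upload/l_subtitles:lincoln.transcript,co_yellow,b_black,g_north/lincoln.mp4
```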

Subtitles are great, but if you already have the transcript file, why not parse it into an HTML version that you can show on your web page? That makes your video content more skimmable and SEO-friendly.

Here’s a really simple Ruby script that does exactly that:

```ruby
require 'json'
require 'cgi'

class TranscriptUtil
  # Converts a .transcript file (JSON) into a simple HTML table.
  #   transcript_file - path of the input .transcript file
  #   html_file       - path of the HTML output file
  #   max_words       - max words per timestamped row (default 40)
  #   break_counter   - line break after this many words within a row (default 10)
  def convert(transcript_file, html_file, max_words = 40, break_counter = 10)
    # read and parse the transcript: an array of excerpts, each with a 'words' list
    data = JSON.parse(File.read(transcript_file))

    rows = []           # each row: { time: "HH:MM:SS", lines: [[word, ...], ...] }
    word_index = 0      # words seen so far across the whole transcript
    words_in_line = 0   # words in the current line of the current row

    data.each do |excerpt|
      excerpt['words'].each do |entry|
        # every max_words words, start a new row stamped with this word's start time
        if word_index % max_words == 0
          start_time = Time.at(entry['start_time'].to_f).utc.strftime('%H:%M:%S')
          rows << { time: start_time, lines: [[]] }
          words_in_line = 0
        end

        # break longer rows into lines of break_counter words
        if words_in_line == break_counter
          rows.last[:lines] << []
          words_in_line = 0
        end

        rows.last[:lines].last << CGI.escapeHTML(entry['word'].to_s.strip)
        word_index += 1
        words_in_line += 1
      end
    end

    # build the HTML content: one table row per timestamp
    html = ['<table>']
    rows.each do |row|
      text = row[:lines].map { |line| line.join(' ') }.join('<br>')
      html << "<tr><td>--- #{row[:time]} ---</td><td>#{text}</td></tr>"
    end
    html << '</table>'

    # save the HTML content (File.write opens, writes and closes the file)
    File.write(html_file, html.join("\n") + "\n")
  end
end
```

If you save the script as `transcript_to_html.rb`, you can run it with the following command:

```shell
ruby -r "./transcript_to_html.rb" -e "TranscriptUtil.new.convert('lincoln.transcript','./lincoln_transcript.html',20,10)"
```

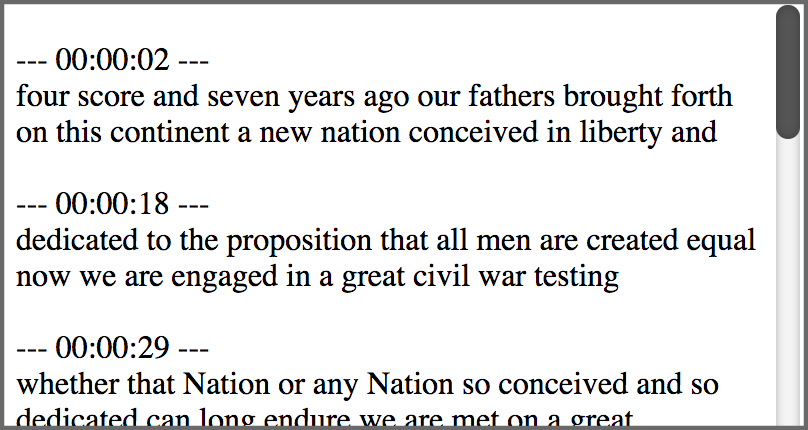

Code language: JavaScript (javascript)This very simple script outputs basic HTML that looks like this:

Of course, for a production version you would generate something that looks much nicer. We’ll leave the creative design to you.

If you are feeling particularly adventurous, you can even synchronize the textual display of the transcript with the video player, so that your viewers can skim the text and jump to the point in the video that most interests them. You could also sync the other way, making the displayed text scroll as the video plays and highlighting the currently playing excerpt.

Demonstrating these capabilities is beyond the scope of this post, but we challenge you to try it yourself! We’ve given you everything you need:

The Cloudinary Video Player can capture events and trigger operations on a video. Use the player together with the Google-powered AI Video Transcription add-on, add a bit of JavaScript magic, and you’ll be on your way to an impressive synchronized transcript viewer on par with YouTube and other big players in the video space.
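The player-side code would be JavaScript, but the lookup at the heart of both sync directions is the same in any language: given a playback time, find the word being spoken. Here is a minimal Ruby sketch of that lookup, assuming the transcript structure the script above reads (an array of excerpts whose `words` entries carry `word` and `start_time`). `word_timeline` and `word_index_at` are hypothetical helper names, and the sample timings are made up for illustration.

```ruby
require "json"

# Hypothetical helper: flattens a parsed transcript into [start_time, word]
# pairs sorted by start time.
def word_timeline(transcript)
  transcript.flat_map { |excerpt| excerpt["words"] }
            .map { |w| [w["start_time"].to_f, w["word"].to_s.strip] }
            .sort_by(&:first)
end

# Index of the word being spoken at `seconds`: the last word whose start time
# is <= seconds, or nil before the first word. Binary search, O(log n).
def word_index_at(timeline, seconds)
  first_after = timeline.bsearch_index { |start, _word| start > seconds } || timeline.length
  first_after.zero? ? nil : first_after - 1
end

# Illustrative sample with invented timings
sample = JSON.parse('[{"words": [{"word": "Four", "start_time": 0.5},
                                 {"word": "score", "start_time": 0.9},
                                 {"word": "and", "start_time": 1.3}]}]')
timeline = word_timeline(sample)
puts timeline[word_index_at(timeline, 1.0)][1]   # prints score
```

Going the other way, a click on a word only needs that word's `start_time` to seek the player.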

As it becomes more and more commonplace to use videos as a way to share information and experiences, the competition to win viewers’ attention becomes increasingly tough. The Video Transcription add-on is a great way to offer your users automatic subtitles for their uploaded videos, so they can grab their audience’s attention as soon as their silent video begins to autoplay. Oh, and it’s great for podcasts too.

To watch it in action, jump over to the Cloudinary Video Transcoding Demo. Select one of the sample videos or upload your own, and then scroll down to the Auto Transcription section to see the transcription results. And while you’re there, check out the many cool video transformation examples as well as a demonstration of the Cloudinary Video Tagging add-on.

But the real fun is in trying it yourself! If you don’t have a Cloudinary account yet, sign up for free. We’d love to see your demos of the sync implementation suggested above. Please add a link in the comments to show off your results!
