Google AI Video Transcription

Last updated: Jul-21-2025

Cloudinary is a cloud-based service that provides an end-to-end image and video management solution including uploads, storage, transformations, optimizations and delivery. Cloudinary's video solution includes a rich set of video transformation capabilities, including cropping, overlays, optimizations, and a large variety of special effects.

With the Google AI Video Transcription add-on, you can automatically generate speech-to-text transcripts of videos that you or your users upload to your product environment. The add-on applies powerful neural network models to your videos using Google's Cloud Speech API to get the best possible speech recognition results. The add-on supports transcribing videos in almost any language.

You can parse the contents of the returned transcript file to display the transcript of your video on your page, making your content more skimmable, accessible, and SEO-friendly.

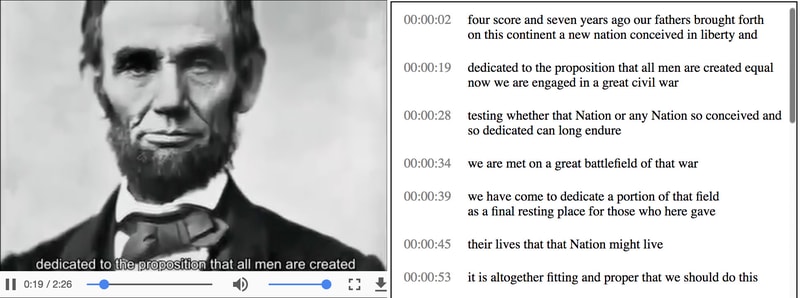

When you deliver the videos, it only takes a single URL parameter to automatically insert the generated transcript into your video in the form of subtitles, which are exactly aligned to the timing of each spoken word. Alternatively, you can specify the (optionally) returned vtt or srt file as a video track so that users can toggle the subtitles on or off.

Getting started

Before you can use the Google AI Video Transcription add-on:

You must have a Cloudinary account. If you don't already have one, you can sign up for a free account.

Register for the add-on: make sure you're logged in to your account and then go to the Add-ons page. For more information about add-on registrations, see Registering for add-ons.

Keep in mind that many of the examples on this page use our SDKs. For SDK installation and configuration details, see the relevant SDK guide.

If you're new to Cloudinary, you may want to take a look at the Developer Kickstart for a hands-on, step-by-step introduction to a variety of features.

Requesting video transcription

To request a transcript for a video or audio file (in the default US English language), include the raw_convert parameter with the value google_speech in your upload or update call. (For other languages, see transcription languages below.)

For example:
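The following is a minimal sketch of the options for such an upload call, not a definitive implementation. The helper name `transcription_options` and the file name `lincoln.mp4` are illustrative assumptions; only the `raw_convert` value `google_speech` and the optional `notification_url` parameter come from this page.

```python
def transcription_options(notification_url=None):
    """Build keyword options for an upload call that requests a transcript."""
    options = {
        "resource_type": "video",
        "raw_convert": "google_speech",  # default US English transcription
    }
    if notification_url is not None:
        # Optional: URL that is notified when the asynchronous job completes.
        options["notification_url"] = notification_url
    return options


options = transcription_options()
print(options)

# Assumption: with the Cloudinary Python SDK installed and configured,
# these options could be passed along as
#   cloudinary.uploader.upload("lincoln.mp4", **options)
```

Keeping the options in a plain dictionary makes it easy to reuse the same request settings for both upload and update calls.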

Learn more: Upload presets

The google_speech parameter value activates a call to Google's Cloud Speech API, which is performed asynchronously after your original method call is completed. Thus your original method call response displays a pending status:
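As a rough sketch, you could read the pending status out of the call response as shown here. The nested `info` / `raw_convert` / `google_speech` / `status` layout and the sample values are assumptions about the response shape, so check them against a real response before relying on them.

```python
def transcription_status(response):
    """Return the google_speech status from a response dict, or None if absent."""
    info = response.get("info", {})
    return info.get("raw_convert", {}).get("google_speech", {}).get("status")


# Hypothetical, trimmed-down response used only for illustration.
sample_response = {
    "public_id": "lincoln",
    "resource_type": "video",
    "info": {"raw_convert": {"google_speech": {"status": "pending"}}},
}

print(transcription_status(sample_response))  # prints: pending
```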

When the google_speech request is complete (which may take several seconds or minutes, depending on the length of the video), a new raw file is created in your product environment with the same public ID as your video or audio file and with the .transcript file extension. You can additionally request a standard subtitle format such as 'vtt' or 'srt'.

If you also provided a notification_url in your method call, the specified URL then receives a notification when the process completes:
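A notification handler might check the payload along these lines. This is a sketch under assumptions: the field names `info_kind`, `info_status`, and `notification_type`, and the sample payload itself, are illustrative and should be verified against an actual notification.

```python
import json


def is_transcript_ready(body):
    """Return True if a notification body reports a completed google_speech job."""
    payload = json.loads(body)
    return (
        payload.get("info_kind") == "google_speech"
        and payload.get("info_status") == "complete"
    )


# Hypothetical notification body, used only for illustration.
sample_body = json.dumps({
    "notification_type": "info",
    "info_kind": "google_speech",
    "info_status": "complete",
    "public_id": "lincoln",
    "resource_type": "video",
})

print(is_transcript_ready(sample_body))  # prints: True
```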

Transcription languages

If your video/audio file is in a language other than US English, you can request transcription in the relevant language and (optionally) region/dialect.

For example, to request a video transcript in Canadian French when uploading the video abt_cloudinary_french.mp4:
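A minimal sketch of how the language code could be appended to the `raw_convert` value. The helper name `raw_convert_for_language` is hypothetical; the `google_speech:<language>` form follows the colon-separated syntax used elsewhere on this page.

```python
def raw_convert_for_language(language_code=None):
    """Return the raw_convert value, optionally with a language (and region) code."""
    if not language_code:
        return "google_speech"  # falls back to the default, US English
    return f"google_speech:{language_code}"


options = {
    "resource_type": "video",
    "raw_convert": raw_convert_for_language("fr-CA"),  # Canadian French
}
print(options["raw_convert"])  # prints: google_speech:fr-CA

# Assumption: with the Cloudinary Python SDK installed and configured,
#   cloudinary.uploader.upload("abt_cloudinary_french.mp4", **options)
```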

You can specify either the two-character language code alone or the full language and region code. For a full list of supported language and region codes, see the Google Cloud speech-to-text language support list.

Cloudinary transcript files

The created .transcript file includes details of the audio transcription, for example:

Each excerpt of text has a confidence value, and is followed by a breakdown of individual words and their specific start and end times.
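To display the transcript on a page, you could parse the file roughly as follows. This sketch assumes the file is a JSON array of excerpts with `transcript`, `confidence`, and `words` fields, where each word carries `start_time` and `end_time` in seconds. Those field names and the sample timings are assumptions for illustration, not a confirmed schema.

```python
import json

# Hypothetical sample; field names and timings are illustrative assumptions.
SAMPLE_TRANSCRIPT = """
[
  {
    "transcript": "Four score and seven years ago",
    "confidence": 0.95,
    "words": [
      {"word": "Four", "start_time": 0.0, "end_time": 0.4},
      {"word": "score", "start_time": 0.4, "end_time": 0.9},
      {"word": "and", "start_time": 0.9, "end_time": 1.1},
      {"word": "seven", "start_time": 1.1, "end_time": 1.5},
      {"word": "years", "start_time": 1.5, "end_time": 1.9},
      {"word": "ago", "start_time": 1.9, "end_time": 2.3}
    ]
  }
]
"""


def excerpt_span(excerpt):
    """Return (start, end) of an excerpt from its first and last word, or None."""
    words = excerpt.get("words", [])
    if not words:
        return None
    return words[0]["start_time"], words[-1]["end_time"]


def render_transcript(excerpts):
    """Turn excerpts into display lines prefixed with their time range."""
    lines = []
    for excerpt in excerpts:
        span = excerpt_span(excerpt)
        prefix = f"[{span[0]:.1f}s - {span[1]:.1f}s] " if span else ""
        lines.append(prefix + excerpt["transcript"])
    return lines


excerpts = json.loads(SAMPLE_TRANSCRIPT)
for line in render_transcript(excerpts):
    print(line)
```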

Subtitle length and confidence levels

Google returns transcript excerpts of varying lengths. When displaying subtitles, long excerpts are automatically divided into 20-word entries and displayed on two lines.

You can also optionally set a minimum confidence level for your subtitles, for example: l_subtitles:my-video-id.transcript:90. In this case, any excerpt that Google returns with a lower confidence value will be omitted from the subtitles. Keep in mind that in some cases, this may exclude several sentences at once.
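The splitting and filtering behavior described above can be approximated locally, for example to preview which excerpts a given threshold would drop. This is only a sketch of the described behavior, not Cloudinary's implementation. It assumes that confidence values in the file are fractions between 0 and 1 and that the URL threshold is a percentage; the helper names are hypothetical.

```python
def subtitles_layer(transcript_public_id, min_confidence=None):
    """Build the l_subtitles component, optionally with a minimum confidence."""
    layer = f"l_subtitles:{transcript_public_id}"
    if min_confidence is not None:
        layer += f":{min_confidence}"
    return layer


def subtitle_entries(excerpts, min_confidence=0, words_per_entry=20):
    """Drop low-confidence excerpts, then split the rest into fixed-size entries."""
    entries = []
    for excerpt in excerpts:
        # Assumption: file confidence is 0..1, threshold is a percentage.
        if excerpt["confidence"] < min_confidence / 100:
            continue
        words = excerpt["transcript"].split()
        for start in range(0, len(words), words_per_entry):
            entries.append(" ".join(words[start:start + words_per_entry]))
    return entries


# Hypothetical excerpts: one long and confident, one short and uncertain.
sample_excerpts = [
    {"transcript": " ".join(f"w{i}" for i in range(45)), "confidence": 0.97},
    {"transcript": "mumbled words", "confidence": 0.62},
]

print(subtitles_layer("my-video-id.transcript", 90))
print(len(subtitle_entries(sample_excerpts, min_confidence=90)))
```

With the sample data, the 45-word excerpt yields three entries (20, 20, and 5 words), and the second excerpt is omitted entirely because it falls below the threshold.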

Generating standard subtitle formats

If you want to include the transcript as a separate track for a video player, you can also request that Cloudinary create an SRT and/or WebVTT raw file by including the srt and/or vtt qualifiers (separated by a colon) with the google_speech value. For example, to upload a video and also request both srt and vtt files with the transcript:

When the request completes, there will be four files associated with the uploaded video in your product environment:

- If you also specify a language in the google_speech transcript request:
  - the request for format must be given before the language (e.g., google_speech:srt:vtt:ar-SA)
  - the transcript files will include the language and region code in the generated filename (e.g., lincoln.fr-FR.vtt).

- While Google's speech recognition artificial intelligence algorithm is very powerful, no speech recognition tool is 100% accurate. If exact accuracy is important for your video, you can download the generated .transcript, .srt or .vtt file, edit them manually, and overwrite the original files.

Important: Depending on your product environment setup, overwriting an asset may clear the tags, contextual, and structured metadata values for that asset. If you have a Master admin role, you can change this behavior for your product environment in the Media Library Preferences pane, so that these field values are retained when new version assets overwrite older ones (unless you specify different values for the tags, context, or metadata parameters as part of your upload).
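The ordering and naming rules for subtitle formats and languages described in this section can be sketched as follows. The helper names are hypothetical. The assumption that the .transcript file also carries the language infix is inferred from the note about generated filenames rather than confirmed.

```python
ALLOWED_FORMATS = ("srt", "vtt")


def raw_convert_value(formats=(), language=None):
    """Build the raw_convert value: formats first, language (if any) last."""
    for fmt in formats:
        if fmt not in ALLOWED_FORMATS:
            raise ValueError(f"unsupported subtitle format: {fmt}")
    parts = ["google_speech", *formats]
    if language:
        parts.append(language)
    return ":".join(parts)


def expected_raw_files(public_id, formats=(), language=None):
    """List the raw files expected once the request completes."""
    infix = f".{language}" if language else ""
    return [f"{public_id}{infix}.{ext}" for ext in ("transcript", *formats)]


print(raw_convert_value(["srt", "vtt"]))
print(raw_convert_value(["srt", "vtt"], "ar-SA"))
print(expected_raw_files("lincoln", ["srt", "vtt"]))
print(expected_raw_files("lincoln", ["vtt"], "fr-FR"))
```

Together with the uploaded video itself, the three raw files from the first `expected_raw_files` call account for the four files associated with the upload.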

Displaying transcripts as subtitle overlays

Cloudinary can automatically generate subtitles from the returned transcripts. To automatically embed subtitles with your video, add the subtitles property of the overlay parameter (l_subtitles in URLs), followed by the public ID of the raw transcript file (including the extension).

For example, the following URL delivers the public domain video of Lincoln's Gettysburg Address with automatically generated subtitles:
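A delivery URL of this kind could be assembled as in the sketch below. The cloud name `demo`, the public IDs, the `res.cloudinary.com` URL layout, and the trailing `fl_layer_apply` component are assumptions for illustration; only the `l_subtitles` overlay with the transcript's public ID comes from the text above.

```python
def subtitled_video_url(cloud_name, video_public_id, transcript_public_id,
                        extension="mp4"):
    """Build a delivery URL that overlays a transcript as subtitles."""
    return (
        f"https://res.cloudinary.com/{cloud_name}/video/upload/"
        f"l_subtitles:{transcript_public_id}/fl_layer_apply/"
        f"{video_public_id}.{extension}"
    )


print(subtitled_video_url("demo", "lincoln", "lincoln.transcript"))
```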

Formatting subtitle overlays

As with any subtitle overlay, you can use transformation parameters to make a variety of formatting adjustments when you overlay an automatically generated transcript file, including choice of font, font size, fill and outline color, and gravity.

For example, these subtitles are displayed using the Impact font, size 15, in a khaki color with a dark brown background, and located on the bottom left (south_west) instead of the default centered alignment:
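The formatting described above might translate into a transformation string like the one built here. Treat it as a sketch: the exact parameter order, the placement of gravity alongside `fl_layer_apply`, and the `rgb:331a00` value chosen for dark brown are assumptions, and the helper name is hypothetical.

```python
def styled_subtitles_transformation(transcript_public_id, font, size,
                                    color=None, background=None, gravity=None):
    """Build a subtitle overlay transformation with optional styling."""
    layer = [f"l_subtitles:{font}_{size}:{transcript_public_id}"]
    if color:
        layer.append(f"co_{color}")       # text fill color
    if background:
        layer.append(f"b_{background}")   # background behind the text
    apply = ["fl_layer_apply"]
    if gravity:
        apply.append(f"g_{gravity}")      # where the subtitles are placed
    return ",".join(layer) + "/" + ",".join(apply)


transformation = styled_subtitles_transformation(
    "lincoln.transcript", "impact", 15,
    color="khaki", background="rgb:331a00", gravity="south_west",
)
print(transformation)
```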

Displaying transcripts as a separate track

Instead of embedding a transcript in your video as an overlay, you can add the returned vtt or srt transcript files as a separate track for a video player. This way, the subtitles can be controlled (toggled on/off) separately from the video itself. For example, to add the video and transcript sources for an HTML5 video player:
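The sketch below generates such markup with a standard `<video>` element and a `<track>` child pointing at the vtt file. The URLs, the `demo` cloud name, the `crossorigin` attribute, and the label and language defaults are illustrative assumptions rather than values taken from this page.

```python
from html import escape


def video_with_subtitles_html(video_url, track_url, label="English", srclang="en"):
    """Return HTML5 video markup with a subtitle track the viewer can toggle."""
    return "\n".join([
        '<video crossorigin="anonymous" controls>',
        f'  <source src="{escape(video_url, quote=True)}" type="video/mp4">',
        f'  <track kind="subtitles" label="{escape(label, quote=True)}" '
        f'srclang="{escape(srclang, quote=True)}" '
        f'src="{escape(track_url, quote=True)}" default>',
        "</video>",
    ])


markup = video_with_subtitles_html(
    "https://res.cloudinary.com/demo/video/upload/lincoln.mp4",  # hypothetical
    "https://res.cloudinary.com/demo/raw/upload/lincoln.vtt",    # hypothetical
)
print(markup)
```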

textTracks parameter.