When the JPEG codec was being developed in the late 1980s, no standardized, lossy image-compression formats existed. JPEG became ready at exactly the right time in 1992, when the World Wide Web and digital cameras were about to become a thing. The introduction of HTML’s <img> tag in 1995 ensured the recognition of JPEG as the web format—at least for photographs. During the 1990s, digital cameras replaced analog ones and, given the limited memory capacities of that era, JPEG became the standard format for photography, especially for consumer-grade cameras.

Since then, there’ve been several attempts to “replace JPEG” with a new and enhanced codec:

- JPEG 2000, which, after finding its niche in medical imagery, digital cinema, and, to a degree, the Apple ecosystem, did not attain wide adoption.

- JPEG XR (aka WDP), which never took off outside the Microsoft ecosystem.

- Google’s WebP, which was designed for web images and which, disappointingly, took 10 years to win support from all major browsers. Its adoption is still way lower than that of JPEG.

- The patent-encumbered HEIC, which is unlikely to be enthusiastically embraced outside the Apple ecosystem.

- WebP 2, AVIF, and JPEG XL, newcomers all. Only time will tell if any of them will supersede JPEG.

Besides yielding stronger compression than JPEG, the above codecs offer novel technical features like support for alpha transparency. Yet none of them succeeded in replacing JPEG. It’s important to understand why they failed and what it will take to succeed.

JPEG moved the needle by a lot. It went from uncompressed images or weak lossless compression (the state of the art in the early 1980s) to an actual lossy codec, which dramatically reduced file sizes and made adopting it a no-brainer. To put things in perspective, that meant waiting five seconds instead of one minute for an image to load, and storing 20 to 50 images instead of only one or two on a flash card. Basically, JPEG enabled web images and digital cameras, use cases that would have been impractical without it.

No new codec can ever match JPEG to that extent. Slightly stronger compression is not enough to justify the complications and manpower involved in updating all the image-related software and platforms, which is nearly all software and all platforms. That’s probably partly why JPEG 2000 and JPEG XR never really took off. Even though they compress images more effectively than JPEG and offer nice features like alpha transparency and high bit-depth, the considerable transition costs by far overshadow the benefits.

Even if a new codec affords significant technical advantages over the old one, the following obstacles prevent a switch:

- The new codec might be patent encumbered and not royalty free. Examples are HEIC and BPG. Patent restrictions alone are a significant deterrent to universal adoption. Although all JPEG patents have already expired, only the royalty-free part of the original JPEG spec is in use.

- Free and open-source software (FOSS) implementations either do not exist or are inferior to proprietary software, which has long been a roadblock for JPEG 2000 adoption. Ditto for JPEG XR, whose open-source implementation is poorly maintained and not integrated into other FOSS, possibly because of licensing issues.

- Even if the new codec yields better results, the old one still outflanks it in some respects. Three examples:

  - PNG, for lack of animation, has failed to fully replace GIF as originally intended.

  - WebP forces 4:2:0 chroma subsampling, does not support progressive decoding, and limits image dimensions.

  - JPEG 2000 is complex and often slower than JPEG.

- Ever present is the chicken-and-egg problem of convincing browsers and other software to support new codecs. If nobody is using the new codec yet, why should they support it? And if they don’t support it, why would anyone use it?

Above all, a major obstacle is that existing software already supports JPEG, and JPEG images abound. Unlike the adoption of JPEG, which started from a clean slate, replacing an old codec with a new one involves a transition process. Transition problems are a fact of life.

Suppose a new Codec X can save 50 percent of the bytes, shrinking a 1-GB JPEG album to only 500 MB at the same fidelity. Great, right? Absolutely, if you were creating your digital images from scratch, but that’s not how it works.

In this scenario, the 1-GB album of JPEGs is your baseline, and the original images are long gone, so you can’t encode them afresh with Codec X. Of course, you can decode the existing JPEGs to pixels and then encode those pixels with Codec X, which is a typical transcoding process. But that approach is risky because it causes generation loss: the artifacts of Codec X pile up on top of the existing JPEG artifacts.

Additionally, you must pinpoint the optimal quality setting for the Codec X encoding. Too low a setting would cause the already lossy image to become even lossier, possibly ruining the image. Too high a setting would make the Codec X file larger than the original JPEG file. In particular, if the JPEG was already of relatively low quality, then Codec X might actually need to expend many bytes to preserve the JPEG artifacts, again potentially leading to a bigger file than the original JPEG. As a remedy, you could configure Codec X with a quality setting low enough to produce a smaller file, but then you’d degrade an already lossy image even further.
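To see why transcoding compounds the loss, here is a toy one-dimensional model. It is an assumption for illustration, not how JPEG or any real Codec X works: each codec is reduced to uniform scalar quantization (snapping values to multiples of a step size), loosely like what JPEG does to its DCT coefficients. The ramp signal and the step sizes 4 and 5 are arbitrary choices.

```python
def quantize(values, step):
    """Toy lossy 'codec': snap each value to the nearest multiple of step."""
    return [round(v / step) * step for v in values]


def mean_abs_error(a, b):
    """Average absolute difference between two equally long sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical original signal: a fine ramp from 0 to just under 100.
original = [i / 100 for i in range(10_000)]

jpeg = quantize(original, 4.0)        # legacy JPEG: the only copy still available
transcoded = quantize(jpeg, 5.0)      # Codec X applied to the decoded JPEG
direct = quantize(original, 5.0)      # Codec X applied to the (lost) original

err_jpeg = mean_abs_error(original, jpeg)
err_transcoded = mean_abs_error(original, transcoded)
err_direct = mean_abs_error(original, direct)

print(f"JPEG only:            {err_jpeg:.2f}")
print(f"Codec X from JPEG:    {err_transcoded:.2f}")
print(f"Codec X from pixels:  {err_direct:.2f}")
```

In this toy model, the transcoded error ends up larger than both the JPEG’s own error and what Codec X would achieve from the original pixels, because the two quantization grids don’t line up. Real codecs are far more complex, but the same mismatch is what drives generation loss.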

In short, converting a lossy image collection to a new lossy codec while preserving fidelity, reducing storage, and automating the task is a daunting undertaking. Trillions of JPEG images are around (over a trillion were created in each of the past five years), yet most new codecs cannot handle them satisfactorily. The safest option is to keep those images as they are and encode only new images with the new codec. However, if your goal is to replace JPEG, that’s far from ideal.

Transitioning to a new image format takes a long time, during which only part of your audience will have updated their software to support the new format. Navigating this transition period is the main challenge in adopting new codecs.

During the changeover, you need the files for both the old JPEG and the new Codec X—the former for those who haven’t upgraded yet and the latter for those who have and can benefit from the reduced bandwidth. For the example of the 1-GB JPEG album, you must retain it plus 500 MB of Codec X images. So, despite the bandwidth savings from serving smaller images to some people, the stronger compression paradoxically results in more storage: 1.5 GB instead of 1 GB!

And that’s assuming that Codec X can actually reduce the file size by 50 percent. The newest generation of codecs (AVIF, HEIC, WebP 2, and JPEG XL) can indeed do that, but not the previous generations (JPEG 2000, JPEG XR, WebP), which can deliver a saving of only 20–30 percent. For the 1-GB example, that means storage grows from 1 GB to 1.7–1.8 GB.
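The storage arithmetic in the last two paragraphs fits in a tiny function, assuming (as the text does) that every JPEG must be kept alongside its Codec X copy for the whole transition. The savings rates are the ones quoted above; `transition_storage_gb` is a hypothetical helper name.

```python
def transition_storage_gb(jpeg_gb, savings):
    """Total storage when both the JPEG originals and the Codec X copies are kept.

    savings is the fraction of bytes Codec X saves relative to JPEG (0.5 = 50%).
    """
    codec_x_gb = jpeg_gb * (1 - savings)
    return jpeg_gb + codec_x_gb


for savings in (0.5, 0.3, 0.2):
    total = transition_storage_gb(1.0, savings)
    print(f"{savings:.0%} savings -> {total:.1f} GB during the transition")
# 50% savings -> 1.5 GB during the transition
# 30% savings -> 1.7 GB during the transition
# 20% savings -> 1.8 GB during the transition
```

The weaker the codec, the smaller the Codec X copy, yet the total never drops below the 1-GB baseline as long as the JPEGs must stay around.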

From its start, the JPEG XL Project took into account those transition problems and designed the format to overcome them as much as feasible.

JPEG XL is a superset of JPEG. Most importantly, even with its many under-the-hood coding tools that produce superior compression, the JPEG XL developers preserved all the JPEG coding tools. Consequently, all JPEG image data can also be represented as JPEG XL image data, thanks to the following:

- JPEG is based on the 8×8 discrete cosine transform (DCT) with fixed quantization tables. In contrast, JPEG XL boasts a much more powerful approach, which includes variable DCT sizes from 2×2 to 256×256 and adaptive quantization, of which the simple JPEG DCT is merely a special case.

- JPEG XL uses a novel internal color space (called XYB) for high-fidelity, perceptually optimized image encoding, but it can also handle the simple YCbCr color transformation applied by JPEG.

Hence, converting JPEGs to JPEG XL doesn’t require decoding them to pixels. Instead, the internal JPEG representation (the DCT coefficients) can be carried over directly into JPEG XL. Even though only the subset of JPEG XL that corresponds to JPEG is used in this case, the converted images are about 20 percent smaller. Crucially, those JPEG XL files represent exactly the same images as the original JPEG files. Plus, the conversion is reversible: you can restore the original JPEG files from those JPEG XL files with minimal computation, fast enough to do on the fly.
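The idea behind this reversible conversion can be sketched as follows: the quantized DCT coefficients are kept as exact integers, and only the way they are entropy-coded changes. Here Python’s `zlib` stands in for JPEG XL’s far more sophisticated entropy coder, and a synthetic list of integers stands in for a JPEG’s coefficient data. Both are assumptions for illustration only; the sketch does not model whatever extra data is needed to rebuild the original file byte for byte, and it does not reproduce the 20 percent figure.

```python
import struct
import zlib


def encode_coefficients(coeffs):
    """Losslessly pack quantized DCT coefficients (signed 16-bit ints) and compress them.

    zlib is only a stand-in for a real entropy coder; JPEG XL does not use it.
    """
    raw = struct.pack(f"<{len(coeffs)}h", *coeffs)
    return zlib.compress(raw, 9)


def decode_coefficients(blob):
    """Invert encode_coefficients exactly: decompress, then unpack the int16 values."""
    raw = zlib.decompress(blob)
    return list(struct.unpack(f"<{len(raw) // 2}h", raw))


# Synthetic coefficients for 100 8x8 blocks (64 values each): a few low-frequency
# values per block and mostly zeros, as is typical after JPEG quantization.
coeffs = []
for block in range(100):
    coeffs += [(block % 9) - 4, 12, -3, 2, 1] + [0] * 59

blob = encode_coefficients(coeffs)
restored = decode_coefficients(blob)

print("identical:", restored == coeffs)  # → identical: True
print("raw bytes:", 2 * len(coeffs), "compressed bytes:", len(blob))
```

Because the decoded coefficients are identical, the image they describe is identical too: unlike pixel-level transcoding, no second round of quantization ever happens.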

JPEG XL’s legacy-friendly feature is a game-changer for the transition problems described above. Besides saving both storage and bandwidth from the outset, you can also losslessly preserve legacy images while reaping more compression. In other words, JPEG XL offers only benefits from the start, whereas other approaches require sacrifices in storage to reduce bandwidth.

JPEG XL is the first “JPEG-replacement” candidate with a plausible transition path.

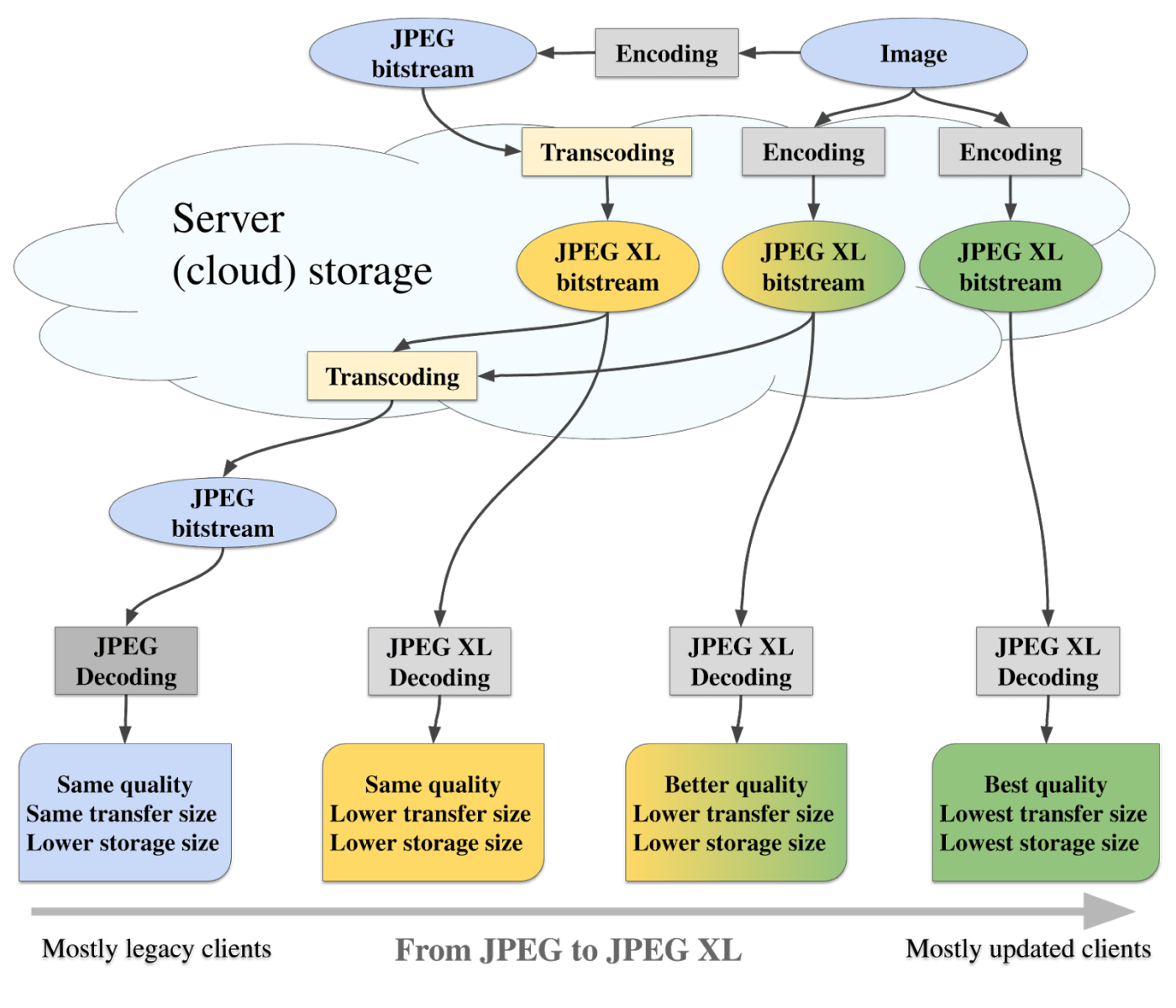

Initially, the server uses JPEG XL only to save storage, which is already a nontrivial incentive. Since few if any clients support JPEG XL yet, it serves only as a storage format: the server transcodes it back to JPEG on the fly and delivers JPEG.

Next, the server directly sends images in JPEG XL to clients that support it, saving both parties bandwidth and unobtrusively motivating clients to upgrade.

With enough clients upgraded, the server starts encoding new images directly in JPEG XL in a hybrid way: it still encodes only the JPEG-compatible subset of JPEG XL but also takes advantage of some JPEG XL features, like the adaptive edge-preserving filter and saliency progression. You can still transcode the resulting JPEG XL to a JPEG that looks similar, but the JPEG XL version has even fewer compression artifacts and loads faster. That’s yet another incentive for clients to upgrade to JPEG XL.

Finally, when (almost) all clients support JPEG XL, you go ahead with full-fledged encoding of JPEG XL, gaining substantial improvements in fidelity, bandwidth, storage, and user experience.
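A server following this staged plan has to decide, per request, which format to deliver. The sketch below assumes that clients signal support through the HTTP `Accept` header using the `image/jxl` media type; the `Stage` names and the `choose_delivery` function are hypothetical, and the actual transcoding is deliberately left out rather than tied to any specific library.

```python
from enum import Enum


class Stage(Enum):
    STORAGE_ONLY = 1   # JPEG XL at rest only; every client gets JPEG reconstructed on the fly
    SERVE_JXL = 2      # JPEG XL sent to clients that accept it, JPEG to the rest
    HYBRID = 3         # new images use the JPEG-compatible subset plus some JPEG XL extras
    FULL = 4           # (almost) all clients support JPEG XL; full-fledged encoding


def accepts_jxl(accept_header):
    """True if the Accept header explicitly lists image/jxl with a nonzero q-value.

    Wildcards such as */* are deliberately not treated as JPEG XL support.
    """
    for entry in accept_header.split(","):
        fields = [f.strip() for f in entry.split(";")]
        if fields[0].lower() != "image/jxl":
            continue
        q = 1.0
        for param in fields[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        return q > 0
    return False


def choose_delivery(stage, accept_header):
    """Pick the format to send for an image stored as JPEG XL."""
    if stage is Stage.STORAGE_ONLY:
        return "jpeg"
    # From stage 2 on, supporting clients get JPEG XL directly. In the later stages the
    # JPEG fallback is a similar-looking transcode rather than an exact reconstruction.
    return "jxl" if accepts_jxl(accept_header) else "jpeg"


print(choose_delivery(Stage.STORAGE_ONLY, "image/jxl,image/*"))       # → jpeg
print(choose_delivery(Stage.SERVE_JXL, "image/avif,image/jxl;q=0.9"))  # → jxl
print(choose_delivery(Stage.SERVE_JXL, "image/webp,*/*;q=0.8"))        # → jpeg
```

Treating `*/*` as support would be unsafe, because a client that sends a wildcard may still be unable to decode JPEG XL. If responses pass through caches, a `Vary: Accept` response header keeps them from handing a JPEG XL file to a client that asked without `image/jxl`.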

The JPEG Committee plans to submit the JPEG XL standard as a Final Draft International Standard (FDIS) in January 2021. If approved, ISO and IEC will publish it as an International Standard in July 2021. In the meantime, since only editorial changes are allowed with no technical modifications, adoption can start. Cloudinary, which already supports JPEG XL, will be ready as soon as the first browser supports it. What a thrill to look forward to!