As the world generates ever-larger volumes of visual data, effective image recognition technology becomes increasingly critical. But why is image recognition so important? The answer lies in the sheer volume of images. Raw, unprocessed images and videos can be overwhelming, making it difficult to extract meaningful information or automate tasks. Image recognition software is a crucial tool for analyzing data efficiently, improving security, and automating tasks that were once manual and time-consuming.

According to Statista Market Insights, the demand for image recognition technology is projected to grow annually by about 10%, reaching a market volume of about $21 billion by 2030. Image recognition technology has firmly established itself at the forefront of technological advancements, finding applications across various industries. In this article, we’ll explore the impact of AI image recognition, and focus on how it can revolutionize the way we interact with and understand our world.

In this article:

- Understanding AI in Image Recognition

- Advantages of AI-Powered Image Recognition

- Challenges in AI Image Recognition

- Innovations and Breakthroughs in AI Image Recognition

- Getting Started with AI Image Recognition and Cloudinary

Understanding AI in Image Recognition

In recent years, the field of AI has made remarkable strides, with image recognition emerging as a testament to its potential. Image recognition itself has existed for many years, but recent advances in machine learning models have made it far more accurate and accessible to a broader audience. By leveraging vision AI, systems can now interpret and analyze visual data with a level of accuracy that was previously out of reach. Additionally, the integration of generative AI is opening up new possibilities, enabling systems not only to recognize images but also to generate realistic visuals.

What is AI Image Recognition?

AI image recognition is a sophisticated technology that empowers machines to understand visual data, much like how our human eyes and brains do. In simple terms, it enables computers to “see” images and make sense of what’s in them, like identifying objects, patterns, or even emotions.

At its core, AI image recognition relies on two components that work together: machine learning models and neural networks.

- Machine Learning. A subfield of AI that serves as the foundation of image recognition. It equips computers with the ability to learn and adapt from vast datasets, making them progressively adept at recognizing visual patterns. This learning process, similar to human cognitive development, enables the AI to refine its recognition capabilities over time.

- Neural Networks. Complementing machine learning are neural networks, a class of algorithms that simulate the intricate workings of the human brain. Neural networks consist of layers of interconnected nodes, or “neurons,” each of which contributes to visual information processing. These networks excel at extracting complex features from images, allowing AI systems to differentiate between various objects, textures, and even subtleties in color and shape.
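To make the idea of "layers of interconnected nodes" concrete, here is a minimal sketch of a forward pass through a tiny two-layer network. The weights are hand-picked for illustration and are not trained, and the names `denseLayer` and `forward` are hypothetical; a real image recognition model would learn millions of weights from data.

```javascript
// Illustrative only: a two-layer network with hand-picked (not learned) weights.

// ReLU activation: negative sums are clipped to zero.
function relu(x) {
  return Math.max(0, x);
}

// One layer of "neurons": each neuron computes a weighted sum of all inputs,
// adds its bias, and passes the result through an activation function.
function denseLayer(inputs, weights, biases, activation) {
  return weights.map((neuronWeights, i) => {
    const sum = neuronWeights.reduce((acc, w, j) => acc + w * inputs[j], biases[i]);
    return activation(sum);
  });
}

// Forward pass for a 4-"pixel" input: a hidden layer of 2 neurons, then 1 output.
function forward(pixels) {
  const hidden = denseLayer(pixels, [[1, -1, 0, 0], [0, 0, 1, -1]], [0, 0], relu);
  const output = denseLayer(hidden, [[1, 1]], [0], (x) => x);
  return output[0];
}

console.log(forward([0.75, 0.25, 0.5, 0.25])); // prints 0.75
```

Training would consist of adjusting the numbers in the weight arrays until the outputs match labeled examples, which is the "learning" part described above.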

The combination of these two technologies is often referred to as “deep learning”, and it allows AI systems to “understand” and match patterns, as well as identify what they “see” in images. The more data they are given, the more accurate they tend to become.

Differences Between Traditional Image Processing and AI-Powered Image Recognition

Understanding the distinction between image processing and AI-powered image recognition is key to appreciating the depth of what artificial intelligence brings to the table. At its core, image processing is a methodology that involves applying various algorithms or mathematical operations to transform an image’s attributes. However, while image processing can modify and analyze images, it’s fundamentally limited to the predefined transformations and does not possess the ability to learn or understand the context of the images it’s working with.
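As a rough illustration of a predefined transformation, the sketch below converts RGB pixels to grayscale and then applies a fixed threshold. The function names and the tiny pixel array are hypothetical, and the luma weights are the commonly used BT.601 coefficients. Nothing here is learned: the same rule is applied to every image.

```javascript
// Classic image processing: fixed formulas, no learning involved.

// Convert [r, g, b] pixels (0-255) to grayscale using BT.601 luma weights.
function toGrayscale(pixels) {
  return pixels.map(([r, g, b]) => Math.round(0.299 * r + 0.587 * g + 0.114 * b));
}

// Binarize grayscale values: anything at or above the cutoff becomes white.
function threshold(grayValues, cutoff) {
  return grayValues.map((v) => (v >= cutoff ? 255 : 0));
}

// A hypothetical three-pixel "image": white, black, and pure red.
const pixels = [[255, 255, 255], [0, 0, 0], [255, 0, 0]];
const gray = toGrayscale(pixels);
console.log(gray, threshold(gray, 128));
```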

On the other hand, AI-powered image recognition takes the concept a step further. It’s not just about transforming or extracting data from an image; it’s about understanding and interpreting what that image represents in a broader context. For instance, AI image recognition technologies like convolutional neural networks (CNNs) can be trained to discern individual objects in a picture, perform face recognition, or even help diagnose diseases from medical scans.
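The building block of a CNN is the convolution: a small grid of weights (a kernel) slides over the image and produces a map of where a pattern occurs. The sketch below is a minimal version with a hand-set vertical-edge kernel standing in for weights that a CNN would learn during training. The function name `convolve2d` is hypothetical, and, as in most deep learning libraries, the kernel is not flipped (strictly speaking this is cross-correlation).

```javascript
// Slide a kernel over a 2D grayscale image (no padding, stride 1).
function convolve2d(image, kernel) {
  const kernelHeight = kernel.length;
  const kernelWidth = kernel[0].length;
  const outHeight = image.length - kernelHeight + 1;
  const outWidth = image[0].length - kernelWidth + 1;
  const output = [];
  for (let y = 0; y < outHeight; y++) {
    const row = [];
    for (let x = 0; x < outWidth; x++) {
      // Weighted sum of the image patch currently under the kernel.
      let sum = 0;
      for (let i = 0; i < kernelHeight; i++) {
        for (let j = 0; j < kernelWidth; j++) {
          sum += image[y + i][x + j] * kernel[i][j];
        }
      }
      row.push(sum);
    }
    output.push(row);
  }
  return output;
}

// A 4x4 image that is dark on the left and bright on the right.
const image = [
  [0, 0, 1, 1],
  [0, 0, 1, 1],
  [0, 0, 1, 1],
  [0, 0, 1, 1],
];
// Hand-set kernel that responds to dark-to-bright vertical edges.
const edgeKernel = [
  [-1, 1],
  [-1, 1],
];
console.log(convolve2d(image, edgeKernel)); // prints [ [ 0, 2, 0 ], [ 0, 2, 0 ], [ 0, 2, 0 ] ]
```

The output is non-zero only in the column where the edge sits. A trained CNN stacks many such kernels in layers, so later layers can respond to shapes and whole objects rather than simple edges.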

Advantages of AI-Powered Image Recognition

While it’s still a relatively new technology, the power of AI image recognition is hard to overstate. It has made an impact in several sectors, and for good reason. Let’s cover some of the biggest advantages.

Speed and Accuracy

One of the foremost advantages of AI-powered image recognition is its unmatched ability to process vast and complex visual datasets swiftly and accurately. Traditional manual image analysis methods pale in comparison to the efficiency and precision that AI brings to the table. AI algorithms can analyze thousands of images per second, even in situations where the human eye might falter due to fatigue or distractions.

Image Classification

Image classification is a cornerstone of AI-powered image recognition, enabling systems to categorize and label images with remarkable precision. By training an AI model on large datasets, this technology can distinguish between objects, patterns, or even subtle details within images, including the capability for face recognition.
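The last step of a typical classifier can be sketched in a few lines: the model produces one raw score per category, softmax turns the scores into probabilities, and the highest one becomes the label. The scores and labels below are made up for illustration, and `classify` is a hypothetical helper rather than part of any particular library.

```javascript
// Turn raw model scores into probabilities that sum to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Pick the label with the highest probability.
function classify(scores, labels) {
  const probabilities = softmax(scores);
  let best = 0;
  probabilities.forEach((p, i) => {
    if (p > probabilities[best]) best = i;
  });
  return { label: labels[best], confidence: probabilities[best] };
}

// Hypothetical scores a model might output for one image.
console.log(classify([2.0, 0.5, -1.0], ['bicycle', 'car', 'dog']));
```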

Real-Time Results

Additionally, AI image recognition systems excel in real-time recognition tasks, a capability that opens the door to a multitude of applications. Whether it’s identifying objects in a live video feed, recognizing faces for security purposes, or instantly translating text from images, AI-powered object recognition thrives in dynamic, time-sensitive environments. For example, in the retail sector, it enables cashier-less shopping experiences, where products are automatically recognized and billed in real-time. These real-time applications streamline processes and improve overall efficiency and convenience.

Scalability

Another remarkable advantage of AI-powered image recognition is its scalability. Unlike traditional image analysis methods that depend on hand-written rules for each case, AI systems can adapt to various visual content types and environments. Whether it’s recognizing handwritten text, identifying rare wildlife species in diverse ecosystems, or inspecting manufacturing defects in varying lighting conditions, AI image recognition can be trained and fine-tuned to perform well in a wide range of contexts.

Challenges in AI Image Recognition

While AI-powered image recognition offers a multitude of advantages, it is not without its share of challenges.

Security and Privacy

One of the foremost concerns in AI image recognition is the delicate balance between innovation and safeguarding individuals’ privacy. As these systems become increasingly adept at analyzing visual data, there’s a growing need to ensure that the rights and privacy of individuals are respected. When misused or poorly regulated, AI image recognition can lead to invasive surveillance practices, unauthorized data collection, and potential breaches of personal privacy. Striking a balance between harnessing the power of AI for various applications while respecting ethical and legal boundaries is an ongoing challenge that necessitates robust regulatory frameworks and responsible development practices.

Unintentional Biases

Another challenge faced by AI models today is bias. AI recognition algorithms are only as good as the data they are trained on. Unfortunately, biases inherent in training data or inaccuracies in labeling can result in AI systems making erroneous judgments or reinforcing existing societal biases. This challenge becomes particularly critical in applications involving sensitive decisions, such as facial recognition for law enforcement or hiring processes.
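One simple way to look for this kind of problem is to compare accuracy across groups in an evaluation set instead of reporting a single overall number. The sketch below assumes a hypothetical record format (`group`, `predicted`, `actual`) and invented sample data; it is a starting point for an audit, not a complete fairness methodology.

```javascript
// Compute accuracy separately for each group in an evaluation set.
function accuracyByGroup(records) {
  const stats = {};
  for (const { group, predicted, actual } of records) {
    if (!stats[group]) stats[group] = { correct: 0, total: 0 };
    stats[group].total += 1;
    if (predicted === actual) stats[group].correct += 1;
  }
  return Object.fromEntries(
    Object.entries(stats).map(([group, s]) => [group, s.correct / s.total])
  );
}

// Invented evaluation records for illustration.
const records = [
  { group: 'A', predicted: 'match', actual: 'match' },
  { group: 'A', predicted: 'no-match', actual: 'no-match' },
  { group: 'B', predicted: 'match', actual: 'no-match' },
  { group: 'B', predicted: 'match', actual: 'match' },
];
console.log(accuracyByGroup(records)); // prints { A: 1, B: 0.5 }
```

A large gap between groups, as in this toy data, suggests the training data or labels deserve a closer look before the model is used for sensitive decisions.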

Real-World Limitations

The real world also presents an array of challenges, including diverse lighting conditions, image qualities, and environmental factors that can significantly impact the performance of AI image recognition systems. While these systems may excel in controlled laboratory settings, their robustness in uncontrolled environments remains a challenge. Recognizing objects or faces in low-light situations, foggy weather, or obscured viewpoints necessitates ongoing advancements in AI technology. Achieving consistent and reliable performance across diverse scenarios is essential for the widespread adoption of AI image recognition in practical applications.

Innovations and Breakthroughs in AI Image Recognition

Despite these challenges, AI image recognition continues to evolve, marked by notable innovations and breakthroughs in the field. These advancements are reshaping the landscape of computer vision and expanding the horizons of what AI can achieve:

- Evolution of Deep Learning Models. Notably, the evolution of deep learning models, such as CNNs, has been a game-changer. These models have grown in sophistication and efficiency, enabling them to decipher complex visual data with unprecedented accuracy and speed. The development of novel architectures and training techniques has further boosted their performance, making them indispensable in a wide range of applications.

- Integration of Augmented Reality (AR). AR has been seamlessly integrated into AI image recognition, ushering in enhanced recognition experiences. AR overlays digital information onto the real world, augmenting our perception and interaction with the environment. This integration opens exciting possibilities for gaming, retail, and education industries.

- The Rise of Edge Computing. Edge computing brings processing capabilities closer to the data source, allowing for real-time analysis and decision-making. This is particularly valuable in scenarios where instant responses are crucial, such as autonomous vehicles and industrial automation.

- Multi-Modal Learning. AI image recognition is increasingly embracing multi-modal learning, which involves combining information from various sources, such as text, audio, and video, to gain a more comprehensive understanding of the content. This holistic approach enables AI systems to analyze and interpret images in the context of their surroundings and associated information, making them more versatile and adaptable.

- Continual Learning and Few-Shot Learning. Continual learning allows AI systems to accumulate knowledge over time, adapting to changing circumstances without forgetting previous knowledge. Few-shot learning equips AI models to recognize new objects or concepts with minimal training examples, making them highly adaptable to novel situations. These capabilities are vital in applications like robotics and surveillance, where the environment is dynamic and unpredictable.
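Few-shot learning can be approximated with a nearest-prototype classifier: average the feature vectors of the handful of examples for each class, then assign a new image to the closest average. The sketch below assumes the images have already been converted to feature vectors (embeddings) by some pretrained model; the two-dimensional vectors and all function names are hypothetical and chosen only to keep the example readable.

```javascript
// Average a list of equal-length vectors into a single prototype vector.
function centroid(vectors) {
  const sums = new Array(vectors[0].length).fill(0);
  for (const vector of vectors) {
    vector.forEach((value, i) => {
      sums[i] += value;
    });
  }
  return sums.map((s) => s / vectors.length);
}

// Squared Euclidean distance between two vectors.
function squaredDistance(a, b) {
  return a.reduce((acc, value, i) => acc + (value - b[i]) ** 2, 0);
}

// Build one prototype per label from a few example embeddings.
function buildPrototypes(examplesByLabel) {
  return Object.fromEntries(
    Object.entries(examplesByLabel).map(([label, vectors]) => [label, centroid(vectors)])
  );
}

// Assign a new embedding to the label with the nearest prototype.
function classifyFewShot(embedding, prototypes) {
  let bestLabel = null;
  let bestDistance = Infinity;
  for (const [label, prototype] of Object.entries(prototypes)) {
    const distance = squaredDistance(embedding, prototype);
    if (distance < bestDistance) {
      bestDistance = distance;
      bestLabel = label;
    }
  }
  return bestLabel;
}

// Two made-up example embeddings per class.
const prototypes = buildPrototypes({
  cat: [[1, 0], [0.8, 0.2]],
  dog: [[0, 1], [0.2, 0.8]],
});
console.log(classifyFewShot([0.9, 0.1], prototypes)); // prints cat
```

Adding a new class only requires computing one more prototype, which is why this style of approach adapts quickly to new objects.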

Getting Started with AI Image Recognition and Cloudinary

Innovations and breakthroughs in AI image recognition have paved the way for remarkable advancements in various fields, from healthcare to e-commerce. Cloudinary, a leading cloud-based image and video management platform, offers a comprehensive set of tools and APIs for AI image recognition, making it an excellent choice for both beginners and experienced developers. Let’s take a closer look at how you can get started with AI image recognition using Cloudinary’s platform.

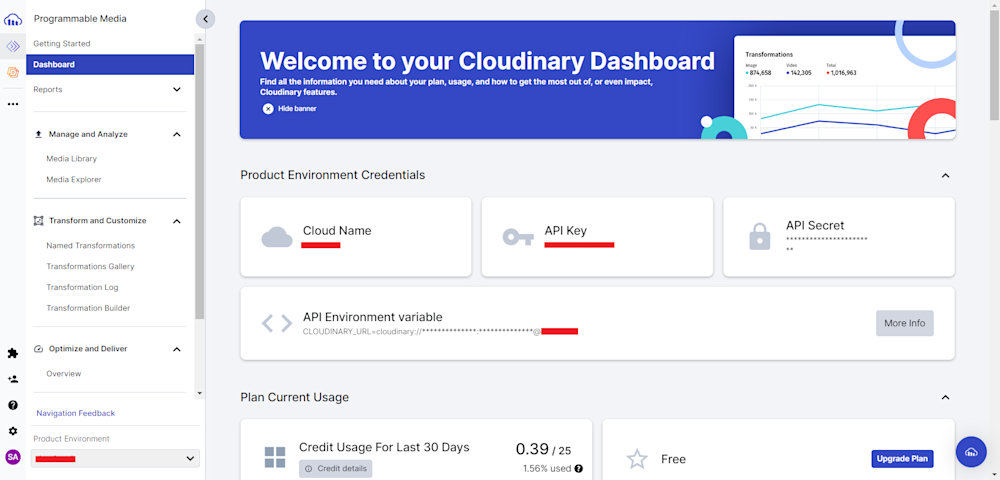

Before we begin, you’ll need to have an active Cloudinary account. If you’re new to Cloudinary, head over to their website and sign up for a free account.

Next, log in to your Cloudinary account and retrieve your Product Environment Credentials.

Now, create a Node.js project in a directory of your choosing and install the Cloudinary Node.js SDK using npm:

```
npm install cloudinary
```

Create a .js file and start by configuring your Cloudinary SDK with your account credentials:

```javascript
const cloudinary = require('cloudinary').v2;

cloudinary.config({
  cloud_name: "your-cloud-name",
  api_key: "your-api-key",
  api_secret: "your-api-secret"
});
```

Remember to replace your-cloud-name, your-api-key, and your-api-secret with your Cloudinary credentials.
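If you would rather not hard-code credentials in a source file, one option is to read them from environment variables. The helper below is a sketch under assumptions: the function name and the three variable names (`CLOUDINARY_CLOUD_NAME`, `CLOUDINARY_API_KEY`, `CLOUDINARY_API_SECRET`) are hypothetical choices for this example, not names the SDK requires. It only builds the same options object passed to `cloudinary.config` above.

```javascript
// Build the Cloudinary config object from environment variables.
// The variable names are this example's own convention (an assumption).
function cloudinaryConfigFromEnv(env) {
  const variables = {
    cloud_name: 'CLOUDINARY_CLOUD_NAME',
    api_key: 'CLOUDINARY_API_KEY',
    api_secret: 'CLOUDINARY_API_SECRET',
  };
  const config = {};
  for (const [option, variable] of Object.entries(variables)) {
    if (!env[variable]) {
      // Fail early with a clear message instead of sending empty credentials.
      throw new Error(`Missing environment variable ${variable}`);
    }
    config[option] = env[variable];
  }
  return config;
}

// Usage, assuming the cloudinary package is installed and required as above:
// cloudinary.config(cloudinaryConfigFromEnv(process.env));
```

Failing early on a missing variable tends to be easier to debug than an authentication error returned later by an upload call.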

Let’s take a look at how you can automatically detect and tag what appears in an image. Start by creating an Assets folder in your project directory and adding an image to it. Here, we will use the bike image available in Cloudinary’s demo cloud.

Next, create a variable in your project and add the path to the image:

```javascript
// Specify the path to the image you want to analyze
const imageFilePath = 'Assets/bike.jpg';
```

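Finally, use the uploader’s upload method to upload the image, and set the detection parameter to 'cld-fashion' to analyze it. The auto_tagging value of 0.6 is the minimum confidence score a detected item needs in order to be added as a tag: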

```javascript
cloudinary.uploader.upload(imageFilePath, {
  detection: 'cld-fashion',
  auto_tagging: 0.6
})
  .then(result => {
    console.log(result);
  })
  .catch(error => {
    console.error(error);
  });
```

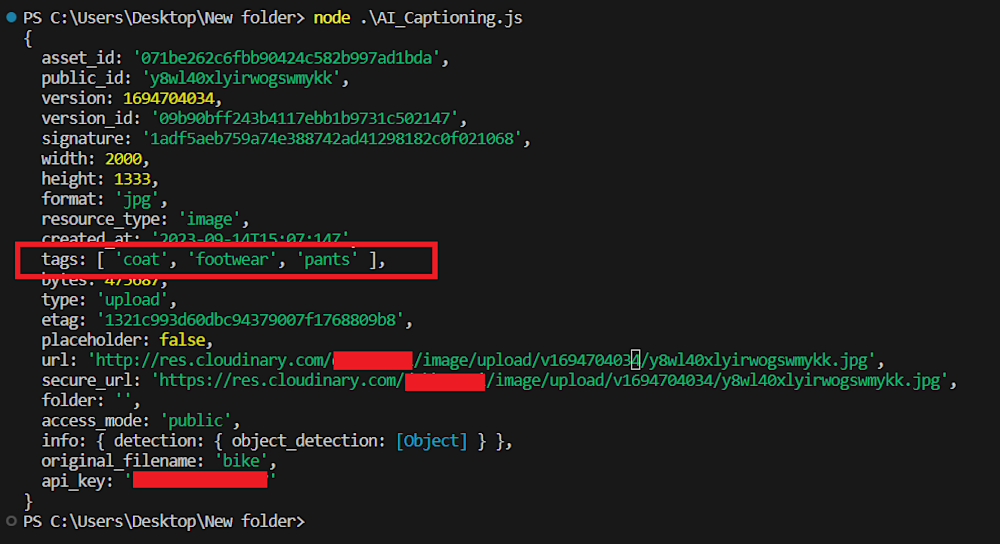

Here are the results:
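The full upload response is fairly long, so it can help to pull out just the fields you care about. The helper below is a small sketch: `summarizeUpload` is a hypothetical name, and the sample object is invented for illustration rather than copied from a real response. It relies on the `public_id`, `secure_url`, and `tags` fields of the upload result.

```javascript
// Reduce an upload response to the fields most relevant for auto-tagging.
function summarizeUpload(result) {
  return {
    publicId: result.public_id,
    url: result.secure_url,
    // tags may be absent if nothing passed the auto_tagging threshold.
    tags: Array.isArray(result.tags) ? result.tags : [],
  };
}

// Invented sample shaped like an upload response (values are placeholders).
const sampleResult = {
  public_id: 'bike',
  secure_url: 'https://res.cloudinary.com/your-cloud-name/image/upload/bike.jpg',
  tags: ['example-tag'],
};
console.log(summarizeUpload(sampleResult));
```

In the upload call above, you could replace `console.log(result)` with `console.log(summarizeUpload(result))` to print only this summary.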

Wrapping Up

AI’s transformative impact on image recognition is undeniable, particularly for those eager to explore its potential. Integrating AI-driven image recognition into your toolkit unlocks a world of possibilities, propelling your projects to new heights of innovation and efficiency. As you embrace AI image recognition, you gain the capability to analyze, categorize, and understand images with unparalleled accuracy. This technology empowers you to create personalized user experiences, simplify processes, and delve into uncharted realms of creativity and problem-solving.

Whether you’re a developer, a researcher, or an enthusiast, you now have the opportunity to harness this incredible technology and shape the future. With Cloudinary as your assistant, you can expand the boundaries of what is achievable in your applications and websites. You can streamline your workflow process and deliver visually appealing, optimized images to your audience.

Get started with Cloudinary today and provide your audience with an image recognition experience that’s genuinely extraordinary.

Interested in more from Cloudinary?

- Learn more about Image Recognition

- Discover Amazon Rekognition Celebrity Detection

- Dive into Amazon Rekognition Auto Tagging