Low-latency video streaming has become central to everything from gaming and sports to online classrooms and interactive events. Nothing ruins the experience faster than long delays between what’s happening and what the viewer actually sees. But how does low-latency video streaming work, and how can you integrate it into your workflows?

Key takeaways:

- Low-latency streaming cuts the delay between capturing video and displaying it to a few seconds or less, which is crucial for real-time experiences like gaming and live sports.

- It works by sending smaller chunks of video more often over protocols such as LL-HLS or WebRTC, which minimizes the lag added by encoding, buffering, and network delays while keeping playback smooth.

In this article:

- What is Latency and Low-Latency Video Streaming?

- The Advantages of Low-Latency Video Streaming

- The Different Low-Latency Video Streaming Protocols

- How to Reduce Streaming Latency

- When Should You Use Low-Latency Video Streaming?

What is Latency and Low-Latency Video Streaming?

Latency is the time it takes for a video signal to travel from the source to a viewer’s screen. In streaming, this delay happens between when a camera captures an image and when that image appears on a device. Every step in this process (capturing, encoding, transmitting, buffering, and decoding) adds a fraction of a second that builds into noticeable lag.
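To make the idea of accumulated delay concrete, here is a minimal sketch that adds up a per-stage latency budget. The stage names follow the list above; the millisecond values are hypothetical placeholders, not measurements from any real pipeline.

```python
# Hypothetical per-stage delays in milliseconds; real values depend on
# your camera, encoder settings, network, and player configuration.
STAGE_DELAYS_MS = {
    "capture": 33,
    "encode": 150,
    "transmit": 80,
    "buffer": 2000,
    "decode": 40,
}


def total_latency_ms(stage_delays):
    """Return the end-to-end delay as the sum of all stage delays."""
    return sum(stage_delays.values())


def largest_contributor(stage_delays):
    """Return the name of the stage that adds the most delay."""
    return max(stage_delays, key=stage_delays.get)


total = total_latency_ms(STAGE_DELAYS_MS)
slowest = largest_contributor(STAGE_DELAYS_MS)
print(f"Total latency: {total} ms, largest contributor: {slowest}")
```

With numbers like these, the player buffer dominates the total, which is why most low-latency techniques focus on shrinking it.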

So, what causes high latency? Network congestion, long transmission distances, and the use of complex codecs can all slow delivery. Traditional streaming protocols such as HLS or DASH were designed for stability and scalability: players buffer several multi-second segments before playback starts, which increases delay. As viewer expectations shift toward immediacy, low-latency streaming has become essential for modern video platforms.

Low-latency streaming minimizes this delay to create near real-time video delivery. It keeps live events, sports, gaming, and interactive broadcasts closely aligned with what is happening at the source. By reducing lag, it allows audiences to engage naturally and respond without interruption.

Latency can be defined in one of two ways:

- Glass-to-glass latency: The total time from when the camera lens captures a frame to when that frame appears on a viewer’s display.

- Wall-clock time: The actual elapsed time between a live event and when the viewer sees it, measured using a consistent external clock reference.
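Either definition can be measured by comparing timestamps taken against a shared clock. The sketch below assumes you already have a capture time and a display time for each frame (for example, from a timecode burned into the video); the function name and the sample values are illustrative only.

```python
from statistics import mean


def frame_latencies(capture_times, display_times):
    """Return per-frame latency in seconds.

    Both lists hold timestamps in seconds read from the same clock,
    with one entry per frame in matching order.
    """
    if len(capture_times) != len(display_times):
        raise ValueError("Each captured frame needs a matching display time")
    return [shown - captured for captured, shown in zip(capture_times, display_times)]


# Hypothetical sample: three frames captured one second apart.
captured = [0.0, 1.0, 2.0]
displayed = [1.8, 2.9, 4.1]

latencies = frame_latencies(captured, displayed)
print(f"Average latency: {mean(latencies):.2f} s, worst case: {max(latencies):.2f} s")
```

Tracking the worst case alongside the average matters, because occasional spikes are what viewers tend to notice.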

How Low-Latency Streaming Works

Low-latency streaming achieves faster delivery by optimizing how data moves through the network. Instead of waiting for large chunks of video to buffer, the encoder produces much smaller pieces and sends them continuously: short partial segments with Low-Latency HLS (LL-HLS), or a continuous stream of media packets with WebRTC. These technologies shorten the buffer window, improve connection efficiency, and adjust dynamically to network conditions, resulting in a more immediate and interactive viewing experience.
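A rough way to see why smaller pieces help is to estimate how much video a player holds before it starts playing. The sketch below assumes a simple model in which the player buffers a fixed number of segments; the segment durations and buffer depth are illustrative, not values mandated by any protocol.

```python
def buffer_latency_seconds(segment_duration, buffered_segments):
    """Estimate the delay added by buffering whole segments before playback."""
    return segment_duration * buffered_segments


# Illustrative comparison: same buffer depth, different segment lengths.
traditional = buffer_latency_seconds(segment_duration=6, buffered_segments=3)
shortened = buffer_latency_seconds(segment_duration=2, buffered_segments=3)

print(f"6-second segments: about {traditional} s behind live")
print(f"2-second segments: about {shortened} s behind live")
```

This ignores encoding and network time, so treat the result as a lower bound on the delay rather than a prediction.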

The Advantages of Low-Latency Video Streaming

Delivering video content without delay transforms how viewers experience live and interactive media. Low-latency streaming ensures that audiences stay connected to the moment, whether they’re watching a product launch, taking part in a virtual event, or engaging with real-time broadcasts. For brands and developers, it means creating more responsive, immersive experiences that strengthen engagement and trust.

Using low-latency streaming offers you:

- An enhanced viewer experience: Audiences see and react to events as they happen, creating a stronger sense of connection and authenticity.

- Improved interactivity: Real-time responses enable smooth communication during live events, Q&As, and interactive broadcasts.

- Higher engagement and retention: Reducing delays keeps users involved longer and encourages repeat viewing.

- Better synchronization across devices: Low latency aligns playback across multiple screens, delivering a seamless experience for distributed audiences.

- Optimized performance for modern applications: From gaming to e-commerce, real-time streaming supports use cases where every second counts.

The Different Low-Latency Video Streaming Protocols

Several streaming protocols power low-latency streaming, and each has its strengths. Let’s explore the most common ones:

Low-Latency HLS

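Apple’s HTTP Live Streaming (HLS) is widely supported. Its low-latency extension (LL-HLS) splits each segment into short partial segments and lets players fetch them as soon as they are published, using blocking playlist reloads and preload hints, while still maintaining HLS’s reliability. Early drafts of the specification relied on HTTP/2 push, but that requirement was later dropped.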
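The low-latency features show up as extra tags in the media playlist. The sketch below reads a few of them from a hand-written sample playlist; the tag names come from the HLS specification, while the file names, durations, and the `summarize_ll_hls` helper are made up for illustration.

```python
import re

# Matches KEY=value pairs, where the value may be a quoted string.
ATTRIBUTE_PATTERN = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

# Hand-written sample; a real playlist carries more tags and segments.
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:9
#EXT-X-TARGETDURATION:4
#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=1.0
#EXT-X-PART-INF:PART-TARGET=0.333
#EXT-X-MEDIA-SEQUENCE:100
#EXTINF:4.0,
segment100.mp4
#EXT-X-PART:DURATION=0.333,URI="segment101.part0.mp4"
#EXT-X-PART:DURATION=0.333,URI="segment101.part1.mp4"
#EXT-X-PRELOAD-HINT:TYPE=PART,URI="segment101.part2.mp4"
"""


def parse_attributes(attribute_text):
    """Turn an HLS attribute list into a dict, stripping surrounding quotes."""
    return {key: value.strip('"') for key, value in ATTRIBUTE_PATTERN.findall(attribute_text)}


def summarize_ll_hls(playlist_text):
    """Collect the low-latency settings and partial segments from a playlist."""
    summary = {"part_target": None, "part_hold_back": None, "parts": [], "preload_hint": None}
    for line in playlist_text.splitlines():
        line = line.strip()
        if not line.startswith("#EXT-X-"):
            continue
        tag, _, attribute_text = line.partition(":")
        attributes = parse_attributes(attribute_text)
        if tag == "#EXT-X-PART-INF":
            summary["part_target"] = float(attributes["PART-TARGET"])
        elif tag == "#EXT-X-SERVER-CONTROL" and "PART-HOLD-BACK" in attributes:
            summary["part_hold_back"] = float(attributes["PART-HOLD-BACK"])
        elif tag == "#EXT-X-PART":
            summary["parts"].append(attributes["URI"])
        elif tag == "#EXT-X-PRELOAD-HINT":
            summary["preload_hint"] = attributes["URI"]
    return summary


summary = summarize_ll_hls(SAMPLE_PLAYLIST)
print(summary)
```

In this sample, the player can start on parts of segment 101 before the full segment exists, and the preload hint tells it which part to request next.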

DASH

Dynamic Adaptive Streaming over HTTP (MPEG-DASH) reduces latency by using shorter segments and faster manifest updates. It’s flexible, but major browsers don’t play it natively. As of 2025, browser playback relies on JavaScript players such as dash.js, which build on Media Source Extensions.

WebRTC

Designed for real-time communications like video calls, WebRTC can achieve sub-second latency. It’s peer-to-peer, making it ideal for small interactive sessions but harder to scale for mass broadcasting.

SRT

Secure Reliable Transport (SRT) video streaming is an open-source protocol that optimizes delivery over unpredictable networks. It’s great for contribution feeds (like broadcasters sending video to studios) where stability and speed are both essential.

How to Reduce Streaming Latency

You can use a few approaches to cut down on streaming delays. Here are the most effective ones:

- Use Smaller Segment Sizes: Shorter chunks reduce buffering time.

- Enable Chunked Transfer Encoding: Send parts of a segment before it’s complete.

- Choose the Right Streaming Protocol: Pick HLS, DASH, WebRTC, or SRT depending on your use case.

- Distribute Content via a Low Latency CDN: Deliver streams closer to your viewers.

- Implement Adaptive Bitrate Streaming: Adjust quality dynamically to avoid stalls.

- Reduce Server-Side Processing Time: Speed up transcoding, packaging, and encryption.

- Leverage Edge Computing: Cache and process content closer to your audience.

These techniques work best together, because delay accumulates at every stage of delivery: trimming segment sizes helps little if transcoding is slow or the CDN edge is far from your viewers.
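As one example from the list above, adaptive bitrate streaming comes down to picking the best rendition the viewer’s connection can sustain. The sketch below is a simplified selection rule, not the algorithm any particular player uses; the bitrate ladder and the safety factor are hypothetical.

```python
# Hypothetical bitrate ladder in kilobits per second.
RENDITIONS_KBPS = {"1080p": 5000, "720p": 2800, "480p": 1400, "360p": 800}


def pick_rendition(throughput_kbps, renditions=RENDITIONS_KBPS, safety_factor=0.8):
    """Pick the highest-bitrate rendition that fits within the measured throughput.

    The safety factor leaves headroom so small throughput dips do not stall playback.
    If nothing fits, fall back to the lowest-bitrate rendition.
    """
    budget = throughput_kbps * safety_factor
    affordable = {name: rate for name, rate in renditions.items() if rate <= budget}
    if not affordable:
        return min(renditions, key=renditions.get)
    return max(affordable, key=affordable.get)


for measured in (10000, 4000, 500):
    print(f"{measured} kbps available -> {pick_rendition(measured)}")
```

Real players also weigh buffer level and recent switches, but the principle is the same: drop quality before the buffer runs dry.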

Low-Latency Video Streaming through Cloudinary

Applying these techniques yourself can make a big difference. But for a simpler, scalable solution, Cloudinary can handle low-latency streaming for you. First, create a free Cloudinary account and get your cloud name, API key, and API secret from the dashboard.

Next, install the Cloudinary Python SDK using the pip install cloudinary command. Then, we will configure the SDK with our credentials in our Python script:

```python
import cloudinary
import cloudinary.uploader
from cloudinary.utils import cloudinary_url

# Configure the SDK with the credentials from your dashboard
cloudinary.config(
    cloud_name='your_cloud_name',
    api_key='your_api_key',
    api_secret='your_api_secret'
)
```

After configuring Cloudinary with your account credentials, the first step is to upload our video and prepare it for HLS streaming. This is done by calling cloudinary.uploader.upload() with the local video file and specifying a streaming profile:

```python
# Upload video and apply streaming profile
response = cloudinary.uploader.upload(
    "elephants.mp4",  # Local file path
    public_id="elephant_vid",
    resource_type="video",
    streaming_profile="full_hd"  # Cloudinary will transcode for HLS
)

print("Uploaded video info:", response)
```

For example, using "full_hd" for streaming_profile tells Cloudinary to transcode the video into the set of resolutions and bitrates needed for adaptive HLS playback. The response from this upload contains metadata about the video, including its public ID, URL, and status, which we can print to confirm that the upload succeeded.

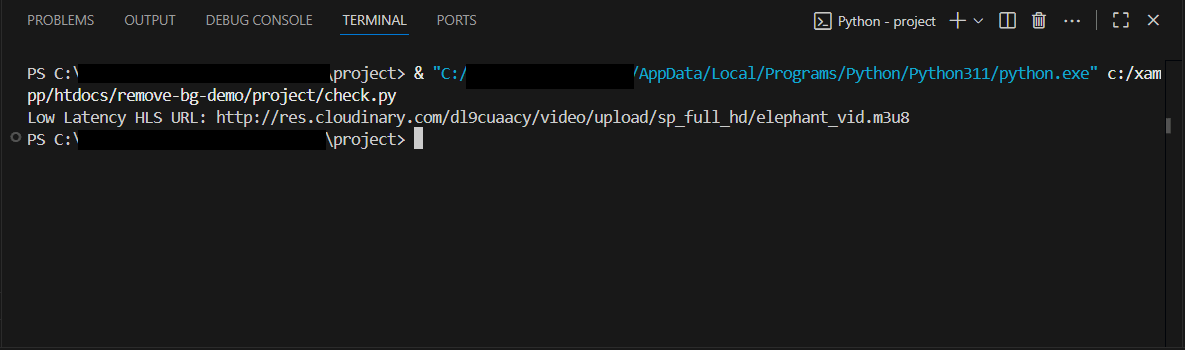
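Cloudinary’s adaptive streaming documentation also describes requesting the HLS renditions as an eager transformation, so they are generated at upload time rather than on first request. The sketch below only builds the keyword arguments for such a call; the `build_streaming_upload_options` helper is hypothetical, and it assumes the `eager` and `eager_async` upload parameters behave as documented for your account.

```python
def build_streaming_upload_options(public_id, profile="full_hd"):
    """Build keyword arguments for an upload that pre-generates HLS renditions.

    Hypothetical helper: it only assembles a dict and makes no network calls.
    """
    return {
        "public_id": public_id,
        "resource_type": "video",
        # Ask for the HLS output of the chosen streaming profile up front.
        "eager": [{"streaming_profile": profile, "format": "m3u8"}],
        # Generate the renditions in the background instead of blocking the upload.
        "eager_async": True,
    }


options = build_streaming_upload_options("elephant_vid")
print(options)

# With the SDK configured as shown earlier, the call would look like:
# response = cloudinary.uploader.upload("elephants.mp4", **options)
```

Because the renditions are generated asynchronously, the playlist may not be playable the instant the upload call returns.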

Once the video is uploaded with a streaming profile, we can generate the HLS playlist URL using cloudinary_url(). Here, we will pass the public ID of the uploaded video, set resource_type to "video", choose type as "upload", select the same streaming profile, and set format="m3u8":

```python
url, options = cloudinary_url(
    "elephant_vid",  # Public ID from upload
    resource_type="video",
    type="upload",
    streaming_profile="full_hd",
    format="m3u8"
)

print("Low Latency HLS URL:", url)
```

Cloudinary then returns a URL that points to the HLS playlist, which references all the small video segments prepared for streaming. Printing this URL allows you to use it in a player or embed it in your web application.
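To check what such a playlist references, you can list the renditions it advertises. The sketch below parses a hand-written master playlist using the standard `#EXT-X-STREAM-INF` tag; the sample bandwidths, codecs, and file names are invented, and a playlist generated for your video will differ.

```python
import re

# Matches KEY=value pairs, where the value may be a quoted string.
ATTRIBUTE_PATTERN = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

# Hand-written sample; fetch your own playlist URL to see the real contents.
SAMPLE_MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=5500000,RESOLUTION=1920x1080,CODECS="avc1.640028,mp4a.40.2"
elephant_vid_1080p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720,CODECS="avc1.4d401f,mp4a.40.2"
elephant_vid_720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360,CODECS="avc1.42c01e,mp4a.40.2"
elephant_vid_360p.m3u8
"""


def list_variants(master_playlist_text):
    """Return one dict per rendition with its URI, bandwidth, and resolution."""
    variants = []
    pending = None  # Attributes of the most recent #EXT-X-STREAM-INF tag
    for line in master_playlist_text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attribute_text = line.split(":", 1)[1]
            pending = {key: value.strip('"') for key, value in ATTRIBUTE_PATTERN.findall(attribute_text)}
        elif line and not line.startswith("#") and pending is not None:
            # The first non-comment line after the tag is the rendition's URI.
            variants.append({
                "uri": line,
                "bandwidth": int(pending["BANDWIDTH"]),
                "resolution": pending.get("RESOLUTION"),
            })
            pending = None
    return variants


variants = list_variants(SAMPLE_MASTER_PLAYLIST)
for variant in variants:
    print(variant["resolution"], variant["bandwidth"], variant["uri"])
```

To inspect a real playlist, you could download its text with `urllib.request.urlopen(url).read().decode()` and pass it to the same function.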

It’s important to note that this .m3u8 URL is not a regular video file and cannot be played directly in many browsers, including most versions of Chrome on desktop (Safari is a notable exception, since it supports HLS natively). An M3U8 file is a playlist that tells a compatible video player where to find the actual video segments.

To play the video in other browsers, you need an HLS-compatible player such as hls.js. Using hls.js, you load the playlist URL into the player, which then fetches and plays the video segments seamlessly, providing smooth low-latency video streaming. This approach ensures that Cloudinary’s CDN delivers the content efficiently, while your viewer experiences minimal buffering and lag.
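One way to try this out is to generate a small test page from Python. The sketch below fills an HTML template that loads hls.js from a public CDN and falls back to native playback where the browser supports it. The playlist URL is a placeholder in the shape Cloudinary URLs usually take, so substitute the one printed by your own script; `build_player_page` is a hypothetical helper.

```python
from string import Template

# Minimal test page: hls.js where supported, native HLS otherwise.
PLAYER_TEMPLATE = Template("""<!DOCTYPE html>
<html>
  <body>
    <video id="player" controls muted></video>
    <script src="https://cdn.jsdelivr.net/npm/hls.js@latest"></script>
    <script>
      const video = document.getElementById("player");
      const source = "$playlist_url";
      if (Hls.isSupported()) {
        // hls.js plays the stream through Media Source Extensions.
        const hls = new Hls({ lowLatencyMode: true });
        hls.loadSource(source);
        hls.attachMedia(video);
      } else if (video.canPlayType("application/vnd.apple.mpegurl")) {
        // Browsers with native HLS support can use the URL directly.
        video.src = source;
      }
    </script>
  </body>
</html>
""")


def build_player_page(playlist_url):
    """Return the HTML for a test page that plays the given HLS playlist."""
    return PLAYER_TEMPLATE.substitute(playlist_url=playlist_url)


# Placeholder URL; replace it with the value printed by cloudinary_url().
PLAYLIST_URL = "https://res.cloudinary.com/your_cloud_name/video/upload/sp_full_hd/elephant_vid.m3u8"

page = build_player_page(PLAYLIST_URL)
print(page)

# To open the page in a browser, save it first, for example:
# from pathlib import Path
# Path("player.html").write_text(page, encoding="utf-8")
```

For production use, pin a specific hls.js version instead of `@latest` so a new release cannot change player behavior unexpectedly.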

If you’re aiming for true live streaming, such as capturing and broadcasting in real-time, you can pair this with an RTMP client like OBS to ingest live video into Cloudinary. Cloudinary then handles distribution via Low-Latency HLS or other streaming formats.

When Should You Use Low-Latency Video Streaming?

Low-latency video streaming is essential when real-time interaction drives the experience. In online classrooms, fitness sessions, or live Q&As, even a few seconds of delay can make conversations feel awkward and disconnected. The same is true in competitive environments like esports, auctions, or sports betting, where fairness depends on everyone seeing events as they happen.

It also plays a major role in engagement-driven platforms. Services like Twitch and interactive webinars rely on the immediacy of streamers and viewers reacting to each other in real time. Beyond entertainment, low latency is critical in mission-sensitive contexts such as telemedicine, financial trading, or live surveillance, where fast and accurate information directly impacts decisions.

For more passive content like on-demand movies, pre-recorded lectures, or concert streams, higher latency of 10–30 seconds is usually acceptable and can even improve stability. Ultimately, the choice depends on your audience’s expectations and whether immediacy outweighs the need for simplicity and reliability.

Wrapping Up

Low-latency video streaming is what makes real-time experiences possible online. By using protocols like Low-Latency HLS, DASH, WebRTC, or SRT, and combining them with smart encoding and CDN strategies, you can deliver streams that feel immediate and engaging.

With Cloudinary, you don’t just get video delivery but also an end-to-end media platform that handles scaling, optimization, and transformations automatically. That means you can focus on building engaging apps, while Cloudinary ensures streams reach global audiences quickly and reliably.

Join Cloudinary today and start exploring how low-latency video streaming can transform your projects!

Frequently Asked Questions

What Is Considered Low-Latency in Video Streaming?

Low latency in video streaming typically refers to a delay of less than five seconds between capture and playback. Ultra-low latency streams can achieve delays as short as one second or less, which is crucial for real-time interactivity.

Why Is Low-Latency Important for Live Streaming?

Low latency ensures that viewers experience events almost as they happen. This is especially important in live sports, gaming, online auctions, and interactive sessions, where even a small delay can affect engagement, fairness, or decision-making.

How Can Cloudinary Help Reduce Video Streaming Latency?

Cloudinary simplifies low-latency video delivery by automatically optimizing streaming formats like HLS and DASH, distributing content via its global CDN, and handling adaptive bitrate streaming. With just a few lines of code, developers can generate streaming URLs that are fast, reliable, and scalable.