Developers are always looking for new and creative ways to deliver content that resonates with how users feel, often using the latest technical innovations on the market, such as Artificial Intelligence (AI) and Machine Learning (ML). What better way to demonstrate innovative uses of these technologies in a consumer market than capturing your users' facial expressions and then serving content based on them!

In this article, we are going to build an app that suggests videos to users based on their facial expressions (i.e., their emotions). To do this, we will use Cloudinary’s Advanced Facial Attributes Detection Add-on and the Cloudinary Video Player.

Cloudinary makes adding image and video optimizations to applications a breeze. Head over to the Solutions Page to learn more about the features offered.

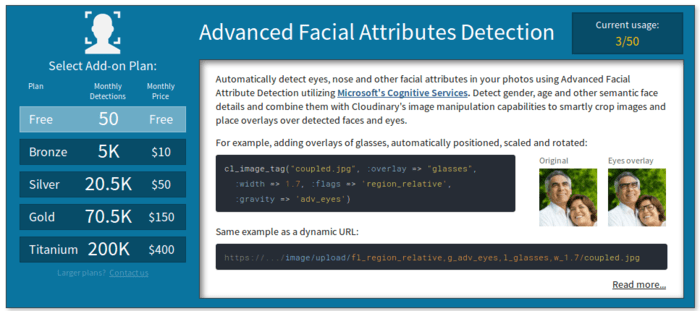

First, sign up for a Cloudinary account. Once your account is created, you can enable the “Advanced Facial Attributes Detection” add-on.

Add the “Advanced Facial Attributes Detection” add-on to your Cloudinary account from the Add-ons section of the Cloudinary console, then follow the on-screen instructions.

Make sure to note your API_KEY, API_SECRET and CLOUD_NAME from your Cloudinary console dashboard; you need them to integrate Cloudinary with your application. Keep the API_SECRET on the server: the browser code in this article only needs the CLOUD_NAME.
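One way to keep these credentials out of your source code is to read them from environment variables on the server. The sketch below is a minimal, hypothetical helper: the variable names `CLOUDINARY_CLOUD_NAME`, `CLOUDINARY_API_KEY` and `CLOUDINARY_API_SECRET` are assumptions, not names Cloudinary requires. It returns an object in the shape accepted by the Node.js SDK's `cloudinary.config(...)` and fails fast when a value is missing.

```javascript
// Hypothetical helper: build a Cloudinary config object from environment
// variables. The variable names are assumptions; use your own.
function loadCloudinaryConfig(env) {
  const config = {
    cloud_name: env.CLOUDINARY_CLOUD_NAME,
    api_key: env.CLOUDINARY_API_KEY,
    api_secret: env.CLOUDINARY_API_SECRET,
    secure: true, // deliver assets over HTTPS
  };

  // Fail at startup instead of at the first upload if anything is missing.
  const missing = ["cloud_name", "api_key", "api_secret"].filter(
    (key) => !config[key]
  );
  if (missing.length > 0) {
    throw new Error(`Missing Cloudinary credentials: ${missing.join(", ")}`);
  }
  return config;
}

// Usage on the server (requires the `cloudinary` npm package):
// const cloudinary = require("cloudinary").v2;
// cloudinary.config(loadCloudinaryConfig(process.env));
```

The Node.js SDK can also pick up a single `CLOUDINARY_URL` environment variable on its own; the helper above is only useful if you prefer separate variables.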

Obtaining the emotion from a facial image with Cloudinary requires running the Advanced Facial Attributes Detection add-on when the image is uploaded. We set the `detection` upload parameter to `adv_face` to tell Cloudinary to run the add-on and register the emotions from the detected user's face.

When the image is successfully uploaded, emotions detected from the face and their confidence values are returned as part of the result. The emotion with the highest confidence is then selected.
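The upload sample that follows calls a `getMaxKey` helper that is not shown in this article. Here is a minimal, hypothetical implementation, assuming the add-on reports emotions as a plain object that maps each emotion name to a numeric confidence (for example `{ happiness: 0.95, neutral: 0.04 }`). It is a simple linear scan over the entries.

```javascript
// Hypothetical implementation of getMaxKey (not shown in the original article).
// Assumes `scores` maps emotion names to numeric confidence values.
function getMaxKey(scores) {
  let bestKey = null;
  let bestValue = -Infinity;
  for (const [key, value] of Object.entries(scores)) {
    // Ignore non-numeric entries so malformed data cannot be selected.
    if (typeof value !== "number") continue;
    if (value > bestValue) {
      bestKey = key;
      bestValue = value;
    }
  }
  return bestKey; // null when there are no numeric scores
}
```

For example, `getMaxKey({ anger: 0.01, happiness: 0.95, neutral: 0.04 })` returns `"happiness"`. On a tie, the emotion that appears first in the object wins, because only a strictly greater value replaces the current best.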

JavaScript Sample:

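```javascript
const cloudinary = require("cloudinary").v2; // assumes cloudinary.config(...) was already called

// Runs inside an Express route handler, where req.body.image holds the
// image to analyze (for example a base64 data URI).
cloudinary.uploader.upload(
  req.body.image,
  { detection: "adv_face" }, // run the Advanced Facial Attributes Detection add-on
  function (error, result) {
    if (error) {
      return res.status(500).json({ status: false, error: error.message });
    }

    const faces = result.info.detection.adv_face.data;
    if (!faces || faces.length === 0) {
      return res.json({ status: false, emotion: null }); // no face detected
    }

    // Confidence value per emotion for the first detected face
    const emotions = faces[0].attributes.emotion;

    // getMaxKey returns the emotion with the highest confidence
    const visible_emotion = getMaxKey(emotions);

    return res.json({ status: true, emotion: visible_emotion });
  }
);
```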

You can then return the detected emotion to the frontend of your application.
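On the frontend, the captured image has to be sent to the server route that runs the upload code, and the `emotion` field read from its JSON response. The sketch below assumes a hypothetical `/detect-emotion` route that expects a JSON body of the form `{ image }` (matching `req.body.image` in the server sample); adjust both to your own app. The `fetchImpl` parameter is only there so the function can be exercised without a real server.

```javascript
// Minimal client-side sketch. The "/detect-emotion" path is hypothetical.
// Sends the image as { image: ... } and resolves with the detected emotion.
async function requestEmotion(
  imageDataUri,
  { endpoint = "/detect-emotion", fetchImpl = fetch } = {}
) {
  const response = await fetchImpl(endpoint, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ image: imageDataUri }),
  });
  if (!response.ok) {
    throw new Error(`Emotion request failed with HTTP ${response.status}`);
  }

  // The server sample responds with { status: true, emotion: "<name>" }.
  const payload = await response.json();
  if (!payload.status) {
    throw new Error("Server did not report a detected emotion");
  }
  return payload.emotion;
}
```

If the server parses JSON with Express's `express.json()`, note that its default body size limit (100kb) can be too small for image data URIs; the middleware accepts a `limit` option to raise it.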

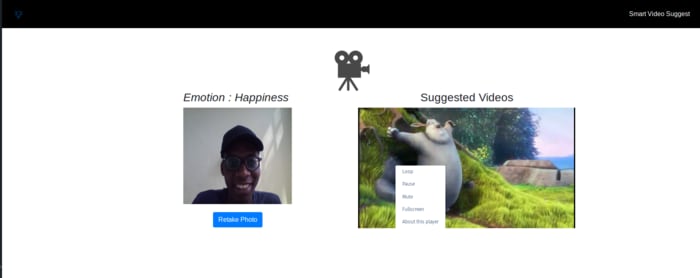

Once the emotion is obtained on the front end, we want to display a set of videos to the user that correspond to the selected emotion. The Cloudinary Video Player makes creating playlists simple. You can create a video player and then populate it with videos from your media library that are tagged with the highest confidence emotion, such as ‘happiness’.
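Because the detected emotion is used directly as the tag name, a video is only included if it carries exactly that tag (for example `happiness`). If you have only tagged videos for some emotions, the player may end up with nothing to play. A simple guard is to fall back to a default tag; the helper name `playlistTagFor` and the `neutral` fallback below are hypothetical choices for illustration.

```javascript
// Hypothetical helper: pick the tag to build the playlist from.
// Falls back to a default tag when no videos are tagged with the emotion.
function playlistTagFor(emotion, taggedEmotions, fallbackTag = "neutral") {
  if (typeof emotion === "string" && taggedEmotions.has(emotion)) {
    return emotion;
  }
  return fallbackTag;
}

// Example: your media library only has videos tagged with these emotions.
const taggedEmotions = new Set(["happiness", "sadness", "neutral"]);
```

With this set, `playlistTagFor("happiness", taggedEmotions)` returns `"happiness"`, while an emotion you have no videos for falls back to `"neutral"`.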

JavaScript Sample:

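```javascript
// Assumes the Cloudinary Core and Cloudinary Video Player scripts are loaded,
// the page contains a <video id="elementID"> element, and CLOUD_NAME holds
// your cloud name (no API secret is needed in the browser).
const emotion = "happiness"; // the emotion returned by the server

const cld = cloudinary.Cloudinary.new({ cloud_name: CLOUD_NAME, secure: true });

// initialize video player
const demoplayer = cld.videoPlayer("elementID");

// create playlist from the videos tagged with the detected emotion
demoplayer.playlistByTag(emotion, {
  sourceParams: { angle: 0 }, // transformation parameters applied to each video
  autoAdvance: 0,             // advance to the next video immediately
  repeat: true,               // restart the playlist after the last video
  presentUpcoming: 5,         // show the "next up" preview 5 seconds before the end
});
```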


In this post, we have shown how to use Cloudinary's Advanced Facial Attributes Detection add-on to build an application that serves videos to users based on how they are feeling at the moment. We also leveraged the Cloudinary Video Player to serve the videos as a playlist. You can learn more about using the video player in the Cloudinary documentation, and feel free to check out the GitHub repository for the full source code. If you are up for the challenge, clone it and build your own Video Suggestion App!
