What Is an API Rate Limit?

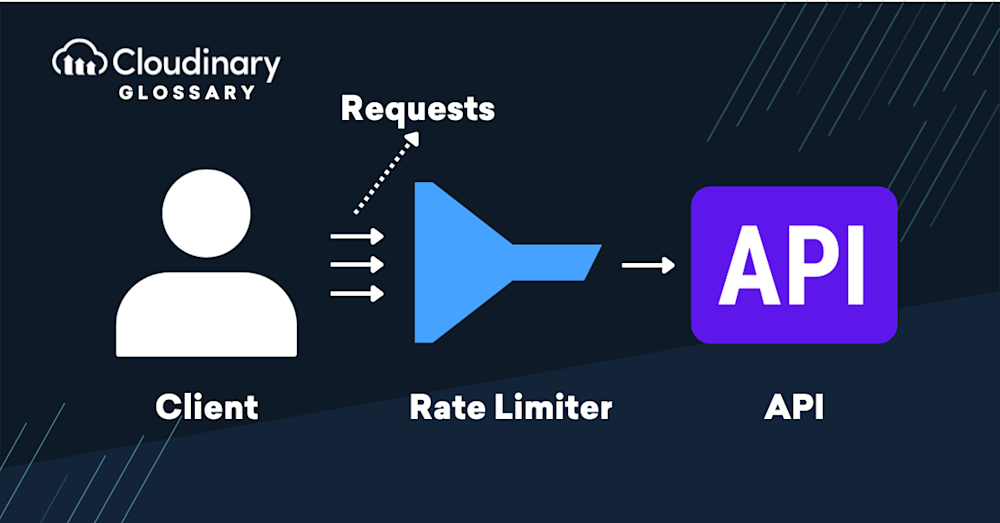

An API rate limit is a restriction imposed by service providers on the number of requests that can be made to an API (Application Programming Interface) within a specific period of time. This is primarily done to maintain a service’s stability, performance, and security by protecting it from excessive usage, spam, or potential denial-of-service attacks. It’s the equivalent of a traffic control system, ensuring the API remains available and responsive for all users.

API rate limits are generally defined by a set quota or threshold, such as the maximum number of requests per minute, hour, or day. When users or applications exceed this limit, their requests may be blocked, delayed, or denied until they fall back within the allowed usage.

These limits can also vary depending on the client identity — for example, a service might assign different thresholds to authenticated users versus anonymous traffic, or apply limits per IP address or API key. Some APIs even use concurrent rate limiting, which restricts the number of simultaneous active requests rather than just the number of requests over time.

Why Does Rate Limiting Exist?

Rate limiting exists to protect an API server from being overwhelmed by high-volume requests and to prevent abuse or misuse. It acts much like a traffic light, allowing a steady traffic flow while maintaining a smooth and orderly user experience. Without rate limiting, an influx of API requests could lead to server crashes and delayed responses, negatively impacting all users. Rate limiting also discourages abusive behaviors, such as scraping or DDoS attacks, ensuring the API remains accessible and valuable for its intended purpose.

It’s also an effective way to shape user behavior. For instance, a social media API might enforce strict limits on POST requests to reduce spam while allowing more generous access to GET requests that retrieve content. This encourages responsible usage patterns and enhances security.

How API Rate Limiting Works

To enforce limits effectively, APIs use rate-limiting algorithms that determine when requests should be allowed, queued, or rejected. Each algorithm has a different way of measuring and controlling request flows:

- Token Bucket Algorithm: This algorithm allows bursts of traffic while enforcing an average rate over time. A “bucket” holds tokens up to a fixed capacity, and each request consumes one. Tokens refill at a fixed rate, so a request proceeds only if a token is available, and the bucket’s capacity caps how large a burst can be.

- Leaky Bucket Algorithm: Similar to token bucket but enforces a fixed output rate. Incoming requests are queued in a “bucket” that leaks at a steady rate. If the bucket overflows, excess requests are dropped.

- Fixed Window Algorithm: Counts the number of requests in a fixed time window (like one minute). Once the limit is hit, all further requests in that window are rejected.

- Sliding Window Algorithm: An improvement over fixed window, this method tracks a rolling time frame (e.g., the last 60 seconds), allowing more precise rate control and reducing spikes at window edges.

- Concurrent Rate Limiting: Limits the number of simultaneous requests that can be active at any given moment. This is especially useful for APIs dealing with long-running or resource-intensive operations, where overall throughput is less critical than concurrent processing limits.
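As an illustration of the first algorithm in the list, here is a minimal token bucket sketch. The class name `TokenBucket`, its parameters, and the injectable `clock` argument are assumptions made for this example; real providers implement this on the server side and their details will differ.

```python
import time


class TokenBucket:
    """Hypothetical token bucket: up to `capacity` tokens, refilled at `refill_rate` per second."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock                # injectable so the behavior can be tested without sleeping
        self.tokens = float(capacity)     # start full, which permits an initial burst
        self.last_refill = clock()

    def allow(self, cost=1):
        """Return True and consume `cost` tokens if enough are available, else False."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Add the tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With `TokenBucket(capacity=3, refill_rate=1.0)`, three back-to-back calls to `allow()` succeed and a fourth is rejected until roughly a second has passed and a token has been refilled.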

Understanding these mechanisms helps you predict behavior and design smarter retry or caching strategies.
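To make the difference between the fixed and sliding window approaches concrete, the sketch below implements both side by side. The class names, the timestamp-log variant of the sliding window, and the injectable `clock` are assumptions chosen for illustration, not how any particular API implements its limits.

```python
import time
from collections import deque


class FixedWindowLimiter:
    """Hypothetical fixed window counter: at most `limit` requests per aligned window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.window_index = None
        self.count = 0

    def allow(self):
        index = int(self.clock() // self.window)
        if index != self.window_index:    # a new window has started: reset the counter
            self.window_index = index
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


class SlidingWindowLimiter:
    """Hypothetical sliding window log: at most `limit` requests in any rolling window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self.timestamps = deque()         # times of accepted requests, oldest first

    def allow(self):
        now = self.clock()
        # Forget accepted requests that have aged out of the rolling window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False
```

With a limit of 2 per 60 seconds, two requests at second 59 followed by two at second 61 all pass the fixed window limiter, because the counter resets at second 60. The sliding window limiter rejects the later pair, since all four fall within the same rolling 60 seconds.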

What Does “API Rate Limit Exceeded” Mean?

“API rate limit exceeded” is an API error message indicating that the client has sent more requests than are allowed within a specific time frame. Over HTTP, it is commonly returned with a 429 Too Many Requests status code. It is a signal that the user or application should wait before sending additional requests.

In many cases, the server response will include a Retry-After HTTP header, specifying how long the client should wait before retrying, either as a number of seconds or as an HTTP date. Respecting this header is not just polite; it is a best practice that helps you avoid further throttling or being blocked outright.
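A client has to turn the Retry-After value into a wait time before it can honor it. The header may carry either a delay in seconds or an HTTP date, so a small helper can normalize both. The function name `retry_after_seconds` and its `now` parameter are assumptions for this sketch; it uses only the Python standard library.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header_value, now=None):
    """Return how many seconds to wait, or None if the header is missing or unparseable."""
    if header_value is None:
        return None
    value = header_value.strip()
    if value.isdigit():                   # form 1: delay in seconds, e.g. "120"
        return float(value)
    try:
        when = parsedate_to_datetime(value)   # form 2: an HTTP date
    except (TypeError, ValueError):
        return None
    if when.tzinfo is None:               # treat a date without an offset as UTC
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    # A date in the past means the client may retry immediately.
    return max(0.0, (when - now).total_seconds())
```

Returning `None` for a missing or malformed header lets the caller fall back to its own delay policy instead of guessing.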

How To Bypass API Rate Limiting

The goal is not to literally “bypass” rate limits but to work within them. That means combining reasonable usage with a few technical tactics so that you get the most out of your quota while respecting the service provider’s resources. Here’s how you can handle API rate limits efficiently:

- Prioritize Essential Requests. Focus on the calls that contribute crucial functionality or data. Limit non-essential requests.

- Optimize Timing of Calls. Spread your requests evenly instead of executing them in large, sporadic batches.

- Implement Caching. Store frequent API responses locally to minimize redundant requests.

- Upgrade Your Access. Many API providers offer tiered packages with higher limits or unlimited access for premium tiers.

- Error Handling and Retry Logic. Implement robust error handling and exponential backoff to gracefully handle situations where the rate limit has been exceeded.

- Respect Retry-After Headers. When a rate limit is hit and a Retry-After header is returned, use it to time your retries appropriately. This prevents aggressive retry loops that can worsen the problem.

- Graceful User Feedback. If a request fails due to rate limits, inform users through appropriate messaging or fallback behaviors. Logging these events can also help track usage patterns or abuse.
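The retry-related practices above can be sketched as a small wrapper that waits for the server-provided delay when there is one and otherwise falls back to exponential backoff with random jitter. Everything named here (`RateLimitError`, `call_with_backoff`, and the default delays) is hypothetical; in practice you would raise the exception from your own HTTP code when the API reports that the limit was exceeded.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error raised by your request code when the rate limit is exceeded."""

    def __init__(self, retry_after=None):
        super().__init__("API rate limit exceeded")
        self.retry_after = retry_after    # seconds from a Retry-After header, if any


def call_with_backoff(request, max_attempts=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep, rng=random.random):
    """Call `request()`; on RateLimitError, wait and retry up to `max_attempts` times."""
    for attempt in range(max_attempts):
        try:
            return request()
        except RateLimitError as err:
            if attempt == max_attempts - 1:
                raise                     # out of attempts: let the caller handle it
            if err.retry_after is not None:
                delay = err.retry_after   # the server said how long to wait
            else:
                # Exponential backoff: the ceiling doubles each attempt, capped at max_delay.
                ceiling = min(max_delay, base_delay * 2 ** attempt)
                delay = ceiling * rng()   # random jitter spreads out competing clients
            sleep(delay)
```

The jitter matters when many clients are throttled at once: without it, they would all retry at the same moments and hit the limit again together. When the final attempt fails, the exception propagates so the application can log it and show the user a fallback message.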

Final Thoughts

Understanding and working within API rate limits is crucial for developers who rely on today’s interconnected web services. These limits, while possibly confounding at first, not only protect the API provider’s resources but also keep the service fair and responsive for everyone who uses it.

Developers can effectively navigate these limits through tactics like prioritizing essential requests, spreading out their calls, implementing caching, upgrading access when necessary, and having robust error handling.

For developers seeking a comprehensive cloud-based solution that simplifies image and video management tasks, Cloudinary has you covered. Our platform enables you to easily upload, store, manage, manipulate, and deliver images and videos with responsive APIs and clear rate limits. This improves your application’s performance and enhances the user experience.

Step into a new era of seamless digital content management and start building with Cloudinary today.