Video and audio accessibility

Last updated: Feb-09-2026

Audio and video content presents unique accessibility challenges because the information is presented over time and may not be perceivable by all users. People with hearing impairments need text alternatives like captions and transcripts, while those with visual impairments benefit from audio descriptions that explain visual content. Additionally, users may need control over audio levels or the ability to pause content that plays automatically.

The most accessible approach is often to let users control when media plays. Autoplaying content can interfere with screen readers, startle users, cause motion sensitivity issues, and consume bandwidth without consent.

Best practice: Provide clear controls and let users initiate playback. If autoplay is necessary, mute videos by default and include prominent play/pause controls.

This section covers Cloudinary's tools and techniques for making time-based media accessible, including generating transcriptions, adding captions, creating audio descriptions, and providing sign language overlays.

Video and audio accessibility considerations

| Consideration | Cloudinary Video Techniques | WCAG Reference |
|---|---|---|
| Think about users who can't hear audio-only content. They'll need text transcripts. For video-only content, consider whether users who can't see the visuals would understand what's happening through text descriptions or audio narration. | 🔧 Alternatives for video-only content; 🔧 Audio and video transcriptions | 1.2.1 Audio-only and video-only (prerecorded) |
| Consider users who can't hear your video content. They'll need captions that show not just dialogue, but also sound effects and other important audio cues that contribute to understanding. | 🔧 Video captions | 1.2.2 Captions (prerecorded) |
| Think about users who can't see your video content. They may need audio descriptions that explain what's happening visually, including actions, scene changes, and other important visual information that isn't conveyed through dialogue alone. | 🔧 Audio descriptions | 1.2.3 Audio description or media alternative (prerecorded); 1.2.5 Audio description (prerecorded); 1.2.7 Extended audio description (prerecorded) |
| For live content, consider how users who are deaf or hard of hearing will follow along with real-time events. They'll need live captions or transcripts that keep pace with the broadcast. | 🔧 Live streaming closed captions | 1.2.4 Captions (live); 1.2.9 Audio-only (live) |
| Consider users who communicate primarily through sign language. They may prefer sign language interpretation over captions for understanding spoken content. | 🔧 Sign language in video overlays | 1.2.6 Sign language (prerecorded) |
| Consider users who need comprehensive text alternatives for multimedia content. They may prefer complete synchronized text alternatives that provide all the same information as your video or audio content, allowing them to choose the format that works best for their needs. | 🔧 Alternatives for video-only content; 🔧 Audio and video transcriptions | 1.2.8 Media alternative (prerecorded) |

Alternatives for video-only content

Video-only content (videos without audio) can be made accessible in two main ways: by providing a written text description that conveys the visual information, or by adding an audio track that narrates what's happening on screen.

Video with written description

Videos containing no audio aren't accessible to people with visual impairments. You can provide a text alternative in the form of a description presented alongside a video, which a screen reader can read:

Video description:

The video takes place in a picturesque, hilly landscape during dusk, featuring rocky formations and a clear sky transitioning in colors from blue to orange hues. Initially, a person is seen standing next to a parked SUV. As the video progresses, another individual joins, and they are seen together near the vehicle. The couple, equipped with backpacks, appears to be enjoying the serene environment. Midway through, they share a hug near the SUV, emphasizing a moment of closeness in the tranquil setting. Towards the end of the video, the couple is observed walking away from the SUV, exploring the scenic, rugged terrain around them.

Video with audio description

As an alternative to providing a video with a written description, you can provide the description as an audio track, using the audio layer parameter (l_audio). The delivery URL changes depending on whether the audio description is included.

With the audio description turned off, the URL contains no audio layer:

https://res.cloudinary.com/demo/video/upload/docs/grocery-store-no-audio.mp4
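As a rough sketch, the two URL states (description off and on) could be built like this in Python. The audio public ID used in the usage lines is a hypothetical placeholder, and the sketch assumes the audio layer is closed with the fl_layer_apply flag, as with other Cloudinary layers; check the audio overlay documentation before relying on the exact form.

```python
from typing import Optional

BASE = "https://res.cloudinary.com/demo/video/upload"
VIDEO = "docs/grocery-store-no-audio.mp4"


def delivery_url(audio_description_id: Optional[str] = None) -> str:
    """Return the video URL, optionally with an audio description layer."""
    if audio_description_id is None:
        # Audio description off: plain delivery URL, no transformation.
        return f"{BASE}/{VIDEO}"
    # Inside a layer, folder separators in the public ID are written as ':'.
    layer_id = audio_description_id.replace("/", ":")
    # Assumption: the layer is applied with fl_layer_apply.
    return f"{BASE}/l_audio:{layer_id}/fl_layer_apply/{VIDEO}"


if __name__ == "__main__":
    print(delivery_url())
    # "docs/grocery-store" is a hypothetical audio asset, for illustration only.
    print(delivery_url("docs/grocery-store"))
```

Keeping the toggle as a single optional argument means the "off" state is always the unmodified URL shown above.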

Audio and video transcriptions

For people with hearing impairments, you can generate transcripts of audio and video files that contain speech using Cloudinary's transcription functionality.

For audio-only files, you can present the text alongside the audio. For example, for this podcast, you can generate the transcript and display the wording below the file:

Transcript:

Tonight on Quest, nanotechnology. It's a term that's become synonymous with California's high-tech future. But what are these mysterious nanomaterials and machines? And why are they so special? Come along as we take an incredible journey into the land of the unimaginably small.

Types of transcription services

Cloudinary offers multiple transcription options to suit different needs and workflows:

| Service | Key Features | Formats | Learn More |
|---|---|---|---|
| Cloudinary Video Transcription (Built-in service) | • Automatic language detection and transcription • Supports translation to multiple languages • Integrates seamlessly with the Cloudinary Video Player • Includes confidence scores for quality assessment | .transcript | 🔧 Learn more |
| Google AI Video Transcription add-on | • Leverages Google's Speech-to-Text API • Supports over 125 languages and variants • Provides speaker diarization (identifying different speakers) | .transcript, VTT, SRT | 🔧 Learn more |
| Microsoft Azure Video Indexer add-on | • Advanced video analysis including speech transcription • Supports multiple languages with auto-detection • Provides additional insights like emotions and topics • Generates comprehensive metadata about video content | .transcript, VTT, SRT | 🔧 Learn more |

Transcript formats and usage

Different services generate transcripts in different formats, each suited for specific use cases:

| Format | Description | Best For | Example |
|---|---|---|---|
| Cloudinary .transcript | JSON format with word-level timing and confidence scores. Each excerpt includes full transcript text, confidence value, and individual word breakdowns with precise start/end times. | Advanced Video Player features like word highlighting, paced subtitles, and confidence-based filtering | Example file |
| Google .transcript | JSON format with word-level timing and confidence scores. Similar structure to Cloudinary but generated via Google's Speech-to-Text API with varying excerpt lengths. | Google-specific integrations and applications requiring Google's speech recognition accuracy | Example file |
| Azure .transcript | JSON format with confidence scores and start/end times. Provides transcript excerpts with timing, but with a different structure from the Cloudinary and Google formats. | Microsoft Azure integrations and applications requiring Azure's video analysis capabilities | Example file |
| VTT files (WebVTT) | Industry standard web-based caption format with timing cues. Supported by most modern video players and browsers. | Web video players, HTML5 video elements, and broad compatibility needs | Example file |
| SRT files (SubRip) | Simple text format with numbered sequences and timing codes. Widely supported across video editing software and players. | Video editing workflows, legacy player support, and simple subtitle implementations | Example file |
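To make the relationship between these formats concrete, here is a minimal Python sketch that turns a .transcript-style JSON structure into plain text (with confidence-based filtering) and into WebVTT cues. The field names (transcript, confidence, words, start_time, end_time) are assumptions based on the description in the table, and the sample timings and confidence values are made up for illustration; inspect a real .transcript file before relying on them.

```python
from typing import Dict, List

# Illustrative sample only: timings and confidence values are invented.
SAMPLE: List[Dict] = [
    {
        "transcript": "Tonight on Quest, nanotechnology.",
        "confidence": 0.92,
        "words": [
            {"word": "Tonight", "start_time": 0.5, "end_time": 0.9},
            {"word": "on", "start_time": 0.9, "end_time": 1.0},
            {"word": "Quest,", "start_time": 1.0, "end_time": 1.5},
            {"word": "nanotechnology.", "start_time": 1.6, "end_time": 2.8},
        ],
    },
    {
        "transcript": "And why are they so special?",
        "confidence": 0.55,
        "words": [
            {"word": "And", "start_time": 3.2, "end_time": 3.4},
            {"word": "special?", "start_time": 4.1, "end_time": 4.8},
        ],
    },
]


def to_plain_text(excerpts: List[Dict], min_confidence: float = 0.0) -> str:
    """Join excerpt texts, skipping excerpts below the confidence threshold."""
    kept = [e["transcript"].strip() for e in excerpts
            if e.get("confidence", 1.0) >= min_confidence]
    return " ".join(kept)


def timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.mmm)."""
    ms = round(seconds * 1000)
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(excerpts: List[Dict]) -> str:
    """Emit one WebVTT cue per excerpt, timed from its first to last word."""
    lines = ["WEBVTT", ""]
    for excerpt in excerpts:
        words = excerpt.get("words") or []
        if not words:
            continue  # no timing information, so no cue can be written
        start, end = words[0]["start_time"], words[-1]["end_time"]
        lines += [f"{timestamp(start)} --> {timestamp(end)}",
                  excerpt["transcript"].strip(), ""]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_plain_text(SAMPLE, min_confidence=0.8))
    print(to_webvtt(SAMPLE))
```

The plain-text output suits a transcript displayed alongside an audio file, while the WebVTT output suits players that expect timed cues.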

Generating transcripts using Cloudinary Video Transcription

You can generate transcripts using several methods:

During upload:
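A minimal Python sketch of an upload request, assuming the Cloudinary Python SDK is installed and configured, and assuming the auto_transcription upload parameter is what triggers the built-in transcription service (confirm the name in the video transcription documentation). The file name in the usage comment is a placeholder.

```python
from typing import Any, Dict


def transcription_upload_options() -> Dict[str, Any]:
    """Upload options that request a transcript (parameter name is an assumption)."""
    return {"resource_type": "video", "auto_transcription": True}


def upload_with_transcript(path: str) -> Dict[str, Any]:
    """Upload a video and request a transcript in the same call."""
    # Imported lazily so the sketch can be read and tested without the SDK.
    import cloudinary.uploader

    return cloudinary.uploader.upload(path, **transcription_upload_options())


# Usage (needs credentials configured, e.g. via CLOUDINARY_URL):
# result = upload_with_transcript("my-video.mp4")  # placeholder file name
```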

For existing videos using the explicit method:
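For a video that is already in your product environment, a similar sketch using the SDK's explicit method might look like this. As before, auto_transcription is an assumed parameter name to verify against the documentation, and the public ID in the usage comment is a placeholder.

```python
from typing import Any, Dict


def transcription_explicit_options() -> Dict[str, Any]:
    """Options for requesting a transcript for an already-uploaded video."""
    return {
        "type": "upload",            # delivery type of the existing asset
        "resource_type": "video",
        "auto_transcription": True,  # assumption: triggers the built-in service
    }


def request_transcript(public_id: str) -> Dict[str, Any]:
    """Ask Cloudinary to generate a transcript for an existing video."""
    # Imported lazily so the sketch can be read and tested without the SDK.
    import cloudinary.uploader

    return cloudinary.uploader.explicit(public_id, **transcription_explicit_options())


# Usage (needs credentials configured):
# result = request_transcript("my-video")  # placeholder public ID
```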

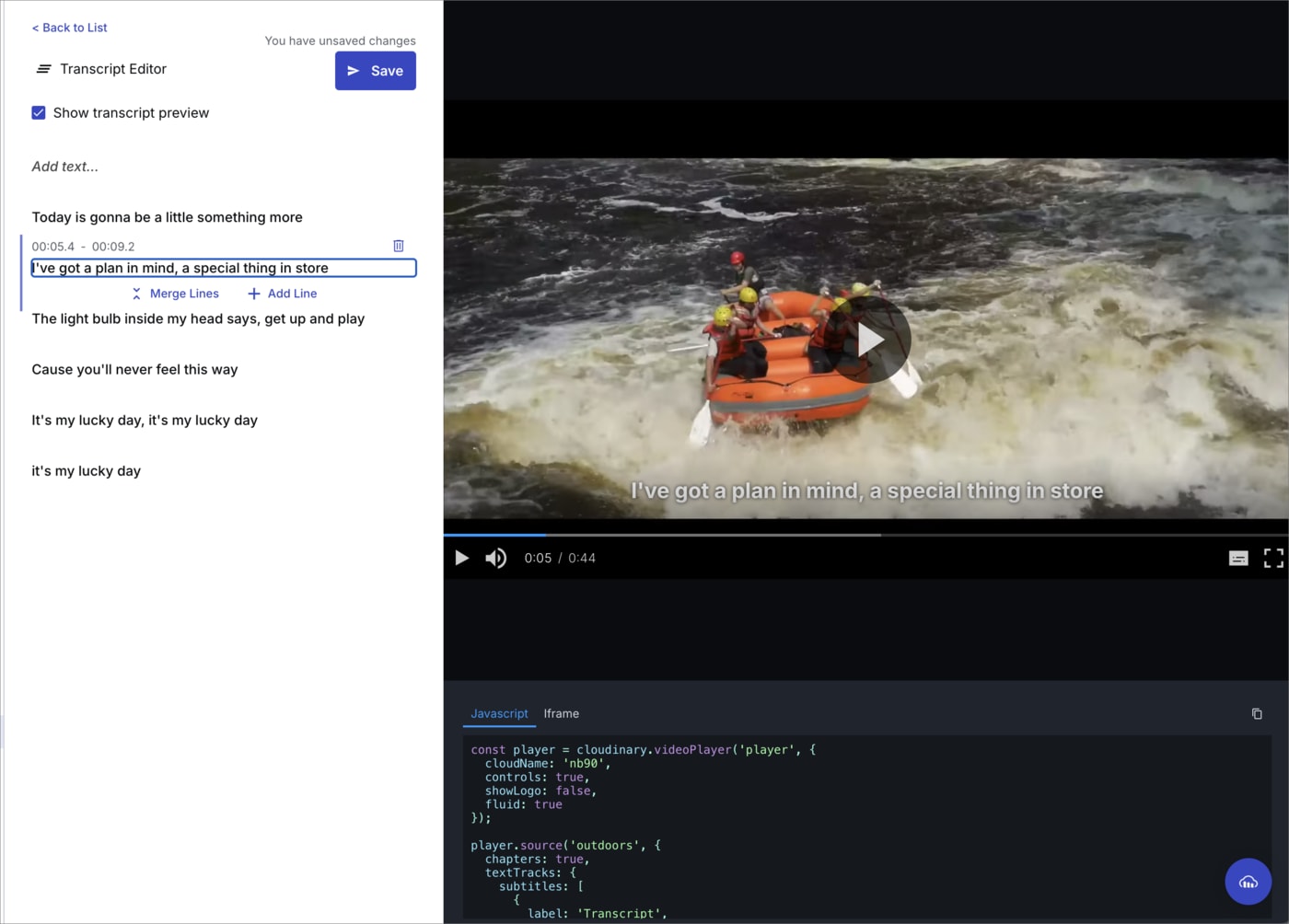

From the Video Player Studio:

Navigate to the Video Player Studio, add your video's public ID, and select the Transcript Editor to generate and edit transcripts directly in the interface.

The transcript editor provides a user-friendly interface for:

- Generating transcripts for existing videos

- Editing transcript content to ensure accuracy

- Adjusting individual word timings

- Adding or removing transcript lines

- Reviewing confidence scores for quality assessment

- Docs: Video transcription

Video captions

For prerecorded audio content in synchronized media, you can supply captions. Using the Cloudinary Video Player, you can display your generated transcriptions in sync with the video.

Once you have generated a transcription, add it as captions to your video by setting the textTracks parameter at the source level.

If you use the Cloudinary video transcription service to generate your transcription, as in this example, you don't need to specify the transcript file in the Video Player configuration - it's added automatically.

Here's the configuration for the video above:
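The player itself is configured in JavaScript, so the following Python sketch only builds the source options as JSON, for example to render into a page server-side. It assumes the captions object accepts label and default fields; the label text is a placeholder, and no url is given because the built-in service's transcript is picked up automatically.

```python
import json
from typing import Any, Dict


def source_options(label: str = "English captions") -> Dict[str, Any]:
    """Source-level options that turn on captions from the built-in transcript."""
    return {
        "textTracks": {
            "captions": {
                "label": label,   # text shown in the player's captions menu
                "default": True,  # show these captions without user action
            }
        }
    }


if __name__ == "__main__":
    # The JSON can be passed as the options argument when setting the player source.
    print(json.dumps(source_options(), indent=2))
```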

Adding VTT and SRT files as captions

To set a VTT or SRT file as the captions (if you use a transcription service other than the Cloudinary video transcription service), specify the file in the url field of the textTracks captions object.

VTT:

SRT:
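As a hedged sketch of both cases, this Python helper builds the textTracks options with the url field set and rejects anything that is not a .vtt or .srt file. The label and language fields and the example URLs are assumptions for illustration; the resulting JSON would be passed to the player as source options.

```python
from typing import Any, Dict


def captions_from_file(url: str, label: str, language: str = "en") -> Dict[str, Any]:
    """Build source options that load captions from a VTT or SRT file."""
    path = url.split("?", 1)[0].lower()  # ignore any query string
    if not path.endswith((".vtt", ".srt")):
        raise ValueError("captions url must point to a .vtt or .srt file")
    return {
        "textTracks": {
            "captions": {
                "label": label,
                "language": language,
                "default": True,
                "url": url,  # the caption file described above
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical file locations, for illustration only.
    print(captions_from_file("https://example.com/captions/outdoors.vtt", "English (VTT)"))
    print(captions_from_file("https://example.com/captions/outdoors.srt", "English (SRT)"))
```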

- Docs: Subtitles and captions

Audio descriptions

You can use the Cloudinary Video Player to display audio descriptions as captions on a video, which a screen reader can read. Additionally, you can set up alternative audio tracks that can provide audio descriptions of a video instead of any audio that's built into the video.

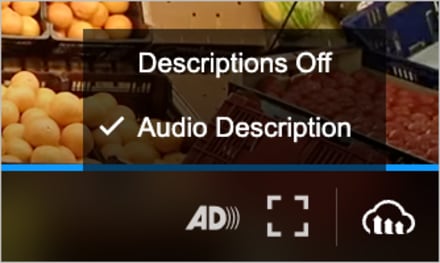

Audio descriptions as captions

When a video has no audio, or no dialogue, it can be helpful to provide a description of the video. You can present this alongside the video, or as captions in the video. Both can be read by screen readers.

In this video, the description appears as captions. Notice the audio descriptions menu at the bottom right of the player, when you play the video, as shown here:

Play the video to see the captions:

To set audio descriptions as captions, which can be read by screen readers, use the descriptions kind of text track:

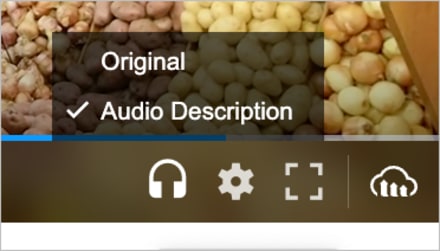

Audio descriptions as alternative audio tracks

A different way of providing a description of a video is to provide an alternative audio track. In this video the description is available as an audio track, which you can select from the audio selection menu at the bottom right of the player when you play the video, as shown here:

Play the video and switch to the audio description:

To define additional audio tracks, use the audio layer transformation (l_audio) with the alternate flag (fl_alternate). This functionality is supported only for videos using adaptive bitrate streaming with automatic streaming profile selection (sp_auto).

Here's the transformation URL that's supplied to the Video Player:
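A URL of this kind could be assembled as in the following Python sketch. It combines the l_audio layer, the fl_alternate flag, and sp_auto as described above. The lang_ and name_ qualifiers on fl_alternate, the fl_layer_apply closing component, the .m3u8 extension, and all public IDs are assumptions for illustration, so compare the output against the alternate audio tracks documentation before using it.

```python
from typing import List, NamedTuple
from urllib.parse import quote


class AudioTrack(NamedTuple):
    public_id: str  # audio asset to offer as an alternative track
    lang: str       # language code shown in the audio menu (assumed qualifier)
    name: str       # track title shown in the audio menu (assumed qualifier)


def alternate_audio_url(cloud_name: str, video_id: str, tracks: List[AudioTrack]) -> str:
    """Build an adaptive-streaming URL with alternative audio tracks."""
    components = []
    for track in tracks:
        layer_id = track.public_id.replace("/", ":")  # ':' replaces '/' in layers
        name = quote(track.name, safe="")             # escape spaces and symbols
        components.append(
            f"fl_alternate:lang_{track.lang};name_{name},l_audio:{layer_id}"
        )
        components.append("fl_layer_apply")
    # sp_auto is required: alternate tracks rely on adaptive bitrate streaming.
    components.append("sp_auto")
    return (f"https://res.cloudinary.com/{cloud_name}/video/upload/"
            + "/".join(components) + f"/{video_id}.m3u8")


if __name__ == "__main__":
    print(alternate_audio_url("demo", "outdoors", [
        AudioTrack("outdoors", "en", "Original"),
        AudioTrack("docs/description", "en", "Description"),  # hypothetical asset
    ]))
```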

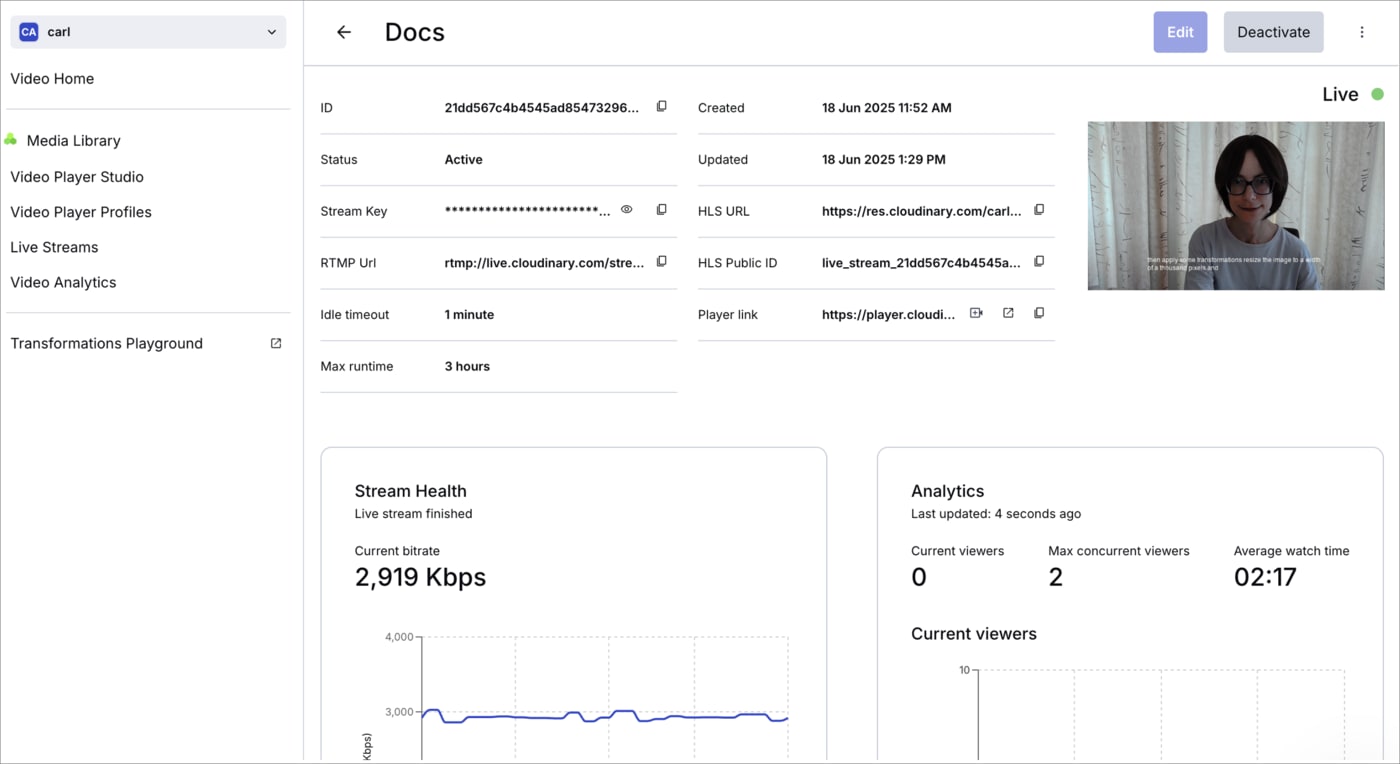

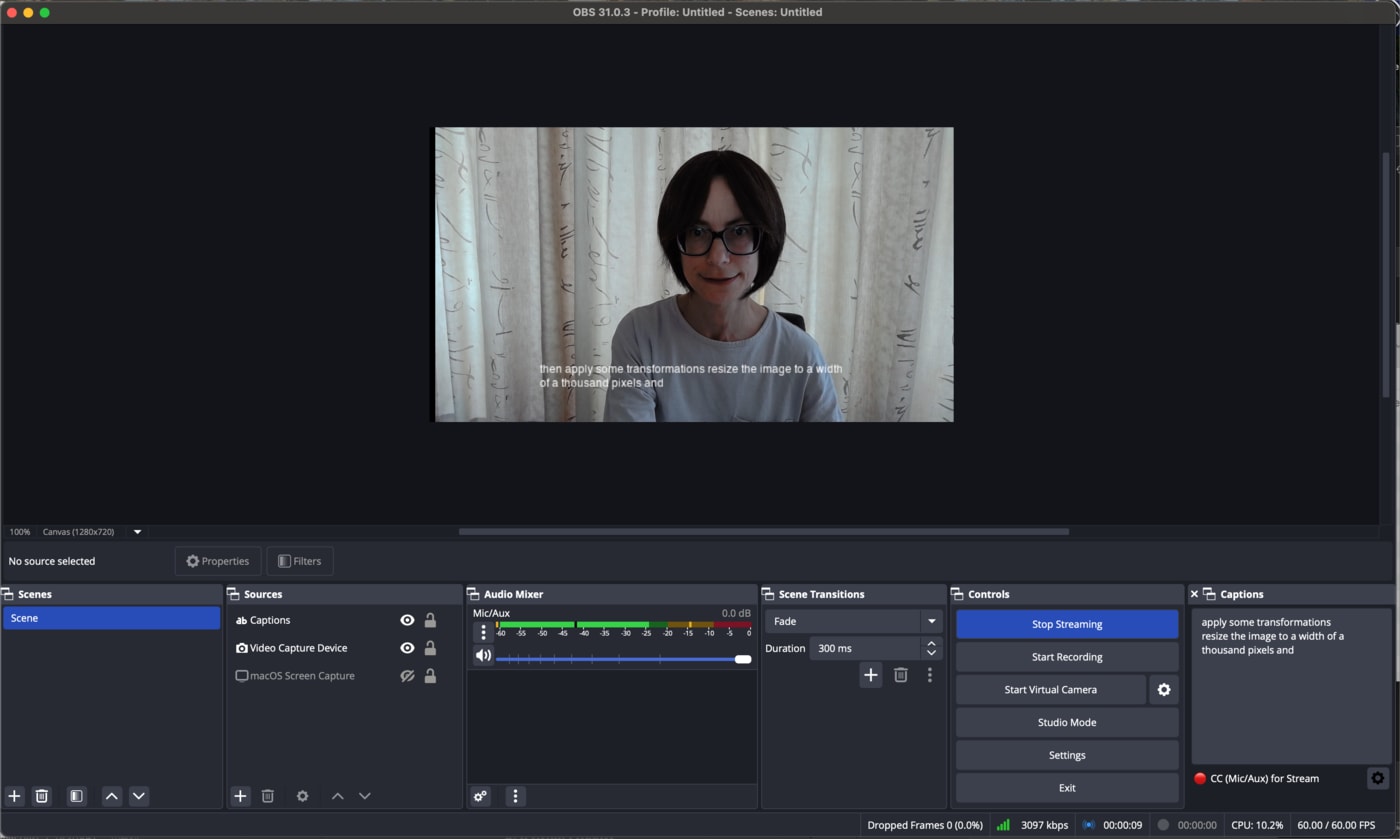

Live streaming closed captions

Add closed captions to your live streams using your preferred streaming software. Cloudinary's live streaming capabilities include support for captions embedded in the H.264 video stream using the CEA-608 standard.

- To begin streaming, navigate to the Live Streams page in the Console and create a new live stream by providing a name. You can also configure caption channels via the API. Once created, you'll receive the necessary streaming details:

  - Input: RTMP URL and Stream Key – Use these credentials to configure your streaming software and initiate the live stream.

  - Output: HLS URL – This is your stream's output URL, which can be used with your video player. The Cloudinary Video Player natively supports live streams for seamless playback.

- Add closed captions to the stream using your streaming software.

Sign language in video overlays

To help deaf users follow the dialogue in a video, you can apply a sign language overlay:

- Upload the video(s) of the sign language and the main video to your product environment.

- Use a video overlay (l_video in URLs) with placement (e.g. g_south_east) and start and end offsets (e.g. so_2.0 and eo_4.0).

- Optionally speed up or slow down the overlay to fit with the dialogue (e.g. e_accelerate:100).

In this example, there are two overlays applied, one between 2 and 4 seconds, and the other at 6 seconds (this one is present until the end of the video so no end offset is required):
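A URL with this shape could be built with the Python sketch below. It uses the parameters named in the steps above (l_video, g_south_east, so_, eo_, e_accelerate) and assumes each layer is closed with fl_layer_apply. The cloud name and all public IDs are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SignOverlay:
    public_id: str                    # sign language video to overlay
    start: float                      # start offset in seconds (so_)
    end: Optional[float] = None       # end offset (eo_); None = until the end
    gravity: str = "south_east"       # placement of the overlay (g_)
    accelerate: Optional[int] = None  # optional speed change (e_accelerate)

    def components(self) -> List[str]:
        """Return the URL components for this overlay, in order."""
        parts = [f"l_video:{self.public_id.replace('/', ':')}"]
        if self.accelerate is not None:
            parts.append(f"e_accelerate:{self.accelerate}")
        apply = f"fl_layer_apply,g_{self.gravity},so_{self.start:.1f}"
        if self.end is not None:
            apply += f",eo_{self.end:.1f}"
        parts.append(apply)
        return parts


def overlay_url(main_video: str, overlays: List[SignOverlay],
                cloud_name: str = "demo") -> str:
    """Chain all overlays in front of the main video's public ID."""
    chain = [part for overlay in overlays for part in overlay.components()]
    return (f"https://res.cloudinary.com/{cloud_name}/video/upload/"
            + "/".join(chain + [main_video]))


if __name__ == "__main__":
    # Hypothetical public IDs: one overlay from 2s to 4s, one from 6s to the end.
    print(overlay_url("docs/main.mp4", [
        SignOverlay("docs/sign1", start=2, end=4),
        SignOverlay("docs/sign2", start=6),
    ]))
```

Leaving end unset omits eo_ entirely, which matches the second overlay staying on screen until the video finishes.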

Other options to try:

- Consider making the background of the signer transparent to see more of the main video (e.g. co_rgb:aca79d,e_make_transparent:0 as a transformation in the video layer). This works best if the background is a different color to anything else in the video.

- Fade the overlay videos in and out for a smoother effect (e.g. e_fade:500/e_fade:-500).

- Docs: Video overlays

- Docs: Video transparency
